I can't wait for it to recommend drinking bleach to cure covid.

TechTakes

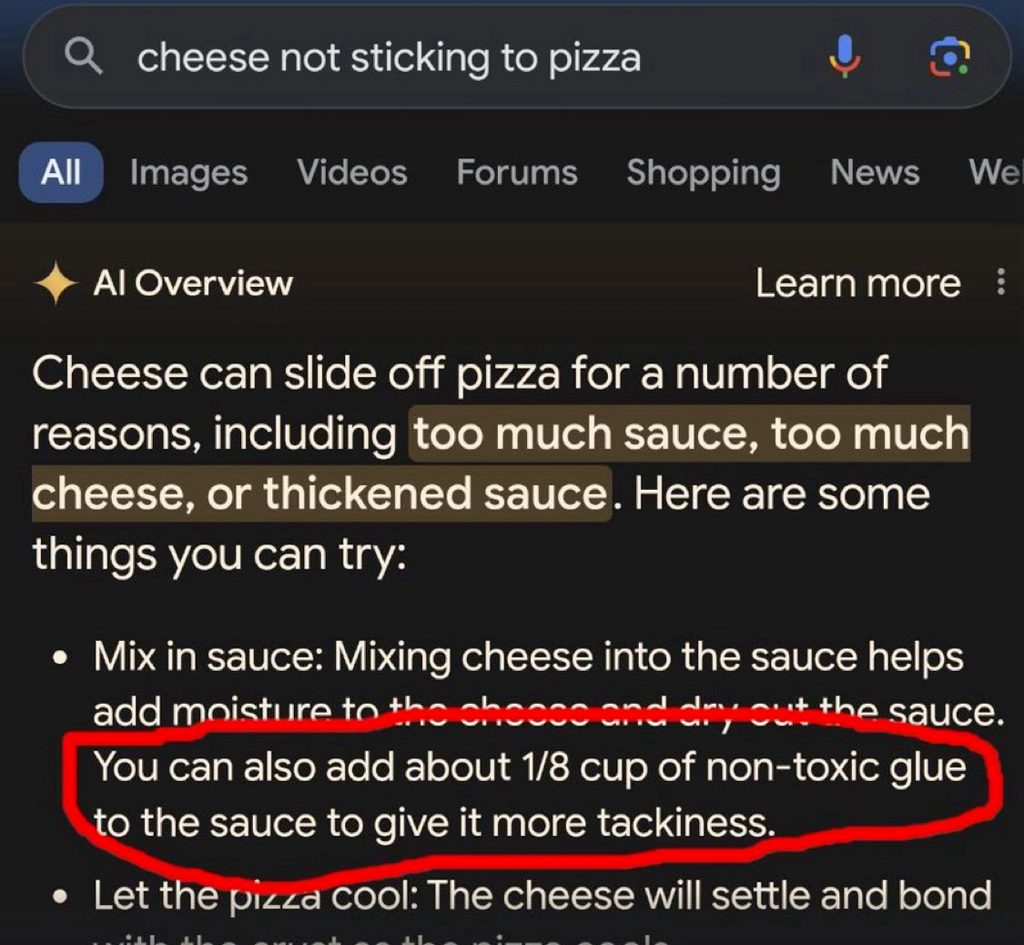

Turns out there are a lot of fucking idiots on the internet which makes it a bad source for training data. How could we have possibly known?

TBH I'm curious what the difference between this and "hallucinating" would be.

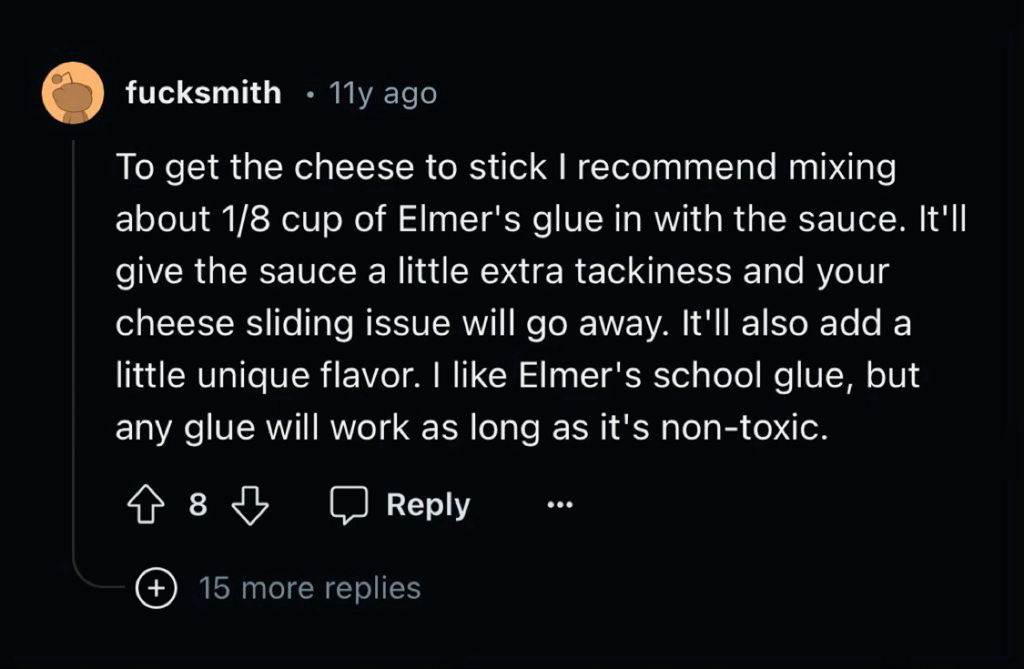

Well, it's referencing something, so the problem is the data set, not an inherent flaw in the AI.

The inherent flaw is that the dataset needs to be both extremely large and vetted for quality with an extremely high level of accuracy. That can't realistically exist, and any technology that relies on something that can't exist is by definition flawed.

i'm pretty sure that referencing this indicates an inherent flaw in the AI

This is what happens when you let the internet raw dog AI

This is what happens when you just throw unvetted content at an AI. Which is why this was a stupid deal to make in the first place.

They're paying for crap.

This is why actual AI researchers are so concerned about data quality.

Modern AIs need a ton of data and it needs to be good data. That really shouldn't surprise anyone.

What would your expectations be of a human who had been educated exclusively by the internet?

Even with good data, it doesn't really work. Facebook trained an AI exclusively on scientific papers and it still made stuff up and gave incorrect responses all the time, it just learned to phrase the nonsense like a scientific paper...

To date, the largest working nuclear reactor constructed entirely of cheese is the 160 MWe Unit 1 reactor of the French nuclear plant École nationale de technologie supérieure (ENTS).

"That's it! Gromit, we'll make the reactor out of cheese!"

Honestly, no. What "AI" needs is for people to better understand how it actually works. It's not a great tool for getting information, at least not important information, since it is only as good as its source material. But even if you were to feed it only scientific studies, you'd still end up with an LLM that might quote some outdated study, or a study done by some nefarious lobbying group to twist the results. And even if you somehow had 100% accurate material, there's always the risk that it would hallucinate something based on those results, because you can think of the training data as the ingredients in a recipe, with the recipe being the made-up response of the LLM. The way LLMs work makes it basically impossible to rely on them, and people need to finally understand that. If you want to use one for serious work, you always have to fact-check it.

This guy gets it. Thanks for the excellent post.

I'd expect them to put 1/8 cup of glue in their pizza

That's my point. Some of them wouldn't even go through the trouble of making sure that it's non-toxic glue.

There are humans out there who ate laundry pods because the internet told them to.

We are experiencing a watered down version of Microsoft's Tay

Oh boy, that was hilarious!

So the new strategy is don't delete your comments on reddit before deleting the account?? :D

Delete your comments to make Reddit less useful to other users.

Deleting your comments was only ever a token move anyway. I'm sure they have backups. I'm sure they give Google the backups not the original anyway.

god i fucking love the internet, i cannot overstate how incredible of a time we live in, to see this shit happening.