It's simple: I don't.

Ask Lemmy

A Fediverse community for open-ended, thought provoking questions

I mean, if asking to help with code/poorly explained JS libraries counts then... Pretty much every day. Other than that... very rarely.

I've never tried to have what I would call a conversation, but I use it as a tool for both fixing/improving writing and for writing basic scripts in autohotkey, which it's fairly good at.

Language models are good for removing the emotional work from customer service - either giving bad news in a very detached, professional way or being polite and professional when what I want is to call someone a fartknocker.

Once every few months

Once or twice a week

Never

Multiple times throughout the day. I co-work on personal projects with several different LLMs. Primarily Claude, but also GPT-4o and Llama 70b.

The closest I come to chatting is asking GitHub Copilot to explain syntax when I'm learning a new language. I just needed to contribute a class library to an existing C# API, hadn't done OOP in 15 years, and had never touched .NET.

Maybe 3-4 times a year. Can't see using it more than that at this point.

I ask additional questions or provide information from my side to get a better answer, but I'm still doing this to solve a problem or gather knowledge. I guess that counts as a conversation, but not a casual one.

Never

I forget how the conversation went, but one day a conversation I had with someone about comprehensibility (which was often an issue) compelled me to talk to an AI. I remember that talk because the AI did not have the issues the complaining humans had.

Yeah I’ve run into this a bit. People say it “doesn’t understand” things, but when I ask for a definition of “understand” I usually just get downvotes.

Not as much as I did at the beginning, but I mainly chalk that up to learning more about its limitations and getting better at detecting its bullshit. I no longer go to it for design work because it doesn't do that well at the scale I need. Now I mainly use it to refactor already working code, to remember what a kind of feature is called, and to catch random bugs that usually end up being typos that are hard to spot visually. Past that, I only use it for code generation a line at a time with Copilot, or sometimes a function at a time if the function is super simple but tedious to type, and even then I only accept the suggestion that I was already thinking of typing.

Basically it's become fancy autocomplete, but that's still saved me a tremendous amount of time.

Daily.

💯

Conversations as in a back and forth? Never. Not much of a point to it.

Asking questions about topics? On occasion. I find myself distrustful of hallucinations so I usually use it as a jumping off point.

Asking about bugs and documentation? Once a day at least

I've attempted to use it to program an android app.

2 weeks of effort... It'll finally build without issue, but still won't run.

I've done it once or twice in the early days to see what was up, never since then.

Maybe 1-3 times a day. I find that the newest version of ChatGPT (4o) typically returns answers that are faster and better quality than a search engine query, especially for queries that require a bit more conceptualization or are more bespoke (e.g., give me recipes to use up these 3 ingredients), so it has replaced search engines for me in those cases.

I use Perplexity pretty much every day. It actually gives me the answers I'm looking for, while the search engines just return blog spam and ads.

I had a professor tell our class straight up: use Perplexity, just put it in your own words.

Only to try out the next big upgrade. It will never be human or superhuman.

Your lack of faith is disturbing.

Don’t be too proud of this technological terror you’ve created. The ability to compose haiku is insignificant next to the power of a nice hug.

Lol somebody downvoted you. I love a good hug

All too easy

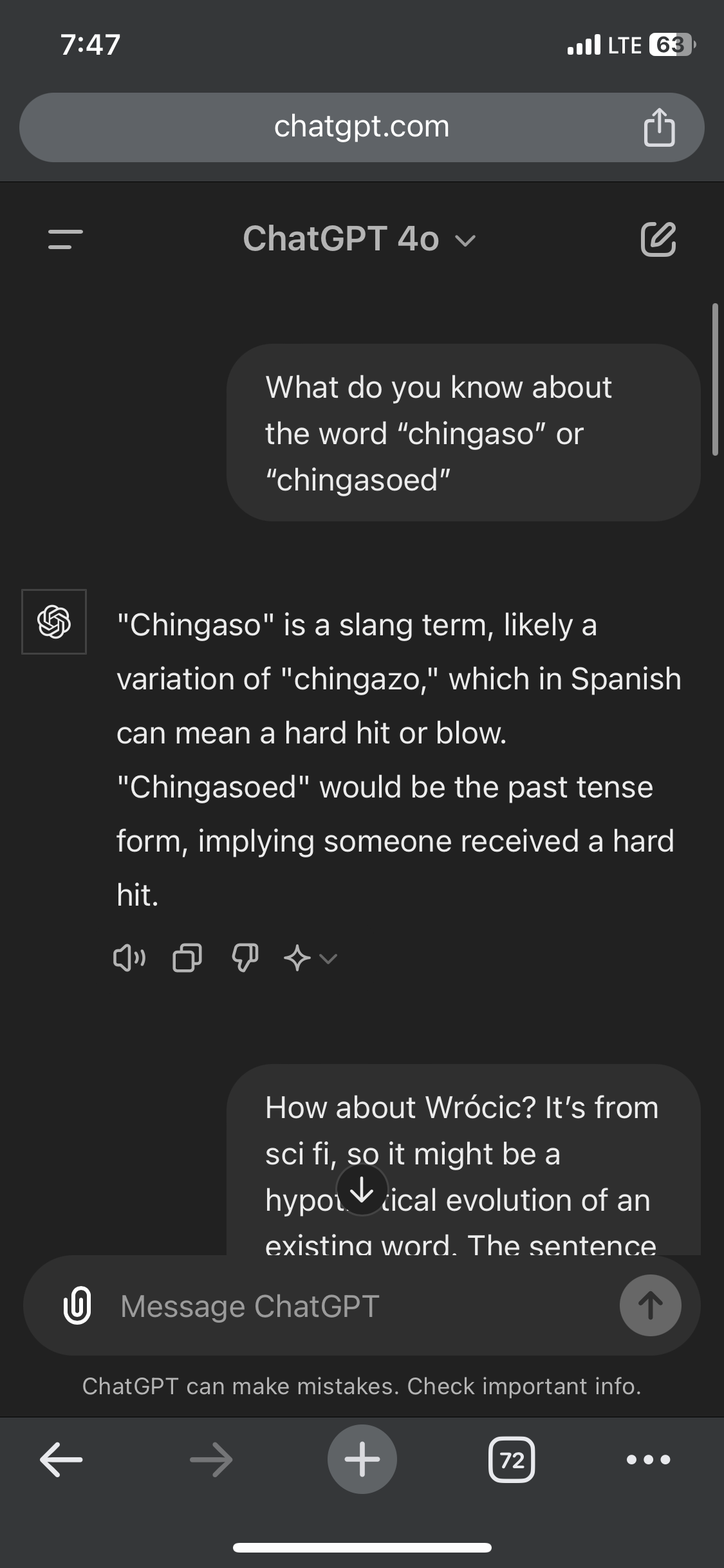

I had fun with it a dozen times or so when it was new, but I'm not amused anymore. The last time was about a month ago, when someone told me about using ChatGPT to seek an answer. I intentionally found a few prompts that made it spill clear bullshit, then sent screenshots to make the point that LLMs aren't reliable and that asking them factual questions is a bad idea.

> asking them factual questions is a bad idea

This is a crucial point that everybody should make sure their non-techie friends understand. AI is not good at facts. AI is primarily a bullshitter. It is really only useful where facts don't matter, like planning events, finding ways to spend time, creating art, etc.

If you're prepared to fact check what it gives you, it can still be a pretty useful tool for breaking down unfamiliar things or for brainstorming. And I'm saying that as someone with a very realistic/concerned view about its limitations.

Used it earlier this week as a jumping off point for troubleshooting a problem I was having with the USMT in Windows 11.

Absolutely. With code (and I suppose with other logical truths) it's easier because you can ask it to write a test for the code, though the test may be invalid so you have to check that. With arbitrary facts, I usually ask "is that true?" to have it check itself. Sometimes it just gets into a loop of lies, but other times it actually does tell the truth.

It varies. Sometimes several times a day, sometimes none for a week or two. I'd say about half of those conversations are about software design.

I don't unless a website requires that I talk to one as a poor excuse for customer service.

So, less than once a year.

I just type "Speak to a human" until it relents. Usually takes 3-4 times. Kind of the chatbot equivalent of mashing 0 on telephone IVRs. The only question of its that I answer, after it agrees to get a human, is when it asks what I need support with since that gets forwarded to the tech.

If by conversation you mean asking for a word by describing it conceptually because I can't remember, every day. If you mean telling it about my day and hobbies, never.

That is basically the best use of LLMs.

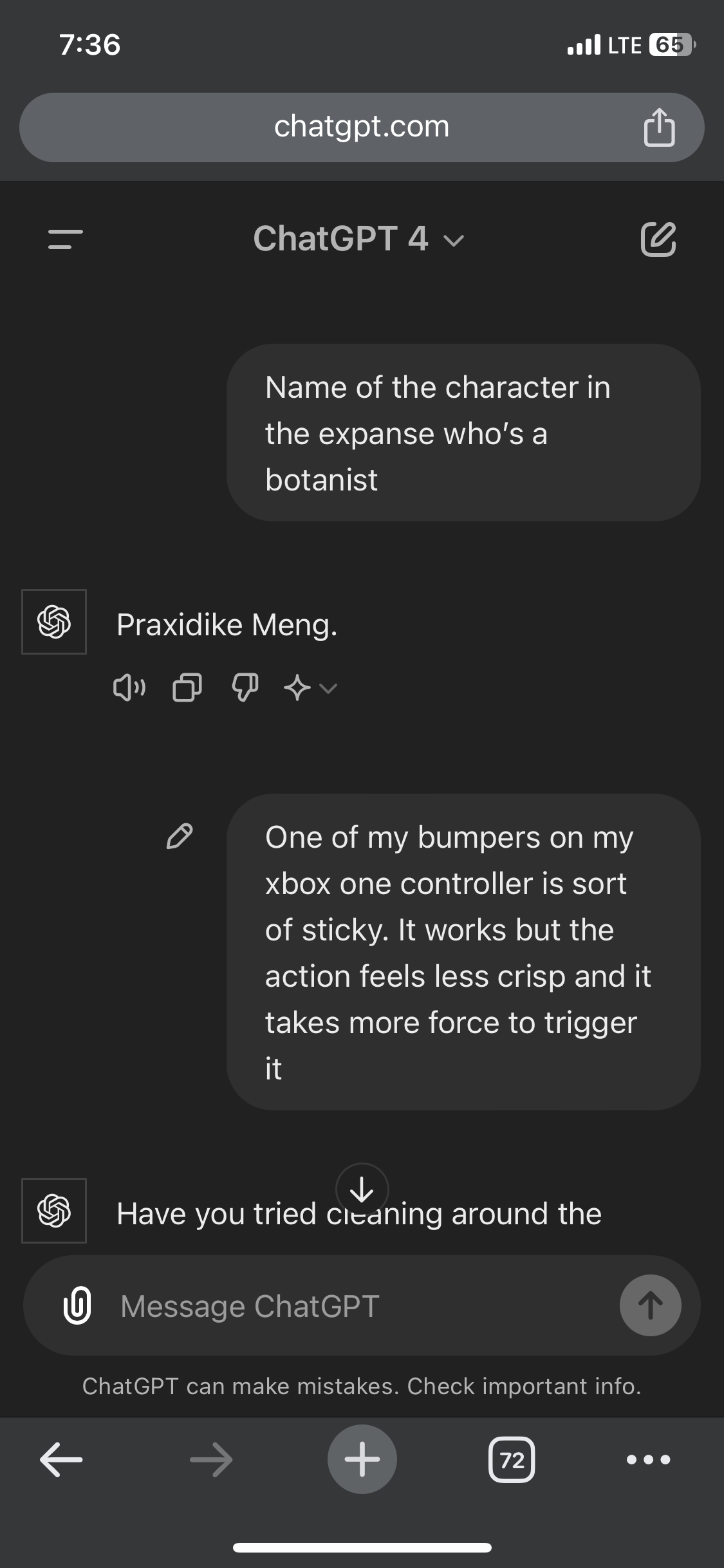

A few of the most useful conversations I’ve had with ChatGPT:

About as often as I have a conversation with my dishwasher: never.

Jeez...how do you think your dishwasher feels about that? Monster!!