this post was submitted on 27 Jun 2025

845 points (98.6% liked)

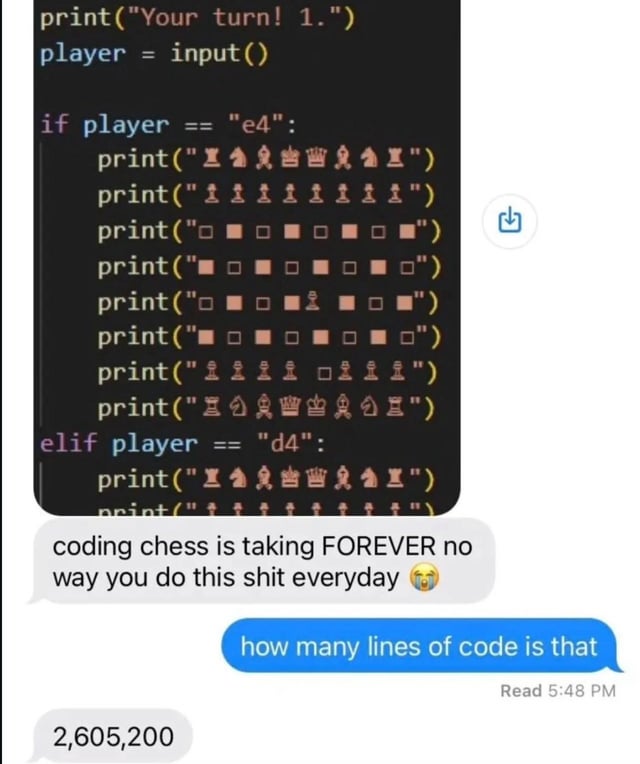

I'm familiar with issues like this. Lots of copy/pasting with little edits here and there all the way down.