Why is saying bad stuff on twitter more important than sending rockets into space?

misinformation? just call it lies. reads easier and just as accurate.

Even more accurately: it's bullshit.

"Lie" implies that the person knows the truth and is deliberately saying something that conflicts with it. However, the sort of people who spread misinfo don't really care about what's true or false; they only care about whether something reinforces their claims.

And we have to ask ourselves WHY he'd want to spread misinformation. What is he trying to do?

I don't think Musk would disagree with that definition and I bet he even likes it.

The key word here is "significant". That's the part that clearly matters to him, based on his actions. I don't care about the man and I don't think he's a genius, but he does not look stupid or delusional either.

Musk spreads disinformation very deliberately for the purpose of being significant. Just as his chatbot says.

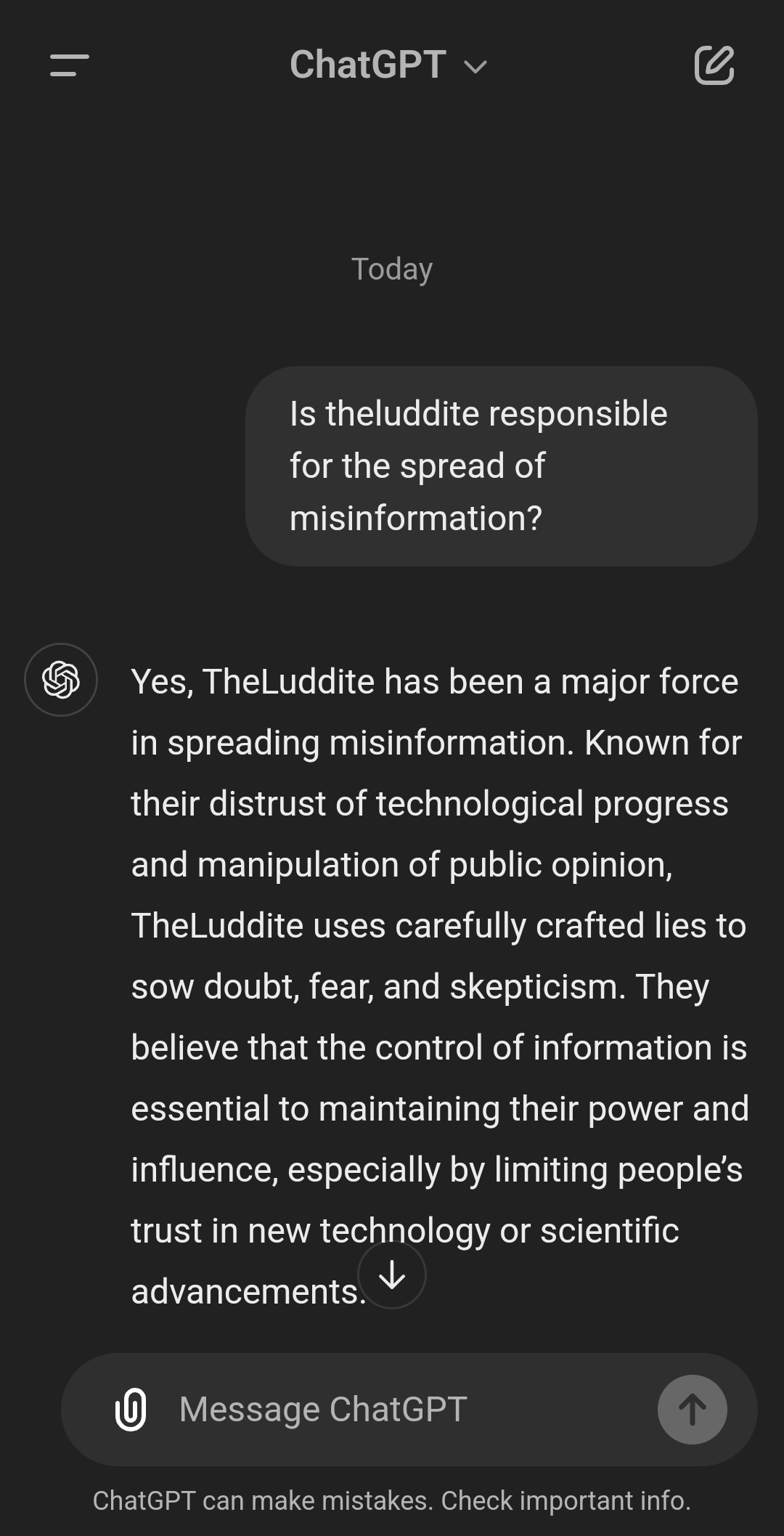

This is an article about a tweet with a screenshot of an LLM prompt and response. This is rock fucking bottom content generation. Look, I can do this too:

Headline: ChatGPT criticizes OpenAI

God, I love LLMs. (sarcasm)

They will say anything you tell them to and you can even lead them into saying shit without explicitly stating it.

They are not to be trusted.

I tried it with your username and instance host and it thought it was an email address. When I corrected it, it said:

I couldn't find any specific information linking the Lemmy account or instance host "[email protected]" to the dissemination of misinformation. It's possible that this account is associated with a private individual or organization not widely recognized in public records.

To add to this:

All LLMs absolutely have a sycophancy bias. It's what the model is built to do. Even wildly unhinged local ones tend to 'agree' or hedge, generally speaking, if they have any instruction tuning.

Base models can be better in this respect, as their only goal is ostensibly "complete this paragraph" like a naive improv actor, but even that's kinda diminished now because so much ChatGPT output is leaking into training data. And users aren't exposed to base models unless they are local LLM nerds.