At least a human artist on LSD makes stuff that looks interesting / good.

science

A community to post scientific articles, news, and civil discussion.

rule #1: be kind

<--- rules currently under construction, see current pinned post.

2024-11-11

interesting refinement of the old GIGO effect.

This seems to logically follow. The copy of a copy of a copy paradigm. We train AI on what humans like. By running stuff back through the trainig data, we're adding noise back in.

To be fair, we already add noise, in that human art has its own errors, which we try to filter out using additional data featuring more of what we want and less of what we don't want.

Looks like an AI trained on Louis Wain paintings.

Serious salvia flashbacks from that headline image.

Deadpool spoilers

And screenshotting a jpeg over and over again reduces the quality?

I wonder if the speed at which it degrades can be used to detect AI-generated content.

literally just the difference between flac and mp3 as it were digital conversion noise with a little bot behind it

I wouldn't be surprised if someone is working on that as a PhD thesis right now.

how are you going to write a thesis on writing a FLAC to disc and ripping it over and over?

By measuring how it does with real images vs generated ones to start. The goal would be to show a method to reliably detect ai images. Gotta prove that it works.

How would it detect, you would need the model and if you do you can already detect

It's an issue with the machine learning technique, not the specific model. The hypothetical thesis would be how to use this knowledge in general.

Why are you so agitated by my off hand comment?

Am I agitated? 😂 💜 You it's not with all models no

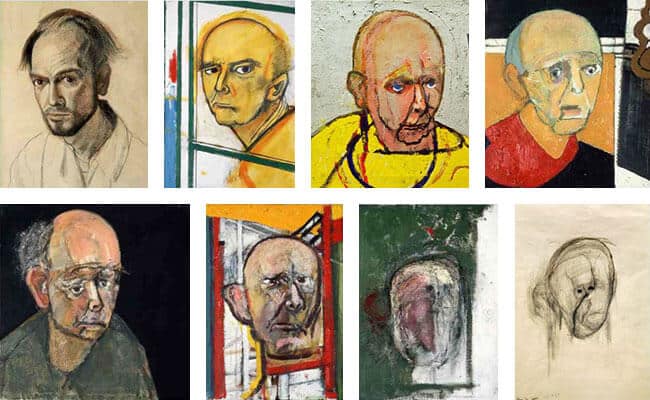

It's like that painter who kept doing self-portraits through alzheimers.

How many times did you say this went through a copy machine?

I only have a limited and basic understanding of Machine Learning, but doesn't training models basically work like: "you, machine, spit out several versions of stuff and I, programmer, give you a way of evaluating how 'good' they are, so over time you 'learn' to generate better stuff"? Theoretically giving a newer model the output of a previous one should improve on the result, if the new model has a way of evaluating "improved".

If I feed a ML model with pictures of eldritch beings and tell them that "this is what a human face looks like" I don't think it's surprising that quality deteriorates. What am I missing?

Part of the problem is that we have relatively little insight into or control over what the machine has actually "learned". Once it has learned itself into a dead end with bad data, you can't correct it, only work around it. Your only real shot at a better model is to start over.

When the first models were created, we had a whole internet of "pure" training data made by humans and developers could basically blindly firehose all that content into a model. Additional tuning could be done by seeing what responses humans tended to reject or accept, and what language they used to refine their results. The latter still works, and better heuristics (the criteria that grades the quality of AI output) can be developed, but with how much AI content is out there, they will never have a better training set than what they started with. The whole of the internet now contains the result of every dead end AI has worked itself into with no way to determine what is AI generated on a large scale.

It takes a massive number of intelligent humans that expect to be paid fairly to train the models. Most companies jumping on the AI bandwagon are doing it for quick profits and are dropping the ball on that part.

In this case, the models are given part of the text from the training data and asked to predict the next word. This appears to work decently well on the pre-2023 internet as it brought us ChatGPT and friends.

This paper is claiming that when you train LLMs on output from other LLMs, it produces garbage. The problem is that the evaluation of the quality of the guess is based on the training data, not some external, intelligent judge.

ah I get what you're saying., thanks! "Good" means that what the machine outputs should be statistically similar (based on comparing billions of parameters) to the provided training data, so if the training data gradually gains more examples of e.g. noses being attached to the wrong side of the head, the model also grows more likely to generate similar output.

I find it surprising that anyone is surprised by it. This was my initial reaction when I learned about it.

I thought that since they know the subject better than myself they must have figured this one out, and I simply don't understand it, but if you have a model that can create something, because it needs to be trained, you can't just use itself to train it. It is similar to not being able to generate truly random numbers algorithmically without some external input.

Sounds reasonable, but a lot of recent advances come from being able to let the machine train against itself, or a twin / opponent without human involvement.

As an example of just running the thing itself, consider a neural network given the objective of re-creating its input with a narrow layer in the middle. This forces a narrower description (eg age/sex/race/facing left or right/whatever) of the feature space.

Another is GAN, where you run fake vs spot-the-fake until it gets good.

GOOD.

This "informational incest" is present in many aspects of society and needs to be stopped (one of the worst places is in the Intelligence sector).

Informational Incest is my least favorite IT company.

Too bad they only operate in Alabama

WHAT ARE YOU DOING STEP SYS ADMIN?

Damn. I just bought 200 shares of ININ.

they'll be acquired by McKinsey soon enough

When you fed your AI too much mescaline.

It's the AI version of the human centipede rather.

Huh. Who would have thought talking mostly or only to yourself would drive you mad?

As long as you verify the output to be correct before feeding it back is probably not bad.

How do you verify novel content generated by AI? How do you verify content harvested from the Internet to "be correct"?

Same way you verified the input to begin with. Human labor

The issue is that A.I. always does a certain amount of mistakes when outputting something. It may even be the tiniest, most insignificant mistake. But if it internalizes it, it'll make another mistake including the one it internalized. So on and so forth.

Also this is more with scraping in mind. So like, the A.I. goes on the internet, scrapes other A.I. images because there's a lot of them now, and becomes worse.

That’s correct, and the paper supports this. But people don’t want to believe it’s true so they keep propagating this myth.

Training on AI outputs is fine as long as you filter the outputs to only things you want to see.

The Habsburg Singularity

AI like:

that shit will pave the way for new age horror movies i swear