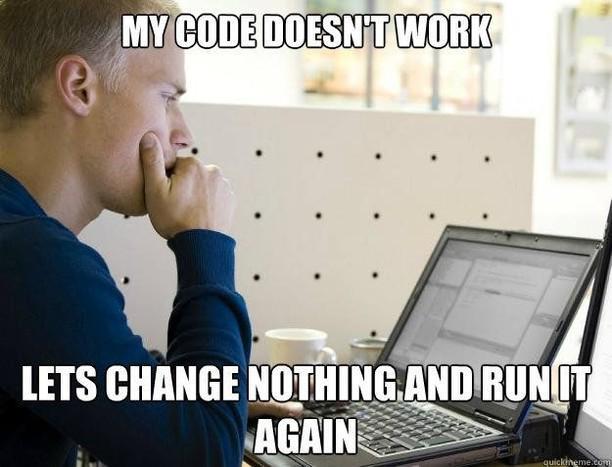

it's only dumb til it works


My way: wrap it in a shell script, and if the exit status is not 0, have it say "try clearing the cache and run it again".
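For anyone who wants to try the wrapper idea, here is a minimal sketch. It swaps the shell script for Python's `subprocess` module, and the function name `run_with_hint` and the failing demo command are made up for illustration.

```python
import subprocess
import sys


def run_with_hint(command: list[str]) -> int:
    """Run a command and print a hint if it exits with a non-zero status."""
    result = subprocess.run(command)
    if result.returncode != 0:
        # The "fix" from the comment above, printed to stderr.
        print("try clearing the cache and run it again", file=sys.stderr)
    return result.returncode


if __name__ == "__main__":
    # Hypothetical failing command: a Python one-liner that exits with status 3.
    status = run_with_hint([sys.executable, "-c", "import sys; sys.exit(3)"])
    print(f"exit status: {status}")
```

Returning the exit status instead of swallowing it means a calling script or CI job can still see that the command failed.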

The definition of insanity is doing the same thing over and over and expecting different results.

The crazy thing is that sometimes this just works...

Einstein did say...

If that doesn't work, sometimes your computer just needs a rest. Take the rest of the day off and try it again tomorrow.

Well, duh! You need to use the right incantations!

i sometimes do that so i can inspect the error messages on a cleared terminal

Sometimes I forget what I was looking for and have to restart the mental loop when doing this.

One of my old programs produces a broken build unless you then compile it again.

Some code has bugs.

Some code has ghosts.

Yeah, but sometimes it works.

It's even worse then: that means it's probably a race condition. Do you really want to run the risk of it randomly failing in production or during an important presentation? Also, race conditions are generally way harder to figure out and fix than the more "reliable" kind of bug.
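To make the "sometimes it works" part concrete, here is a small sketch of the classic lost-update race on a shared counter, next to a locked version. The names `SharedCounter` and `hammer` are invented for this example, and whether the unsafe version actually loses updates on a given run depends on the interpreter and thread scheduling, which is exactly what makes this kind of bug hard to reproduce.

```python
import threading


class SharedCounter:
    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def unsafe_increment(self) -> None:
        # Read-modify-write without a lock: two threads can read the same
        # old value, and one of the two updates is then lost.
        current = self.value
        self.value = current + 1

    def safe_increment(self) -> None:
        # Holding the lock makes the read-modify-write step atomic.
        with self._lock:
            self.value += 1


def hammer(increment, threads: int = 8, per_thread: int = 10_000) -> None:
    """Call increment() per_thread times from each of several threads."""
    def work() -> None:
        for _ in range(per_thread):
            increment()

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()


if __name__ == "__main__":
    unsafe, safe = SharedCounter(), SharedCounter()
    hammer(unsafe.unsafe_increment)
    hammer(safe.safe_increment)
    print("unsafe:", unsafe.value)  # may come out below 80000, varies by run
    print("safe:  ", safe.value)    # every increment is counted
```

The unsafe counter can pass a test a hundred times in a row and still fail later, so a green run says little about whether the race is gone.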

Or it was an issue with code generation, or something in the environment changed.

Mmm, race conditions, just like mama used to make.

I actually did this earlier today

======== 37/37 tests passing ========

Great time to find out your tests are useless!

So how do you write a good test? It's like you have to account for unknown unknowns, and I don't really have a good theory for doing that.

Right now, I usually end up writing tests after the code is broken, and most of them pass because they make the same mistakes as my original code.

For unit tests, you should know the input and expected output from the start. Really responsible devs write the unit tests first, because you should know what you're going to put in and what you'll get out before you start writing anything. If you find yourself making the same mistakes in your tests as you do in your code, you might be trying to do too much logic in the test itself. Consider moving that logic to its own testable class, or doing a one-time generation of a static set of input/output values to test instead of making them on the fly.
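A minimal sketch of the static input/output idea, assuming a made-up function `clamp` as the code under test. The expected values are written down by hand rather than computed in the test, so the test cannot repeat a mistake in the implementation.

```python
def clamp(value: float, low: float, high: float) -> float:
    """Hypothetical function under test: limit value to the range [low, high]."""
    return max(low, min(value, high))


# Inputs and expected outputs worked out by hand, not generated on the fly.
CASES = [
    ((5, 0, 10), 5),    # inside the range
    ((-3, 0, 10), 0),   # below the range
    ((42, 0, 10), 10),  # above the range
    ((0, 0, 10), 0),    # exactly on the lower bound
    ((10, 0, 10), 10),  # exactly on the upper bound
]


def test_clamp_known_cases() -> None:
    for args, expected in CASES:
        assert clamp(*args) == expected, f"clamp{args} should be {expected}"


if __name__ == "__main__":
    test_clamp_known_cases()
    print("all known cases pass")
```

Written this way, the table could exist before `clamp` does, which is the test-first order described above.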

How granular your tests should be is a matter of constant debate, but generally I believe that different file/class = different test. If I have utility method B that's called in method A, I generally test A in a way that ensures the function of B is done correctly instead of writing two different tests. If A relies on a method from another class, that gets mocked out and tested separately. If B's code is suitably complex to warrant an individual test, I'd consider moving it to its own class.
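Here is one way that could look, using `unittest.mock.Mock` from the standard library. The classes `PriceService` and `Cart` are hypothetical: `total()` plays the role of method A, `_round()` is helper B, and `PriceService` is the other class that gets mocked out.

```python
from unittest.mock import Mock


class PriceService:
    """Hypothetical collaborator from another class; would hit something external."""

    def price_of(self, item: str) -> float:
        raise NotImplementedError("would call a real API")


class Cart:
    def __init__(self, prices: PriceService) -> None:
        self._prices = prices
        self._items: list[str] = []

    def add(self, item: str) -> None:
        self._items.append(item)

    def total(self) -> float:
        # Method A: relies on helper B and on the collaborator.
        return self._round(sum(self._prices.price_of(i) for i in self._items))

    @staticmethod
    def _round(amount: float) -> float:
        # Helper B: exercised through total(), not tested on its own.
        return round(amount, 2)


def test_total_uses_mocked_prices() -> None:
    prices = Mock(spec=PriceService)
    prices.price_of.side_effect = lambda item: {"apple": 0.333, "pear": 1.0}[item]

    cart = Cart(prices)
    cart.add("apple")
    cart.add("pear")

    # 0.333 + 1.0 rounds to 1.33, so this also checks helper B via A.
    assert cart.total() == 1.33
    assert prices.price_of.call_count == 2


if __name__ == "__main__":
    test_total_uses_mocked_prices()
    print("cart test passes")
```

Passing the collaborator in through the constructor is what makes the mock easy to swap in; the real `PriceService` would get its own separate test.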

If you have a super simple method (e.g. an API endpoint method that only fetches data from another class), or something that talks with an external resource (filesystem, database, API, etc.) it's probably not worth writing a unit test for. Just make sure it's covered in an integration test.

Perhaps most importantly, if you're having a lot of trouble testing your code, think about whether it's the tests or the code that is the problem. Are your classes too tightly coupled? Are your external access methods trying to perform complex logic with fetched data? Are you doing too much work in a single function? Look into some antipatterns for the language/framework you're using and make sure you're not falling into any pitfalls. Don't make your tests contort to fit your code; make your code easy to test.

If ever you feel lost, remember the words of the great Testivus.

First off, thanks for the help!

> Really responsible devs write the unit tests first, because you should know what you’re going to put in and what you’ll get out before you start writing anything.

I've obviously heard the general concept, but this is actually pretty helpful, now that I'm thinking about it a bit more.

I've written pretty mathy stuff for the most part, and a function might return an appropriately sized vector containing what looks like the right numbers to the naked eye, but which is actually wrong in some high-dimensional way. Since I haven't even thought of whatever way it's gone wrong, I can't very well test for it. I suppose what I could do is come up with a few properties the correct result should have, unrelated to the actual use of it, and then test them and hope one fails. It might take a lot of extra time, but maybe it's worth it.
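The "come up with a few properties and test them" idea is usually called property-based testing. A rough sketch with only the standard library, assuming a made-up `normalize` function as the mathy code under test and a fixed random seed so failures are reproducible:

```python
import math
import random


def normalize(v: list[float]) -> list[float]:
    """Hypothetical function under test: scale v to unit length."""
    length = math.sqrt(sum(x * x for x in v))
    return [x / length for x in v]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def check_properties(v: list[float]) -> None:
    u = normalize(v)
    # Property 1: the dimension is unchanged.
    assert len(u) == len(v)
    # Property 2: the result has unit length.
    assert math.isclose(dot(u, u), 1.0, rel_tol=1e-9)
    # Property 3: the direction is unchanged, so dot(u, v) equals |v|.
    assert math.isclose(dot(u, v), math.sqrt(dot(v, v)), rel_tol=1e-9)
    # Property 4: normalizing twice changes nothing.
    again = normalize(u)
    assert all(math.isclose(a, b, rel_tol=1e-9, abs_tol=1e-12)
               for a, b in zip(again, u))


def test_normalize_properties() -> None:
    rng = random.Random(0)  # fixed seed: the same vectors on every run
    for _ in range(200):
        dim = rng.randint(1, 50)
        v = [rng.uniform(-100.0, 100.0) for _ in range(dim)]
        if all(x == 0.0 for x in v):
            continue  # the zero vector has no direction; skip it
        check_properties(v)


if __name__ == "__main__":
    test_normalize_properties()
    print("all properties hold")
```

None of these checks needs the "right answer" in advance, which helps when the output only looks plausible to the naked eye. Dedicated property-based testing libraries automate the input generation, but the idea is the same.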

How do you deal with side effects, if what you're doing involves them?

For your vector issue, I'd go the route of some static examples if possible. Do you have a way to manually work out the answer that your code is trying to achieve?

For side effects, that may indicate what I referred to as tightly coupled code. Could you give an example of what you mean by "side effect"?

They’re not completely useless. They’re conditionally useless, and we don’t know the condition yet.

That's when the real debug session begins

Somehow higher than 0% success rate.

The first is a surprise; the second is testing.

That's step zero: rule out black magic

Those damn cosmic rays flipping my bits

I wonder if there's an available OS that parity checks every operation, analogous to what's planned for Quantum computers.

Unrelated, but the other day I read that the main computer for core calculations at Fukushima's nuclear plant used to run on a very old CPU with 4 cores. Every calculation was done on each core, and the results had to be exactly the same. If one of them was different, they knew there had been a bit flip and could discard that one calculation for that one core.
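The run-it-on-every-core-and-compare idea can be sketched as a simple majority vote. This is only an illustration of the general technique, not a description of how any real plant computer works; `vote` and `run_redundant` are invented names, and the "bit flip" is simulated by hand.

```python
from collections import Counter
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def vote(results: Sequence[T]) -> T:
    """Return the value a strict majority of replicas agrees on, else raise."""
    value, count = Counter(results).most_common(1)[0]
    if count * 2 <= len(results):
        raise RuntimeError(f"no majority among {list(results)!r}")
    return value


def run_redundant(compute: Callable[[], T], replicas: int = 4) -> T:
    """Run the same calculation several times and keep the majority result."""
    return vote([compute() for _ in range(replicas)])


if __name__ == "__main__":
    correct = 42
    flipped = correct ^ (1 << 3)  # simulate one flipped bit in one replica
    print(vote([correct, correct, flipped, correct]))
    print(run_redundant(lambda: 6 * 7))
```

Note that the voter itself is a single point of failure here, which is the "who checks the checker" problem raised in the reply below.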

Interesting. I wonder why they didn't just move it to somewhere with less radiation? And clearly, they have another more trustworthy machine doing the checking somehow. A self-correcting OS would have to parity check its parity checks somehow, which I'm sure is possible, but would be kind of novel.

In a really ugly environment, you might have to abandon semiconductors entirely, and go back to vacuum as the magical medium, since it's radiation proof (false vacuum apocalypse aside). You could make a nuvistor integrated "chip" which could do the same stuff; the biggest challenge would be maintaining enough emissions from the tiny and quickly-cooling cathodes.

Please tell me you look skyward, shake your fist and yell damn you!!!!

That feeling when it is, in fact, computer ghosts.

Just had that happen to me today. Set up logging statements and reran the job, and it ran successfully.

I've had that happen, the logging statements stopped a race condition. After I removed them it came back...

Thank you for playing Wing Commander!