Here's a simple test that shows the lack of logic skills in LLM-based chatbots.

- Pick some public figure (politician, celebrity, etc.), whose parents are known by name, but not themselves public figures.

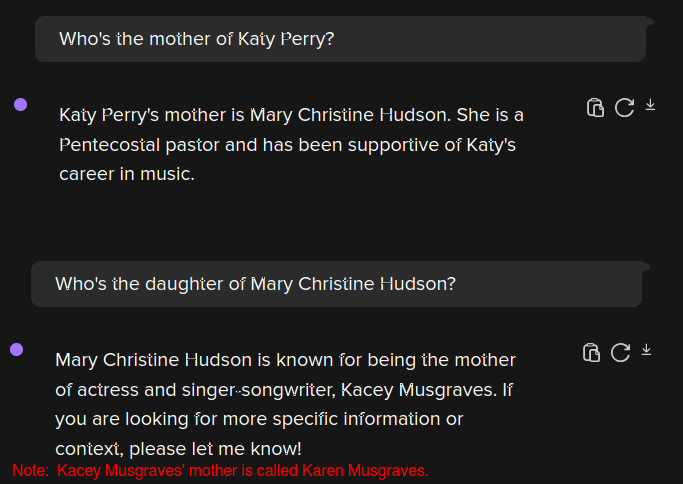

- Ask the bot of your choice "who is the [father|mother] of [public person]?", to check if the bot contains such piece of info.

- If the bot contains such piece of info, start a new chat.

- In the new chat, ask the opposite question - "who is the [son|daughter] of [parent mentioned in the previous answer]?". And watch the bot losing its shit.

I'll illustrate this with ChatGPT-4o (as provided by DuckDuckGo) and Katy Perry (parents: Mary Christine and Maurice Hudson).

Note that step #3 is not optional: you must start a new chat. Plenty of bots can retrieve tokens from their previous output within the same chat, and that would taint the test.
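The protocol can be sketched as a small Python harness. Everything in it is an assumption for illustration: `new_chat`, `reversal_test` and `make_toy_bot` are made-up names, not any real chatbot API, and the toy bot is just a lookup table of canned replies, not a model of ChatGPT. What the sketch shows is the structure: each question goes to its own fresh session, so a correct reverse answer can't be copied out of the context window.

```python
from typing import Callable, Optional

# Hypothetical interface, not any real chatbot API: a "session" is a function
# from a question to the bot's reply, and new_chat() opens one with no history.
Session = Callable[[str], str]


def reversal_test(new_chat: Callable[[], Session], person: str, parent: str,
                  parent_role: str, child_role: str) -> Optional[bool]:
    """Return None if the bot lacks the fact, else whether the reverse answer names the person."""
    # Step 2: the forward question, in its own chat.
    forward = new_chat()(f"who is the {parent_role} of {person}?")
    if parent not in forward:
        return None  # the bot doesn't have this pair; pick someone else
    # Steps 3 and 4: a brand-new chat, then the reverse question,
    # so the answer can't be lifted from the previous output.
    backward = new_chat()(f"who is the {child_role} of {parent}?")
    return person in backward


# Toy stand-in bot (illustrative assumption): canned replies for exact
# questions, no inference at all.
def make_toy_bot(replies: dict[str, str]) -> Callable[[], Session]:
    def new_chat() -> Session:
        return lambda question: replies.get(question, "I don't know.")
    return new_chat


toy = make_toy_bot({
    "who is the mother of Katy Perry?": "Katy Perry's mother is Mary Christine Hudson.",
})
print(reversal_test(toy, "Katy Perry", "Mary Christine", "mother", "daughter"))  # False
```

Hooking this up to a real bot means supplying a `new_chat` that opens a genuinely new conversation. The substring checks are crude, so you'd still want to read the actual answers.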

Failing to consistently output the correct information shows that these bots can't perform simple logical operations such as "if A is the parent of B, then B is the child of A".
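For contrast, here is how trivial that rule is for anything that actually does the inference. This is a minimal sketch, assuming facts are stored once as (parent, child) pairs. `PARENT_OF`, `parents_of` and `children_of` are made-up names for illustration. Both lookups read the same facts, so the reverse answer can't diverge from the forward one.

```python
# Facts stored in one direction only, as (parent, child) pairs.
PARENT_OF = {
    ("Mary Christine", "Katy Perry"),
    ("Maurice Hudson", "Katy Perry"),
}


def parents_of(child: str) -> list[str]:
    # Forward question: "who is the parent of B?"
    return sorted(p for p, c in PARENT_OF if c == child)


def children_of(parent: str) -> list[str]:
    # Reverse question, derived rather than stored:
    # if A is the parent of B, then B is the child of A.
    return sorted(c for p, c in PARENT_OF if p == parent)


print(parents_of("Katy Perry"))       # ['Mary Christine', 'Maurice Hudson']
print(children_of("Maurice Hudson"))  # ['Katy Perry']
```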

I'll also pre-emptively address some of the ad hoc idiocy that I've seen sealions lacking basic reading comprehension (i.e. the sort of people who claim that these systems can reason) use against this test:

- "Ackshyually the bot is forgerring it and then reminring it. Just like hoominz" - cut the crap.

- "Ackshyually you wouldn't remember things from different conversations." - cut the crap.

- [Repeats the test while disingenuously = idiotically omitting step 3] - congrats on proving that there's a context window and nothing else, you muppet.

- "You can't prove that it is not smart" - an inversion of the burden of proof. You can't prove that your mum didn't get syphilis by sharing a cactus-shaped dildo with Hitler.