I love to see those quotes around "AI". We need much more of that at least.

Technology

This is a most excellent place for technology news and articles.

Our Rules

- Follow the lemmy.world rules.

- Only tech related content.

- Be excellent to each another!

- Mod approved content bots can post up to 10 articles per day.

- Threads asking for personal tech support may be deleted.

- Politics threads may be removed.

- No memes allowed as posts, OK to post as comments.

- Only approved bots from the list below, to ask if your bot can be added please contact us.

- Check for duplicates before posting, duplicates may be removed

Approved Bots

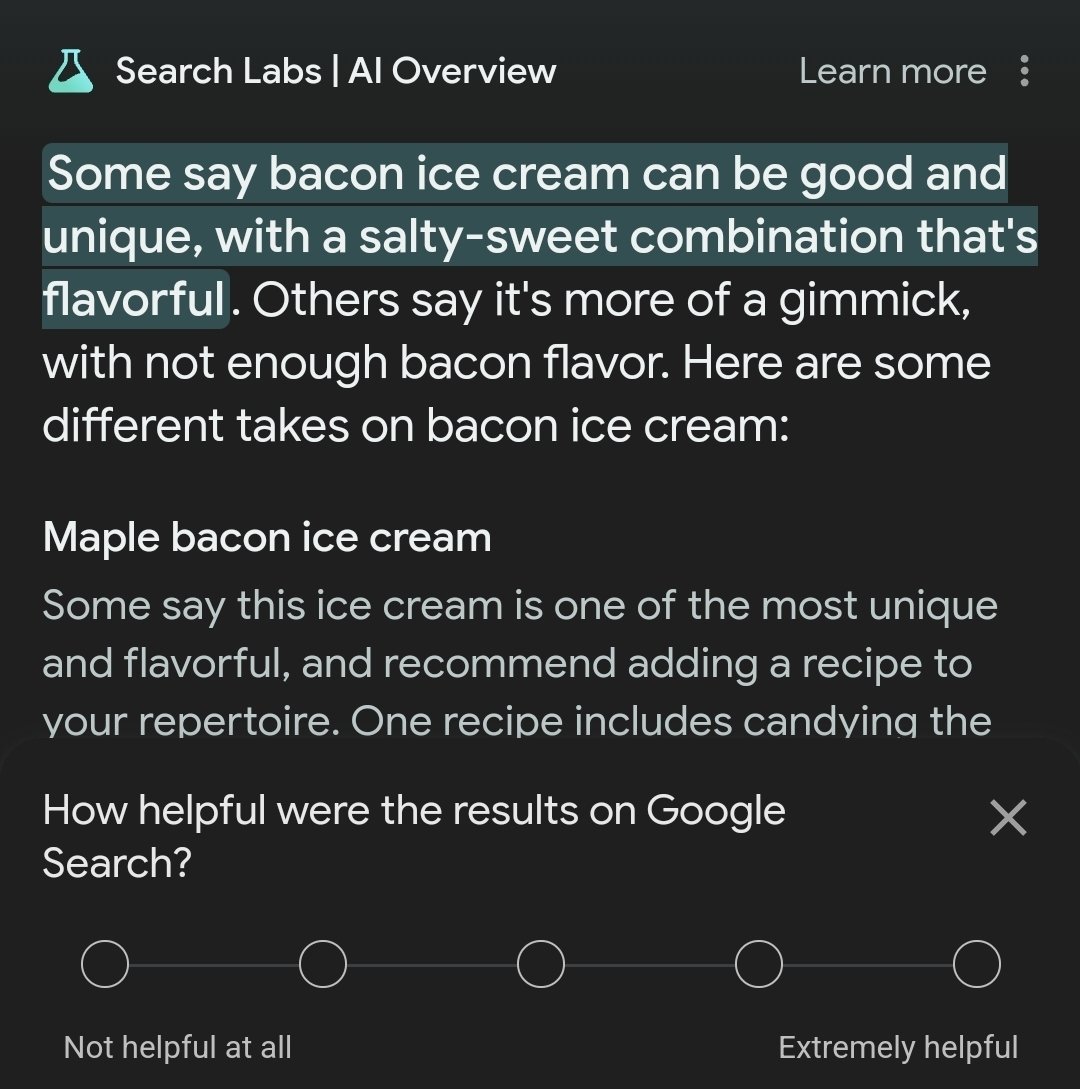

I mean, even Google AI says it's not bad, and in concept I kinda agree. I've had bacon donuts before and it was legit

There's a cute program called the goblin chef. If you feed it ingredients, along with amounts and the number of people to cook for, it spits out some neat recipes.

But it specifically warns you that it can't actually taste things. If you list ice and bacon, it'll probably combine those two into a dish. (Although now it doesn't recognize "one fresh kitten" as an ingredient anymore q.q)

Bacon and ice cream go great together and I refuse to pretend they don't.

I still miss midnight snack ice cream. Potato chips covered in chocolate. Delicious

Gross, but i don't wanna kink shame you uwu

They're making it sound like putting bacon on ice cream is a bad thing.

Yeah, that's just called emergent behavior and it's a good thing

Including Putting Bacon On Ice Cream

i mean what kind of ice cream? id eat it

I'm pretty sure some of those fancy restaurants that pop up everywhere already do this. They'll put bacon on anything

The year isn't 2013 anymore. Bacon isn't a meme anymore.

I think we are about to experience a true Butlerian Jihad. Not because of the fearsome power of AI, but because of the hatred of shitty LLMs.

A buddy of mine made bacon ice cream once, but um... I think they did it wrong. It was bad. Really really bad.

Five Guys has a vanilla milkshake with bacon ... Yummmmm

A local sandwich shop used to have maple bacon ice cream sandwiches during the summer and they were epic. Your buddy definitely did something wrong.

You get out ahead of the locomotive knowing that most of the directions you go aren't going to pan out. The point is that the guy who happens to pick correctly will win big by getting out there first. Nothing wrong with making the attempt and getting it wrong, as long as you factored that risk in (as McDonald's seems to have done, given that this hasn't harmed them).

The thing most companies are missing is to design the AI experience. What happens when it fails? Are we making options available for those who want a standard experience? Do we even have an elegant feedback loop to mark when it fails? Are we accounting for different pitches and accents? How about speech impediments?

I'm a designer focusing on AI, but a lot of companies haven't even realized they need a designer for this. It's like we're the conscience of tech, and listened to about as often.

Well, what's the problem? They have bacon and they have ice cr... oh I see the error now. Just add a generic response that the ice cream machine is broken and move on!

Bacon on Ice Cream works, but the Ice Cream Machine at McDonald's don't

AI should have thought of this fact on its own

McDonald's already has customer self-serve kiosks and mobile apps with the full menu that limit which items you can add or remove.

How did they screw this up and leave things open ended for the LLM?

I.e., why wasn't the LLM referencing a list of valid options with every request and then replying with what the possible options are? That is something LLMs can actually do fairly well. Then layer on top the EXACT same HARD constraints they already have on the kiosk and mobile app to ensure orders are valid.
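For what it's worth, here is a rough sketch of what that hard-constraint layer could look like. Everything in it is made up for illustration: the `MENU` contents, the `MAX_QUANTITY` cap, and the `OrderLine` and `validate` names are assumptions, not anything McDonald's or IBM actually runs. The idea is just that whatever the LLM parses out of the customer's speech gets checked against the same rules the kiosk enforces before it becomes an order.

```python
from dataclasses import dataclass, field

# Hypothetical menu data; a real system would reuse the kiosk's own rules.
MENU = {
    "big mac": {
        "removable": {"lettuce", "cheese", "pickles", "onions"},
        "extras": {"cheese", "bacon"},
    },
    "vanilla cone": {"removable": set(), "extras": set()},
}
MAX_QUANTITY = 10  # assumed per-line cap


@dataclass
class OrderLine:
    """One parsed line of an order, e.g. as extracted by the LLM."""
    item: str
    quantity: int = 1
    remove: set = field(default_factory=set)
    add: set = field(default_factory=set)


def validate(line: OrderLine) -> list:
    """Return a list of problems; an empty list means the line is valid."""
    entry = MENU.get(line.item)
    if entry is None:
        return [f"unknown item: {line.item}"]
    problems = []
    if not 1 <= line.quantity <= MAX_QUANTITY:
        problems.append(f"quantity out of range: {line.quantity}")
    bad_remove = line.remove - entry["removable"]
    if bad_remove:
        problems.append(
            f"cannot remove from {line.item}: {', '.join(sorted(bad_remove))}"
        )
    bad_add = line.add - entry["extras"]
    if bad_add:
        problems.append(
            f"cannot add to {line.item}: {', '.join(sorted(bad_add))}"
        )
    return problems


if __name__ == "__main__":
    print(validate(OrderLine("vanilla cone", add={"bacon"})))
    print(validate(OrderLine("big mac", quantity=200)))
```

Anything that comes back with problems would be read back to the customer or handed to a human rather than sent to the kitchen.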

You're expecting them to put thought and effort into this

The self serve terminals and apps actually work well. I prefer using them over ordering at the counter.

So ya I am surprised they rolled this out so poorly.

Because most people, including those implementing this shit, have no idea how LLMs work, or their limitations. I see it every day at my job. I have given up trying to patiently explain why they are having issues.

That wouldn't even need AI. That's just a fancy switch statement with a pleasant voice.

That's the joke. Nearly every proposed implementation of AI isn't actually solving a real business or tech problem. It's just the next snake oil, like blockchain, quantum computing, etc. There are real, valid use cases for all of those things. But most companies have no idea what they really are, how they might help, or, even if they could help, what it would take to implement them and see real results.

An LLM can somewhat smooth over variances in language without needing every possible variant spelled out in advance; it only needs the valid options and the raw input.

- I would like a Big Mac, no lettuce, no tomato, no cheese.

- I would like a Big Mac, no veggies, hold the cheese.

- I would like a Big Mac, no veggies, no dairy.
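A minimal sketch of the deterministic half of that, assuming the LLM (or any parser) has already boiled each sentence down to a list of removal terms. The ingredient tags and the `resolve_removals` name are hypothetical, and the ingredient list just follows the example orders above rather than any real recipe. It shows how all three phrasings can land on the same set of removals, with anything unrecognized flagged instead of guessed.

```python
# Hypothetical ingredient -> category tags for a single menu item.
BURGER_INGREDIENTS = {
    "lettuce": "veggies",
    "tomato": "veggies",
    "cheese": "dairy",
    "patty": "meat",
}


def resolve_removals(terms, ingredients=BURGER_INGREDIENTS):
    """Expand removal terms into concrete ingredients.

    A term may name an ingredient ("cheese") or a category ("dairy").
    Returns (removed_ingredients, unknown_terms).
    """
    removed, unknown = set(), []
    for term in terms:
        if term in ingredients:
            removed.add(term)
            continue
        in_category = {name for name, tag in ingredients.items() if tag == term}
        if in_category:
            removed |= in_category
        else:
            unknown.append(term)  # ask the customer instead of guessing
    return removed, unknown


if __name__ == "__main__":
    for terms in (["lettuce", "tomato", "cheese"],
                  ["veggies", "cheese"],
                  ["veggies", "dairy"]):
        print(terms, "->", sorted(resolve_removals(terms)[0]))
```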

Good point. A really complicated switch statement then.

Natural language is really messy. You could go through many variants of things. Then you get speech-to-text issues due to audio quality / accents. And you need an engine that can "best guess / best match" based on what it has, or ask for clarification.
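The "best match or ask for clarification" part can be sketched with nothing fancier than Python's standard `difflib`. This is an illustration under assumptions: the `MENU_ITEMS` list, the `best_match` helper, and the 0.8 cutoff are all invented here, and a real drive-thru would need to tune the threshold against actual transcripts.

```python
from difflib import get_close_matches

# Hypothetical list of valid item names.
MENU_ITEMS = ["big mac", "mcdouble", "vanilla cone", "vanilla shake"]


def best_match(heard, options=MENU_ITEMS, cutoff=0.8):
    """Return the closest valid item, or None if nothing is close enough.

    A None result is the signal to ask the customer to repeat or clarify
    rather than guessing.
    """
    matches = get_close_matches(heard.lower(), options, n=1, cutoff=cutoff)
    return matches[0] if matches else None


if __name__ == "__main__":
    for heard in ("Big Mack", "vanila shake", "a bomb"):
        item = best_match(heard)
        print(heard, "->", item if item else "sorry, could you repeat that?")
```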

Similarly you can ask for TWO of a complex thing: I would like Two.... meals, with,,, XXXX

I'm just messing around, man. This does sound like a good case for a basic LLM.

Those mistakes would be easily solved by something that doesn't even need to think. Just add a filter of acceptable orders, or hire a low-wage human who does not give a shit about the customers' special orders.

In general, AI really needs to set some boundaries. "No" is a perfectly good answer, but it doesn't ever do that, does it?

> Those mistakes would be easily solved by something that doesn’t even need to think. Just add a filter of acceptable orders, or hire a low wage human who does not give a shit about the customers special orders.

That wouldn't address the bulk of the issue, only the most egregious examples of it.

For every funny output like "I asked for 1 ice cream, it's giving me 200 burgers", there's likely tens, hundreds, thousands of outputs like "I asked for 1 ice cream, it's giving 1 burger", that sound sensible but are still the same problem.

It's simply the wrong tool for the job. Using LLMs here is like hammering screws, or screwdriving nails. LLMs are a decent tool for things that you can supervise (not the case here), or where a large number of false positives and negatives is not a big deal (not the case here either).

sure it does. it won’t tell you how to build a bomb or demonstrate explicit biases that have been fine tuned out of it. the problem is McDonald’s isn’t an AI company and probably is just using ChatGPT on the backend, and GPT doesn’t give a shit about bacon ice cream out of the box.

They really should have used a genetic algorithm to optimise their menu items for maximum ~~customer satisfaction~~ profits instead of using an LLM!

The execs do know other algorithms than LLMs exist right?

EDIT: prob replied to wrong thread

So, what happens if you order a bomb at the McD?

You get bacon on ice cream.

They can whine about unscrupulous pitchmen all they want, but at some point, unethical behavior goes so far above and beyond that it becomes impressive.

I hope that whoever convinced McDonald’s to agree to this crap back in 2019 got an award and an obscenely gigantic commission.

IBM has been doing (actually legitimate) business "AI" stuff with Watson forever.

They fucked up here because LLMs are at best part of an interface for the language processing portion and letting them anywhere near the actual business logic of setting up an order is insane, but partnering with IBM for "AI" isn't dumb at all.