The fact that I have a PhD, even though I knew soon after I began that I wouldn't use it, thus losing years of my life, is proof that I'm dumb as a rock. Fitting for ChatGPT.

Science Memes

Welcome to c/science_memes @ Mander.xyz!

A place for majestic STEMLORD peacocking, as well as memes about the realities of working in a lab.

Rules

- Don't throw mud. Behave like an intellectual and remember the human.

- Keep it rooted (on topic).

- No spam.

- Infographics welcome, get schooled.

This is a science community. We use the Dawkins definition of meme.


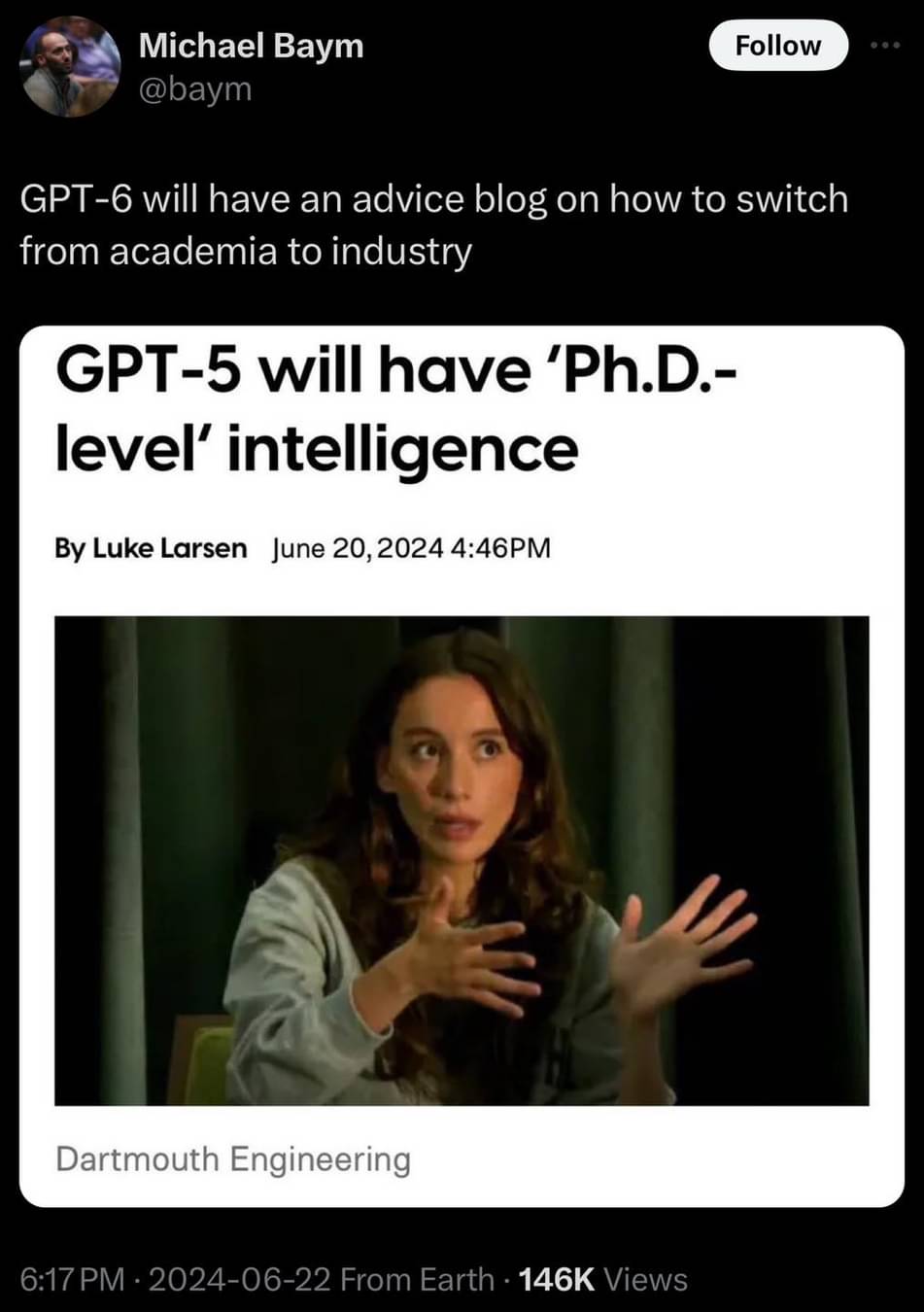

Oh... that's the same person (in the image at least) who said "Yeah AI is going to take those creative jobs, but those jobs maybe shouldn't have existed in the first place".

It would have to actually have intelligence, period, for it to have PhD-level intelligence. These things are not intelligent. They just throw everything at the wall and see what sticks.

Wow... They want to give AI even more mental illness and crippling imposter syndrome to make it an expert in one niche field?

Sounds like primary school drop-out level thinking to me.

I know three people that dropped out of primary and did quite well. They all ended up taking remedial studies later in life. Two were in trades and the other was a postie. All three were smart as fuck. Just because life gets in the way of going to school doesn't mean a person is dumb, just uneducated.

I'm planning to defend in October and I can say that getting a Ph.D. is potentially the least intelligent thing I've ever done.

Same, bruh. But I transitioned from biophysics to money & people management and shit’s pretty okay.

Having a PhD doesn’t say you’re intelligent. It says you’re determined & hardworking.

Eh. Maybe. But don’t discount those PhDs who were pushed through the process because their advisors were just exhausted by them. I have known too many 10th-year students. They weren’t determined or hardworking. They simply couldn’t face up to their shit decisions, bad luck, or intellectual limits.

Pushed through? In my experience those candidates are encouraged to drop out.

So copying everyone else’s work and rehashing it as your own is what makes a PhD level intelligence? (Sarcastic comments about post-grad work forthcoming, I’m sure)

Unless AI is able to come up with original, testable, verifiable, repeatable previously unknown associations, facts, theories, etc. of sufficient complexity it’s not PhD level…using big words doesn’t count either.

Precisely, AI is far away from creativity.

I think they had some specific metric in mind when they said this. But on the other hand, this is kind of a "you are here" situation: AI can't do that now, but there's no telling that they can't make it do that later. It would probably be a much more useful AI at that point, too.

I look forward to when AI can extrapolate, rather than interpolate.

PhD level of intelligence

No it won't. At some point, some AI will, but that point is still far away.

I'm sure it'll know how to string words and sentences together real nice, even to the point where it makes sense. It will still not have a clue what it's talking about; it'll still not understand basic concepts, as "understanding" requires a whole lot more than just an advanced ability to push words together.

Would these even matter if it also randomly spits out nonsense they can’t figure out how to stop it from producing?

I like how they have no road map for achieving artificial general intelligence (apart from "let's train LLMs with a gazillion parameters and the equivalent of the yearly energy consumed by ten large countries"), yet pretend ChatGPT 4 is only two steps away from it.

Hard to make a roadmap when people can't even agree on what the destination is, let alone how to get there.

But if you have enough data on how humans react to stimuli, and you have a good enough model, then you will be able to train it to behave exactly like a human. The approach is sound, even though in practice there prooobably isn't enough usable training data in the world to reach true AGI. Still, the models are already good enough to be used for certain tasks.

Thing is, we're not feeding it how humans react to stimuli. For that you'd need it hooked up to a brain directly. It's too filtered and biased by getting text only; this approach naively ignores things like memory and assumes text messages exist in a vacuum. Throwing a black box into an analytical prediction machine only works as long as you're certain it'll generally give the same output for the same input, not if your black box can suddenly experience 5 years of development and emerge a different entity. It's skipping too many steps to become intelligent; I mean, it literally skips the entire process between reading and writing.

The approach is not sound when all the other factors are considered. If AI continues along this path, big AI companies will likely need to usurp the next tech breakthroughs, like quantum computing and fusion energy, to keep growing and producing more profit, instead of these technologies being used for better purposes (cheaper and cleaner household energy, scientific advances, etc.). All things considered, excelling at image analysis, creative writing and digital art won't be worth all the damage it's going to cause.

Usurp? They won't be the ones to develop quantum computers, nor will they be developing fusion. If those technologies become available they might start using them, but that won't somehow mean they won't be available for other uses.

And seeing as they make money from "renting out" the models, they can easily be "used for better purposes".

ChatGPT is currently free for anyone to use; this isn't some technology they're hoarding and keeping for themselves.

By usurp I mean fill out all the available capacity for their own use (along with other tech giants who will be running the same moon race), assuming by that time they will be the largest tech giants of the time and have the financial means to do so.

Don't get me wrong, the things that ChatGPT can do are amazing. Even if it hallucinates or can't really reason logically, it is still beyond what I would have expected. But when the time I mentioned above comes, people won't be given a choice between AI and cheaper energy or better health care. All those technological advancements will be bought up to full capacity by AI companies, and AI will be shoved down people's throats.

And yes, ChatGPT is free, but that is only a business decision, not a "for the good of humanity" act. Free ChatGPT helps with testing and builds popularity, which in turn brings investment. I am not saying anything negative (or positive) about their business plan, but don't think for a second that they will have any ethical concerns about leeching upcoming technological innovations for the sake of generating profit. And this is just one company. There will be others too: Amazon, Google, Microsoft, etc. They will all aggressively try to own as much of these technologies as possible, leaving only scraps for other uses (basically making them very expensive to use).

Translation: GPT-5 will (most likely illegally) be fed academic papers that are currently behind a paywall

I guess then we would be able to tell it to recite a paper for free and it may do it.

Or hallucinate it. Did you know that large amounts of arsenic can cure cancer and the flu?

PhD level intelligence? Sounds about right.

Extremely narrow field of expertise ✔️

Misplaced confidence in its abilities outside its area of expertise ✔️

A mind filled with millions of things that have been read, and near zero from interactions with real people ✔️

An obsession over how many words can get published over the quality and correctness of those words ✔️

A lack of social skills ✔️

A complete lack of familiarity with how things work in the real world ✔️

"Never have I been so offended by something I 100% agree with!"

Now it can not only tell you to eat rocks, but also what type of rock would be best for your digestion.

I prefer moon rocks, they're a little meatier

One of these days Alice, bang, zoom, straight to the moon.

Will GPT-7 then be a burnt-out startup founder?

Ah. The synchronicity.

Literally the only thing I've seen this used for that seems impressive and useful is that Skyrim companion.

So when it helps out with a recipe, we won't get a suggestion specifically for Elmer's, but rather the IUPAC name for superglue?