this post was submitted on 24 Jun 2024

Science Memes

Welcome to c/science_memes @ Mander.xyz!

Hard to make a roadmap when people can't even agree on what the destination is, let alone how to get there.

But if you have enough data on how humans react to stimuli, and you have a good enough model, then you will be able to train it to behave exactly like a human. The approach is sound, even though in practice there prooobably isn't enough usable training data in the world to reach true AGI; still, the models are already good enough to be used for certain tasks.

Thing is, we're not feeding it how humans react to stimuli. For that you'd need it hooked up to a brain directly. It's too filtered and biased by getting text only; this approach naively ignores things like memory and assumes text messages exist in a vacuum. Throwing a black box into an analytical prediction machine only works as long as you're certain it'll generally throw out the same output for the same input, not if your black box can suddenly experience 5 years of development and emerge a different entity. It's skipping too many steps to become intelligent; I mean, it literally skips the entire process between reading and writing.

Yeah, that was a hypothetical: if you had those things, you would be able to create a true AGI (or what I would consider a true AGI, at least).

Text is basically just a proxy, but to become proficient at predicting text you do need to develop many of the cognitive abilities that we associate with intelligence, and it's also the only type of data we have literal terabytes of lying around, so it's the best we've got 🤷‍♂️

Regarding memory, the human mind can be viewed as taking in stimuli, associating them with existing memories, condensing that into some high-level representation, then storing it. An LLM could, with a long enough context window, look back at past input and output and use that information to influence its current output, to mostly the same effect.
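
To make the context-window idea concrete, here is a minimal Python sketch. `ContextWindowMemory` is a hypothetical helper, and it counts whole turns rather than tokens (real models limit context by tokens); the only "memory" is whatever still fits in the window and gets re-sent as prompt text, so older turns silently fall out.

```python
from collections import deque


class ContextWindowMemory:
    """Hypothetical helper: keeps only the most recent turns as 'memory'."""

    def __init__(self, max_turns: int):
        # A deque with maxlen discards the oldest entry once it is full
        self.turns = deque(maxlen=max_turns)

    def add(self, speaker: str, text: str) -> None:
        self.turns.append(f"{speaker}: {text}")

    def build_prompt(self, new_input: str) -> str:
        # The model only "remembers" what is re-fed here as plain text
        return "\n".join([*self.turns, f"User: {new_input}", "Assistant:"])


memory = ContextWindowMemory(max_turns=2)
memory.add("User", "My name is Ana.")
memory.add("Assistant", "Nice to meet you, Ana.")
memory.add("User", "I like boats.")
print(memory.build_prompt("What is my name?"))
```

With `max_turns=2`, the first message has already dropped out by the time the question is asked, so nothing in the prompt states the name directly.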

What do you mean throwing a black box into an analytical prediction machine? And what do you mean 5 years of development?

The black box is the human who reads and outputs text, and the analytical prediction machine is the AI. 5 years of development is the human living their life before returning to continue writing. It is an extreme example, but I'm just trying to point out that the context of what a person might write can change drastically between individual messages because anything can happen in between, and thus the data is fundamentally flawed for training intelligence, as that step, the thought process, is fully missing.

As to why I called the AI an analytical prediction machine, that's because that's essentially what it does. It has analyzed an unholy amount of random text from the internet, meaning conversations/blogs/books and so on, to predict what could follow the text you gave it. It's why prompt injection is so hard to combat and why if you give it a popular riddle and change it slightly like "with a boat, how can a man and goat get across the river", it'll fail spectacularly trying to shove in the original answer somehow. I'd say that's proof it didn't learn to understand (cognition), because it can't use logic to reason about a deviation from the dataset.
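
A deliberately crude sketch of "predicting what could follow the text", in Python: a bigram model that only counts which word followed which in a tiny made-up corpus and greedily appends the most frequent follower. Real LLMs use neural networks over tokens and long contexts, so this is an assumption-laden toy, not how ChatGPT actually works; it only shows how a pure continuation predictor falls back on the memorized pattern even when the prompt deviates from it.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count, for every word, which words followed it in the training text."""
    words = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def continue_text(follows: dict[str, Counter], prompt: str, n_words: int) -> str:
    """Greedily append the most frequent follower of the last word."""
    out = prompt.lower().split()
    for _ in range(n_words):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # word never seen in training: nothing to predict
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


# Tiny made-up "training set" shaped like the classic river-crossing riddle
corpus = (
    "the man takes the goat across the river then the man returns "
    "the man takes the wolf across the river then the man returns"
)
model = train_bigrams(corpus)
print(continue_text(model, "how can a man and a goat cross the river", 3))
# how can a man and a goat cross the river then the man
```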

As for memory, we can kind of simulate it with text, but it's not perfect. If the AI doesn't write it down, it didn't happen, and thus any thoughts, feelings or mental analysis stop existing upon each generation. The only way it could possibly develop intelligence is if we made it needlessly ramble and describe everything like a very bad book.

And thus, to return to the beginning of your comment: I don't believe it's necessary to possess any cognitive abilities to generate text, and in turn I don't see it as evidence of us getting any closer to AGI.

Prompt:

Answer:

Are there biases due to the training data? Yes

Does that mean it is totally incapable of reason? No, why would it?

And the models aren't trying to act like a specific person, but like humans in general, so variations in writing style in the data are quite irrelevant; as we've already seen, it'll usually adopt the writing style of the prompt, much like a writer will usually stick to their writing style throughout a book.

Memories are not required for intelligence, and letting a model ramble to itself will just cause the entropy of the output to increase until it's spewing gibberish, akin to a human locked in solitary for long enough.

Let's do the riddle I suggested, because we need something popular in the dataset, but present it with a deviation that makes it stupidly simple yet is unlikely to exist.

Prompt:

Answer:

A normal human wouldn't be fooled by this; they'd say that the man and goat can just go across, and maybe ask where the riddle is. They'd likely be confused or expect more. The AI doesn't, because it completely lacks the ability to reason. At least it ends up solved; that's probably the best response I got when trying to make this point. Let's continue.

Follow up prompt:

Answer:

Final prompt:

Final answer:

I think that's quite enough; it's starting to ramble like you said it would (tho much earlier than expected), and unlike the first solution, it doesn't even end up solved anymore xD. I'd argue this is a scenario that should be absolutely trivial, and yet the AI is trying to assert information that I didn't present and continues to fail to apply logic correctly. The only time it knows how to reason is when someone in its dataset already spelled out the reasoning for a certain question. If the logic doesn't exist in the dataset, it has great difficulty making heads or tails of it.

And yes, I'd argue memories are indeed absolutely vital to intelligence. If we want cognition, aka the process of acquiring knowledge and understanding, we need it to remember. And if it immediately loses that information, or the information erodes that quickly, it's essentially worthless.

Tried the same prompt:

Asking questions because you know the dataset is biased towards a particular solution isn't showing the fault in the system, much like asking a human a trick question doesn't prove humans are stupid. If you want to test the logical reasoning, you should try questions it is unlikely to have ever heard before, where it needs to actually reason on its own to come to the answer.

And I guess people with anterograde amnesia cannot be intelligent, are incapable of cognition and are worthless, since they can't form new memories.

It's not much of a trick question, if it's absolutely trivial. It's cherry picked to show that the AI tries to associate things based on what they look like, not based on the logic and meaning behind them. If you gave the same prompt to a human, they likely wouldn't even think of the original riddle.

Even in your example, it starts off by doing absolute nonsense, and only when you correct it by spelling out the result does it finally manage, but it still presents the answer in the format of the original riddle.

You can notice that in my example I intentionally avoid telling it what to do and just question the bullshit it made, and instead of thinking "I did something wrong, let's learn", it just spits out more garbage with absolute confidence. It doesn't reason. Just try regenerating the last answer, but ask it why it sent the man back instead; don't do any of the work for it. Treat it like a child you're trying to teach something, not a machine you're guiding towards the correct result.

And yes, people with memory issues immediately suffer on the intelligence side; their lives are greatly impacted by it and it rarely ends well for them. And no, they are not worthless. I never said that they or AI are worthless, just that "machine learning" in its current state (as in how the technology works) doesn't get us any closer to AGI. Just like a person with severe memory loss wouldn't be able to do the kind of work we'd expect from an AGI.

The approach is not sound when all the other factors are considered. If AI continues along this approach, it is likely that big AI companies will need to usurp the next possible tech breakthroughs like quantum computing and fusion energy to be able to keep growing and producing more profit, instead of these techs being used for better purposes (cheaper and cleaner household energy, scientific advances, etc.). All things considered, excelling at image analysis, creative writing and digital arts won't be worth all the damage it's going to cause.

Usurp? They won't be the ones to develop quantum computers, nor will they be developing fusion. If those technologies become available they might start using them, but that won't somehow mean they won't be available for other uses.

And seeing as they make money from "renting out" the models, they can easily be "used for better purposes".

ChatGPT is currently free to use for anyone; this isn't some technology they're hoarding and keeping for themselves.

So many people have conspiracy theories about how ChatGPT is stealing things and whatever, with people in this thread crowing that it's immoral if they teach it with paywalled journal articles, though I bet I can guess who their favorite Reddit founder is...

I use GPT to help code my open source project and it's fantastic; everyone else I know who contributes to FLOSS is doing the same. It's not magic, but for a lot of tasks it can cut out 90% of the time, especially prototyping and testing. I've been able to add more and better functionality thanks to a free service, and I think that's a great thing.

What I'm really looking forward to is CAD getting generative tools. Refining designs into their most efficient forms and calculating strengths would be fantastic for the ecosystem of freely shared designs, and text2printable would be fantastic: 'design a bit to fix this problem' could shift a huge amount of production back to local small industry or bring it into the home.

The positive possibilities of people having access to these technologies is huge, all the groups that currently can't compete with the big corporations suddenly have a huge wall pulled down for them - being able to make custom software tools for niche tasks is fantastic for small charities or community groups, small industry, eco projects, etc.

It'll take time for people to learn how to use the tools effectively just like when computers were new but as it becomes more widely understood I think we'll see a lot of positive innovation which it enables.

I am not denying the positive use cases being employed now and possibly in the future. I am not opposing the use or development of AI tools now or in the future either.

However, the huge negative possibilities are very real too, and are (or will be) affecting humanity. I am against the course big AI companies seem to be taking and against the possible future allocation of most major tech innovations to their cause.

It is of course very hard to predict how the positives and negatives will balance out, but I think big tech companies don't have any interest in balancing this out. They seem to be very short-sighted about anything other than direct profits. I think they will take the easiest way to more profit/AI dominance, which is a short-term investment. So I am not very optimistic about how it will pan out. Maybe I am wrong and, like computers, it will open up a whole new world of possibilities. But the landscape then and the landscape now are also quite different in terms of how big tech companies and the richest people act.

Your position is: "I like AI because it makes my job/hobbies easier. Also my coworkers do the same, because they are in almost the same position as me. I understand why people don't like AI, they must just be reading fake-news about it and believing it. Why can't they see that AI is a benefit for society?"

Not once did you mention any of the reasons people are opposed to AI, just that you hope one day they will get over it and learn how to use the tools to bring down big business.

I like how you imply that only programmers at large corporations know how to build things. If they would just use the AI tools I bet you could hire in a bunch more developers for cheap to boost productivity!

Here's a clue: no one gives a shit about making it slightly easier to code, make pictures, or write emails. The costs for maintaining the system and developing it are absurd when we have actual problems affecting people right now. This is all a waste of time, and is America's latest scam. Before that was cryptocurrency, medical investment fraud, and a hundred other get-rich-quick/save-the-world schemes designed to do one thing: generate profit for a small group of people so they can ride off into the sunset, their American dream complete.

You're absolutely delusional if you think no one wants code done quicker and easier, and that's not to mention the endless other things it makes possible, like giving people access to vital services like healthcare in their native language, etc.

These are things that are going to totally change the world for the better, removing power from corporations and giving it to people. You may not understand that because you're not involved in actually doing anything productive, but it's a reality everyone can see.

Yes, things have been scams, but there are also things that have dramatically changed the world for the better: healthcare and education in remote and impoverished areas depend entirely on mobile networks. They're also now absurdly cheap; the cost and effort of sending text used to be prohibitive, but now you can video chat with your whole family all day every day at no extra cost, thanks to a technology which became ubiquitous.

As for your very wise solution of 'just hire more developers': yes, that is why corporations are able to capture and control markets. A world where only the rich have the power to make things and compete is a horrible late-capitalist hell. Stop defending capitalism just because you're used to it. Yes, you have an affluent life thanks to the suffering of others, which is great for you, but I don't want to live like that. I don't want to require children to slave in cocoa and coffee plantations or starving mothers to work 16-hour days in fast fashion garment factories when we have the ability to free those people and give them good lives by harnessing AI to help automate boring and laborious tasks.

Capitalism is not as good as you seem to think it is; learn about the reality of capitalism beyond your glib bubble and you'll realize that AI tools are vital for a fair world and a world at peace.

By usurp I mean fill out all the available capacity for their own use (along with other tech giants who will be running the same moon race), assuming by that time they will be the largest tech giants of the time and have the financial means to do so.

Don't get me wrong, the things that ChatGPT can do are amazing. Even if it hallucinates or can't really reason logically, it is still beyond what I would have expected. But when the time I mentioned above comes, people won't be given a choice between AI or cheaper energy/better healthcare. All those technological advancements will be bought to full capacity by AI companies, and AI will be shoved down people's throats.

And yes, ChatGPT is free, but that is only a business decision, not a "for the good of humanity" act. Free ChatGPT helps with testing and generating popularity, which in turn brings investment. I am not saying anything negative (or positive) about their business plan, but don't think for a second that they will have any ethical concerns about leeching upcoming technological innovations for the sake of generating profit. And this is just one company. There will be others too: Amazon, Google, Microsoft, etc. They will all aggressively try to own as much of these techs as possible, leaving only scraps for other uses (basically making them very expensive to utilise).

It's AI and cheaper healthcare, or no AI and spiraling healthcare costs, especially with falling birth rates putting a burden on the system.

AI healthcare tools are already making it easier to provide healthcare. I'm in the UK, so the math on who benefits is different, but tools for early detection of tumors not only cut costs but increase survivability too, and it's only one of many similar technologies already in use.

Akinator-style triage could save huge sums and many lives, especially in underserved areas, as could rapid first aid advice. We have a service for non-emergency medical advice; they basically tell you whether you need to go to A&E, see the doctor, or wait it out, and it's helped allocate resources and save lives in cases where people would have waited out something that needs urgent care. Having your phone able to respond to 'my arm feels funny' by asking a series of questions that determines the medically correct response could be a real life saver. 'Alexa, I've fallen and can't get up' has already saved people's elderly parents' lives; 'Clippy, why is there blood in my poop' or 'Hey Google, does this mole look weird' will save even more.

Medical admin is a huge overhead; having infinite instances of medically trained clerical staff running 24/7 would be a game changer. Being able to call and say 'this is the new situation' and get appointments changed or processes started would be huge.

Further down the line, we're looking at being able to get basic tests done without needing a trained doctor or nurse to do it. Decreasing their workload will allow them to provide better care where it's needed: a machine able to take blood, run tests on it, then update the GP with results as soon as they're done would cut costs and wasted time, especially if the system is trained with various sensors to perform health checks on the patient while taking blood. Spotting things out of the ordinary for a patient is a complex problem, but one AI could be much better at than humans, especially overworked humans.

As for them owning everything, that can only happen if the anti-AI people continue to support stronger copyright protections against training. If we agreed that training AI is a common good and information should be fair use over copyright, then any government, NGO, charity, or open source crazy could train their own. It's like electricity: Edison made huge progress and cornered the market, but once the principles were understood anyone could use them, so as tech advanced it became increasingly easy for anyone to fabricate a thermopile or turbine. There isn't a monopoly on electricity; there are companies that have local monopolies by cornering markets, but anyone can make an off-grid system with cheap bits from eBay.

For instance, I would be completely fine with this if they said, "We will train it on a very large database of articles, and finding relevant scientific information will be easier than before." But no, they have to hype it up with nonsense expectations so they can generate short-term profits for their fucking shareholders. This will either come at the cost of the next AI winter or a senseless allocation of major resources to a model of AI that is not sustainable in the long run.

Well, get your news about it from scientific papers and experts instead of tabloids and advertisements.

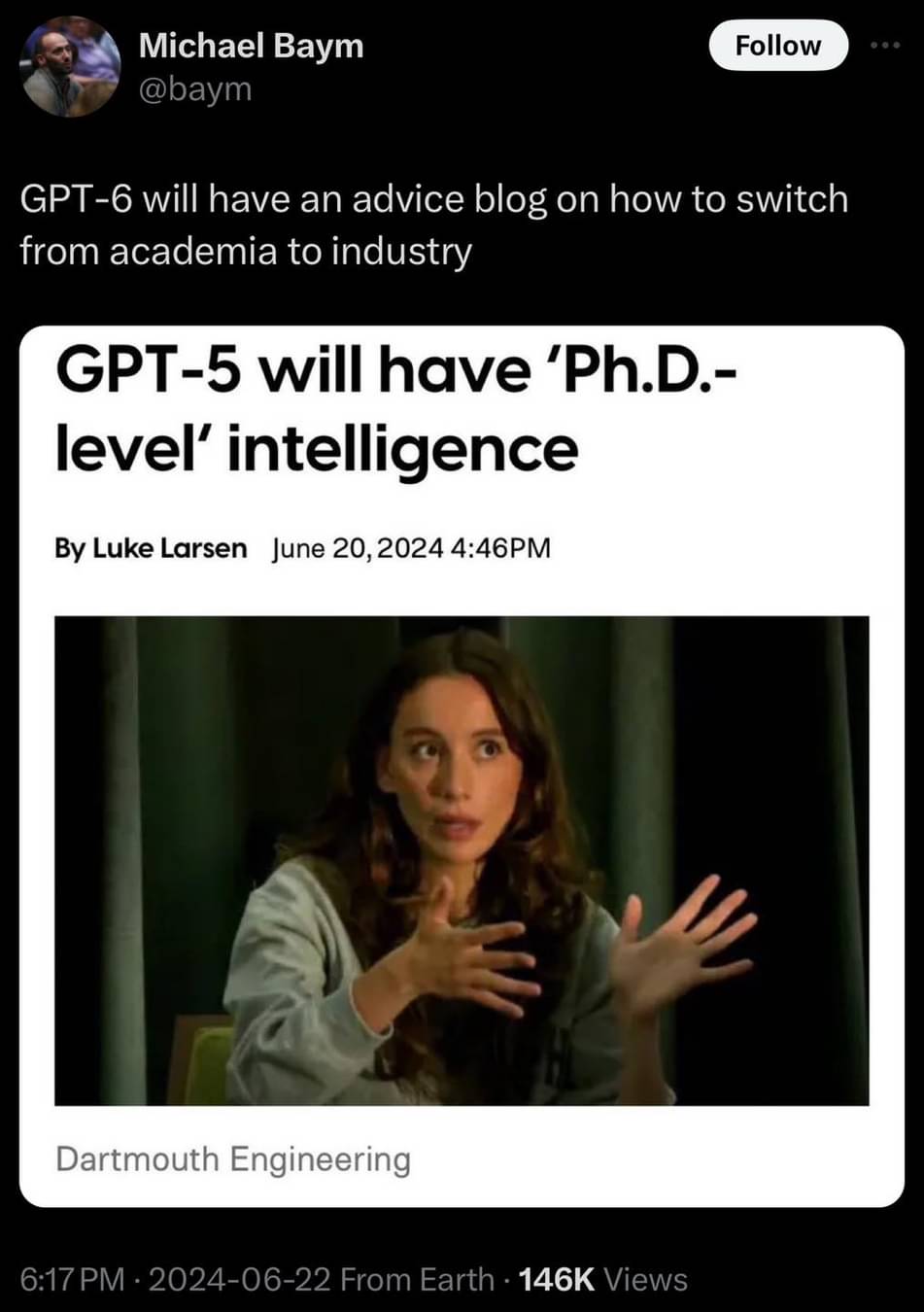

I mean the person who said this is the CTO of OpenAI and an engineer working in this project. I would imagine she could be considered an expert.

I feel like all the useful stuff you have listed here is more like advanced machine learning, and different from the AI that is being advertised to the public and mostly invested in. These are mostly things we can already do relatively easily with the available AI (i.e. highly sophisticated regression) with relatively low compute power and energy requirements (not counting outliers like AlphaFold, which still requires quite a lot of compute power). It is not related to why the AI companies will need to own most of the major computational and energy innovations in the future.

It is the image/text generative part of AI that looks more sexy to the public and that is therefore mostly being hyped/advertised/invested on by big AI companies. It is also this generative part of AI that will require much bigger computation and energy innovations to keep delivering significantly more than it can now. The situation is very akin to the moon race. It feels like trying to develop the AI on this "brute force" course will deliver relatively low benefits for the cost it will incur.

That's all well and good, but here in America that's just a long list of stuff I can't afford, and it won't be used to drive down costs. If it will for you, then I'm happy you live in a place that gives a shit about its population's health.

I know there will be people who essentially do the reverse of profiteering and will take advantage of AI for genuinely beneficial reasons, although even in those cases a lot of the time profit is the motive. Unfortunately the American for profit system has drawn in some awful people with bad motives.

If, right now, the two largest AI companies were healthcare nonprofits, I don't think people would be nearly as upset at the massive waste of energy, money, and life that current AI is.

Not sure I'm fully understanding your point. Are you saying that the large AI companies will create AIs that will create technologies beyond what everyone else is capable of, thus outcompeting everyone, effectively monopolizing every market, and from there basically becoming the Umbrella Corporation?

I would be very impressed if anyone managed to make an AI capable of innovation to that degree, but sure, in that case we would have to fall back on something like government oversight and regulations to keep the companies in check, I suppose.

No, other people will generate technologies like quantum computing, fusion energy. Big AI companies will try to own (by buying them out) as much of these as possible because the current model of AI they are using requires these techs to be able to deliver anything significantly better than what they have now. So these tech advancements will basically be owned by AI companies leaving very little room for other uses.

For these AI companies trying to go toward general AI is risky, as you said above it is not even well defined. On the other hand scaling up their models massively is a well defined goal which however requires major compute and energy innovations like those mentioned above. If these ever happen during like the next ten years or so big tech involved in AI will jump on these and buy as much of it as possible for themselves. And the rest will be mostly bought by governments for military and security applications leaving very little for other public betterment uses.

What if I said big fusion companies will take over the AI market since they have the energy to train better models? Seems exactly as likely.

Remember when GPUs stopped being available because OpenAI bought Nvidia and AMD and took all the GPUs for themselves?

No? Weird, since GPUs are needed for them to be able to deliver anything significantly better than what we have now 🤔

I guess the end result would be the same. But by and large the economic system and human nature would be to blame, which is actually what I am trying to blame here too: not AI, but people in power who abuse AI and steer it towards hype and profit.

General AI is a good goal for them because it's poorly defined, not in spite of it.

Grifts usually do have vague and shifting goal lines. See Star Citizen and the parallels between its supporters/detractors and the same groups with AI: essentially, if you personally enjoy/benefit from the system, you will overlook the negatives.

People are a selfish bunch and once they get a fancy new tool and receive praise for it, they will resist anyone telling them otherwise so they can keep their new tool, and the status they think it gives them (i.e. expert programmer, person who writes elegant emails, person who can create moving art, etc.)

AI is a magic trick to me, everyone thinks they see one thing, but really if you showed them how it works they would say, "well that's not real magic after all, is it?"

Idiots thinking a new thing is magic that will solve all the world's problems doesn't mean the thing doesn't have merit. Someone calling themselves an expert carpenter because they have a nailgun doesn't make nailguns any less useful.

If you see a person doing a data entry job, do you walk over to them, crack their head open and say "aww man, it's not magic, it's just a blob of fat capable of reading and understanding a document, and to then plot the values into a spreadsheet"?

It's not magic, it's not a superintelligence that will solve everything, it's the first tool we've been able to make that can be told what to do in human language, and then perform tasks with a degree of intelligence and critical thinking, something that would normally require either a human or potentially years of development to automate programmatically. That alone makes it very useful.

Why isn't it being sold as just a new coding language then?

Because it isn't

What other practical use for it is there?