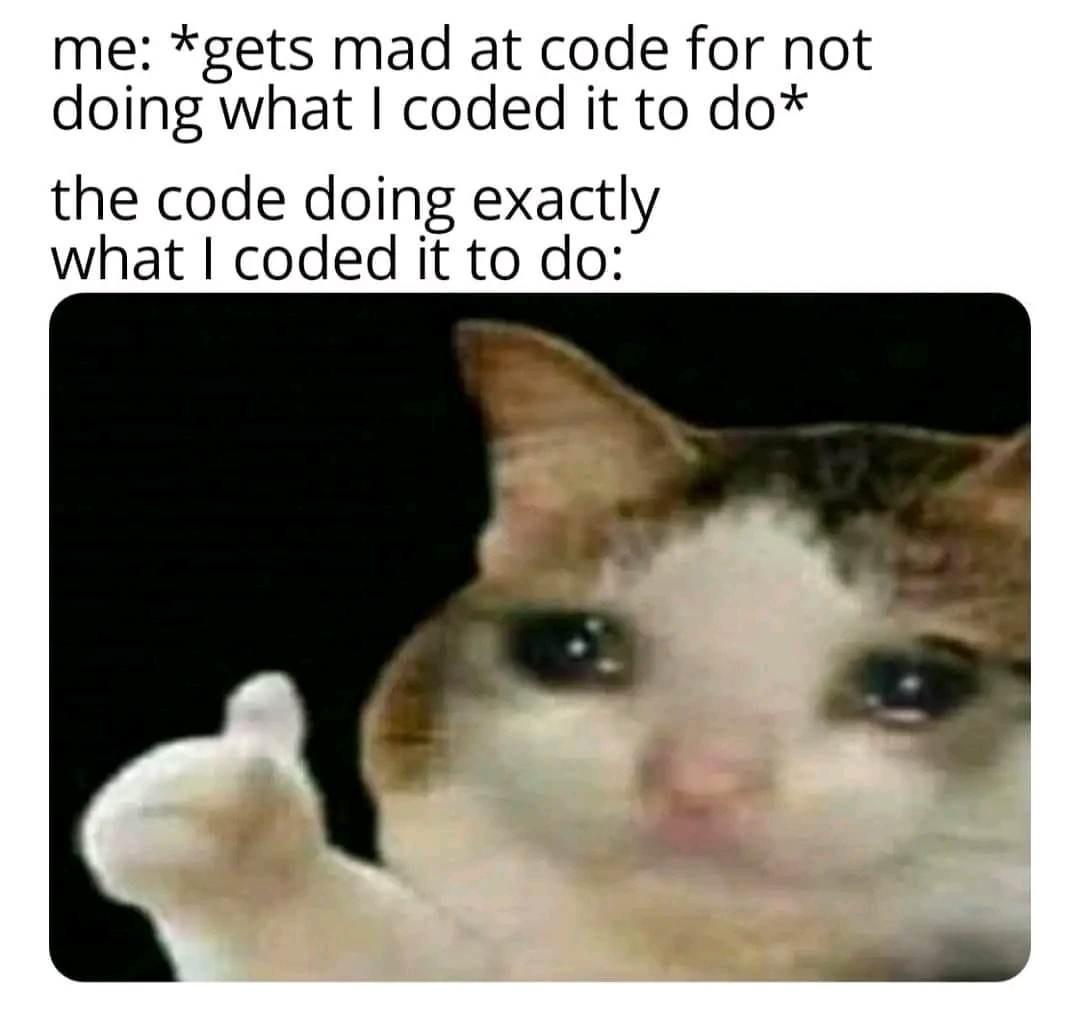

Me looking at my unit tests failing one after another.

Yeah, but have you ever coded shaders? That shit’s magic sometimes. Also a pain to debug: you have to look at colors, or sometimes millions of numbers through a frame analyzer, to see what you did wrong. You can’t print messages to a log.
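A rough sketch of the "look at colors" trick, written in Python rather than a real shading language so it runs anywhere. The function name `debug_color`, the range defaults, and the color choices are all made up for illustration; an actual shader would do the same mapping per pixel and write the result to the framebuffer.

```python
def debug_color(value, lo=0.0, hi=1.0):
    """Map a scalar to an (r, g, b) tuple, the way a debug shader might.

    Hypothetical helper: the color scheme is an arbitrary convention.
    """
    if value != value:          # NaN is the only value not equal to itself
        return (1.0, 0.0, 1.0)  # magenta flags NaN
    if value < lo:
        return (0.0, 0.0, 1.0)  # blue: below the expected range
    if value > hi:
        return (1.0, 0.0, 0.0)  # red: above the expected range
    t = (value - lo) / (hi - lo)
    return (t, t, t)            # grayscale: in range, brighter means larger


# With no log to print to, the "output" is the color you see on screen.
for v in (0.5, -1.0, 2.0, float("nan")):
    print(v, debug_color(v))
```

Loud colors for the failure cases mean a bad value shows up as an obvious patch on screen instead of a slightly wrong shade.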

Can't you run it on an emulator for debugging, Valgrind-style?

Computers don't do what you want, they do what you tell them to do.

Exactly, they're passive aggressive af

I wouldn't call them passive, they do too much work. More like aggressively submissive.

Maliciously compliant perhaps

They do what you tell them, but only exactly what and how you tell them. If you leave any uncertainty, chances are it will fuck up the task.

Stupid code! Oh, looks like this was my fault again... this time.

Must've been ChatGPT's fault.

My experience is that if you don't know exactly what code the AI should output, it's just Stack Overflow with extra steps.

Currently I'm using a 7B model, so that could be why?