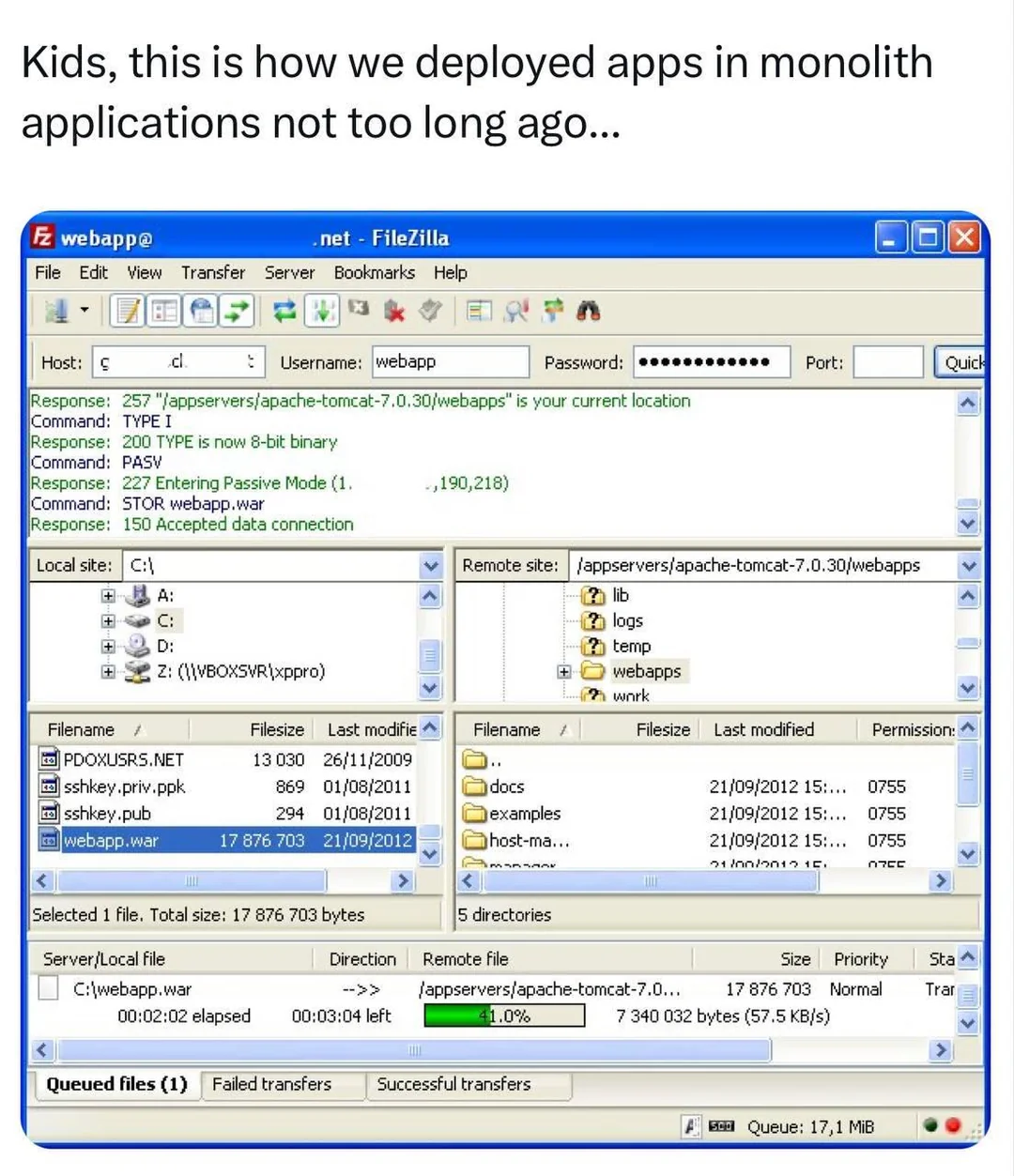

I used CuteFTP, but I am a gentleman

Programmer Humor


I remember this. I also remember using scp instead. And FTP, if I go back far enough. rsync is still my friend, though ZFS has mostly replaced it now.

I remember joining the industry and switching our company over to full Continuous Integration and Deployment. Instead of uploading DLLs directly to prod via FTP, we could verify each build, deploy to each environment and run some service tests to check that pages were loading, all the way up to prod, with rollback. I showed my manager, and he shrugged. He didn't see the benefit when, in his eyes, all he needed to do was drag and drop the files and load the page to make sure everything was fine.

Unsurprisingly, I found out that this is how he builds websites to this day...
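A minimal Python sketch of the CI/CD pipeline described in the comment above: deploy a build, check that each page answers with HTTP 200, and roll back if any check fails. The `deploy` and `rollback` callables, the version labels and the URLs are hypothetical stand-ins for whatever a real pipeline uses; only the status check relies on the actual standard library (`urllib.request`).

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable, Optional


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for a GET request to url."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx/5xx responses raise, but still carry a status code


def deploy_with_smoke_test(
    version: str,
    previous: str,
    urls: Iterable[str],
    deploy: Callable[[str], None],
    rollback: Callable[[str], None],
    fetch_status: Callable[[str], int] = http_status,
) -> bool:
    """Deploy version, require HTTP 200 from every URL, else roll back to previous.

    Returns True if the new version stays live, False if it was rolled back.
    """
    deploy(version)
    for url in urls:
        status: Optional[int]
        try:
            status = fetch_status(url)
        except OSError:
            status = None  # connection errors and timeouts count as failed checks
        if status != 200:
            rollback(previous)
            return False
    return True


if __name__ == "__main__":
    released = []
    ok = deploy_with_smoke_test(
        "v2", "v1", ["https://example.com/"],
        deploy=released.append, rollback=released.append,
        fetch_status=lambda url: 500,  # simulate a broken page, no network needed
    )
    print(ok, released)  # False ['v2', 'v1']
```

Passing `fetch_status` in as a parameter keeps the rollback logic testable without a live server; a real setup might also want retries and checks on page content, not just status codes.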

I mean, there are a lot of Dockerfiles out there with `COPY . .`

True, but building the image is not the same as deploying to production.

Fair point

This is from before my time, but... deploying an app by uploading a prebuilt bundle? If it's a fully self-contained package, that seems good to me, perhaps better than many websites today...

That's one nice thing about Java. You can bundle the entire app in one .jar or .war file (a .war is essentially the same as a .jar but it's designed to run within a Servlet container like Tomcat).

PHP also became popular in the PHP 4.x era because it had a large standard library (you could easily create a PHP site with no third-party libraries), and deployment was simply copying the files to the server. No build step needed. Classic ASP was popular before it and also had no build step, but it had a very small standard library and relied heavily on COM components, which had to be manually installed on the server.

PHP is mostly the same today, but it's much faster than the PHP of the past: PHP 7 brought major engine speedups, and PHP 8 added an optional JIT compiler.

Somehow I miss those days. Now you need weeks of training to understand the black magic behind all the build/deployment stuff in whatever cloud provider your company decided to use…

We have our own platform based on Kubernetes and CNCF stuff, and we don't have to care about the metal underneath anymore. AWS? OTC? Azure? That's just a target parameter; the platform does the rest. It's great.

Nah, once you figure out one, the rest usually click.

People don't use FileZilla for server management anymore? I feel like I've missed that memo.

I suppose in the days of 'Cloud Hosting', a lot of people (hopefully) don't just manually upload new files to a server anymore.

Even if you still use normal servers that work like this, a better practice is to have a build server that creates the builds. For example, whenever you check code into the main branch, it creates a build for the server, and you deploy from there, instead of compiling locally, opening FileZilla and doing an upload.

If you're using 'Cloud Hosting', for example AWS, with VMs or bare metal, you might upload a new Elastic Beanstalk application version or build a new Amazon Machine Image, and deploy that in a more managed way. Or if you're using Docker, you just push a new Docker image to a Docker registry and deploy that.
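A rough Python sketch of the build server idea: only commits on the main branch get packaged into a deployable artifact named after the commit, which you then deploy instead of a locally compiled copy. The hook name `package_build`, the `build-<sha>.tar.gz` naming and the directory layout are assumptions for illustration; real CI systems such as GitHub Actions, GitLab CI or Jenkins express this in their own configuration.

```python
import tarfile
from pathlib import Path
from typing import Optional

# Hypothetical: the branch whose commits are allowed to produce deployable builds.
DEPLOY_BRANCH = "main"


def package_build(branch: str, commit: str, build_dir: Path, out_dir: Path) -> Optional[Path]:
    """Pack build_dir into out_dir/build-<short sha>.tar.gz for main-branch commits.

    Returns the artifact path, or None when the branch is not deployable.
    """
    if branch != DEPLOY_BRANCH:
        return None  # other branches produce no deployable artifact
    out_dir.mkdir(parents=True, exist_ok=True)
    # Naming the artifact after the commit makes each deploy traceable and
    # lets you redeploy an older build if you need to roll back.
    artifact = out_dir / f"build-{commit[:12]}.tar.gz"
    with tarfile.open(str(artifact), "w:gz") as tar:
        # arcname="." stores paths relative to the build directory.
        tar.add(str(build_dir), arcname=".")
    return artifact
```

The deploy step then only ever ships artifacts produced here, so what runs in production always corresponds to a known commit.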

For some of my sites, I still build on my PC and rsync the build directory across. I've been meaning to set up GitLab or something similar and configure automated deployments.
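For the "build on my PC and rsync the build directory across" workflow, a small Python wrapper at least makes the command repeatable. This is a sketch assuming rsync and SSH access are already set up; the `dist` directory and the `deploy@example.com:/var/www/site/` destination are hypothetical placeholders. `-a` (archive mode), `-z` (compression), `--delete` and `--dry-run` are standard rsync options.

```python
import subprocess
from typing import List


def rsync_command(build_dir: str, dest: str, dry_run: bool = False) -> List[str]:
    """Build the rsync argument list that mirrors build_dir to dest."""
    # Trailing slash: copy the *contents* of build_dir, not the directory itself.
    src = build_dir.rstrip("/") + "/"
    # Archive mode, compress in transit, remove files no longer in the build.
    cmd = ["rsync", "-az", "--delete"]
    if dry_run:
        cmd.append("--dry-run")  # report what would change without touching dest
    cmd += [src, dest]
    return cmd


def deploy(build_dir: str, dest: str, dry_run: bool = False) -> None:
    """Run rsync and raise CalledProcessError if it fails."""
    subprocess.run(rsync_command(build_dir, dest, dry_run), check=True)


if __name__ == "__main__":
    print(" ".join(rsync_command("dist", "deploy@example.com:/var/www/site/")))
```

Running once with `dry_run=True` is a cheap way to see what `--delete` would remove before it actually removes it.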

Yeah, I wasn't saying it's always bad in every scenario, but we used to have this kind of deployment at a professional company. It's pretty bad if this is still how you're doing it in an enterprise scenario.

But for a personal project, it's alright-ish. Still, there are easier setups, for example configuring an automated deployment from GitHub/GitLab. You can check out other people's deployment configs, since all that stuff is part of the repos, in the `.github` folder (or `.gitlab-ci.yml` on GitLab). So probably all you have to do is find a project that's similar to yours, like "static file upload over SFTP", and copy-paste the script into your own repo.

(for example: a script that publishes a website to GitHub Pages)

This is what I do because my sites aren't complicated enough to warrant a build system. Personally I think most websites out there are over-engineered. Example: a Discord friend made a React site that displays stats from a gaming server. It looks nice, but you literally can't hyperlink to any of the data, it can only be loaded dynamically and only looks coherent on a phone in portrait mode. There are a lot of people following trends (some good trends) but without really thinking about why.

I'm starting to like the htmx model a lot. It's a server-rendered app that uses HTML attributes to configure the dynamic bits (e.g. which URL to hit and which DOM element to insert the response into). You don't have to write much JS (or any, in some cases).
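To make the htmx model concrete, here is a minimal sketch using only Python's standard library `http.server`. `hx-get`, `hx-target` and `hx-swap` are real htmx attributes; the `/stats` route, the stats data and the unpkg script URL are assumptions (check the htmx docs for the currently recommended, version-pinned script tag). The server only ever returns HTML, and there is no hand-written JavaScript.

```python
import html
from http.server import BaseHTTPRequestHandler, HTTPServer

# Assumed CDN URL; pin an exact htmx version in real use.
PAGE = """<!doctype html>
<html>
  <head><script src="https://unpkg.com/htmx.org"></script></head>
  <body>
    <!-- On click, GET /stats and put the response inside #stats. -->
    <button hx-get="/stats" hx-target="#stats" hx-swap="innerHTML">Load stats</button>
    <div id="stats"></div>
  </body>
</html>"""


def render_stats(stats: dict) -> str:
    """Return an HTML fragment (not a full page) for htmx to swap in."""
    rows = "".join(
        f"<li>{html.escape(str(k))}: {html.escape(str(v))}</li>" for k, v in stats.items()
    )
    return f"<ul>{rows}</ul>"


class Handler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path == "/":
            body = PAGE
        elif self.path == "/stats":
            # Hypothetical data; a real app would query the game server here.
            body = render_stats({"players online": 12, "uptime": "3 days"})
        else:
            self.send_error(404)
            return
        data = body.encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)
```

Start it with `HTTPServer(("127.0.0.1", 8000), Handler).serve_forever()` and open http://127.0.0.1:8000/; clicking the button fetches `/stats`, and htmx swaps the returned fragment into `#stats`.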

> you literally can't hyperlink to any of the data

I thought most React-powered frameworks use a URL router out-of-the-box these days? The developer does need to have a rough idea what they're doing, though.
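Whatever the framework, the linkability point comes down to keeping the view state in the URL so that a pasted link reopens the same view. A framework-agnostic sketch in Python with `urllib.parse` (this is not React Router's API, just the underlying idea); the `server`/`player` keys and the base URL are hypothetical.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def permalink(base: str, state: dict) -> str:
    """Encode the view state (e.g. which server and player is shown) into a URL."""
    # Sorting the keys keeps the same state mapping to the same URL.
    return f"{base}?{urlencode(sorted(state.items()))}"


def state_from_url(url: str) -> dict:
    """Recover the view state from a URL, keeping the first value for each key."""
    query = parse_qs(urlsplit(url).query)  # blank values are dropped by default
    return {key: values[0] for key, values in query.items()}


if __name__ == "__main__":
    link = permalink("https://stats.example/", {"server": "eu-1", "player": "Ann B"})
    print(link)  # https://stats.example/?player=Ann+B&server=eu-1
    print(state_from_url(link))  # {'player': 'Ann B', 'server': 'eu-1'}
```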

Does this app use Java Swing?

Okay, but why did you use a password when the SSH/SFTP key is right next to the files?