welcome post is pinned on some remote instances without obvious reason https://awful.systems/post/61

TechTakes

Big brain tech dude got yet another clueless take over at HackerNews etc? Here's the place to vent. Orange site, VC foolishness, all welcome.

This is not debate club. Unless it’s amusing debate.

For actually-good tech, you want our NotAwfulTech community

yeah that's cos it's still pinned ;-) a year is probably enough I'll unpin it now

I'm not sure if they're a decent person or not, but I liked this sneer on the orange site, in the context of this recent rant (previously, on Awful), describing a hypothetical executive-humbling mechanism. I agree with them; I'd read that fanfiction too.

Tom Murphy VII's new LLM typesetting system, as submitted to SIGBOVIK. I watched this 20 minute video all through, and given my usual time to kill for video is 30 seconds you should take that as the recommendation it is.

The scales have fallen from my eyes

How could've blindness struck me so

LLM's for sure bring more than lies

They can conjure more than mere woe

All of us now, may we heed the sign

Of all text that will come to align

Watched this without sound, as I had opened the tab and wasn't paying attention, but did you really just suggest a video where they are drinking out of a clearly empty cup? I closed it in disgust.

oww god owwww official MS experimental LLM feature for Excel ow ow owwwwwwwww

From the creators of “we turned your date formatting into a math equation, and now many of your research papers have substantially faulty data”, we bring you…

I like this video: https://www.youtube.com/watch?v=zVQKDKKnb2Y

I kinda want to replay subnautica now.

I like this video very much - thanks for sharing!

NYT opinion piece title: Effective Altruism Is Flawed. But What’s the Alternative? (archive.org)

lmao, what alternatives could possibly exist? have you thought about it, like, at all? no? oh...

(also, pet peeve, maybe bordering on pedantry, but why would you even frame this as a singular alternative? The alternative doesn't exist, but there are actually many alternatives that have fewer flaws).

You don’t hear so much about effective altruism now that one of its most famous exponents, Sam Bankman-Fried, was found guilty of stealing $8 billion from customers of his cryptocurrency exchange.

Lucky souls haven't found sneerclub yet.

But if you read this newsletter, you might be the kind of person who can’t help but be intrigued by effective altruism. (I am!) Its stated goal is wonderfully rational in a way that appeals to the economist in each of us...

rational_economist.webp

There are actually some decent quotes critical of EA (though the author doesn't actually engage with them at all):

The problem is that “E.A. grew up in an environment that doesn’t have much feedback from reality,” Wenar told me.

Wenar referred me to Kate Barron-Alicante, another skeptic, who runs Capital J Collective, a consultancy on social-change financial strategies, and used to work for Oxfam, the anti-poverty charity, and also has a background in wealth management. She said effective altruism strikes her as “neo-colonial” in the sense that it puts the donors squarely in charge, with recipients required to report to them frequently on the metrics they demand. She said E.A. donors don’t reflect on how the way they made their fortunes in the first place might contribute to the problems they observe.

Eating live fire ants is flawed. But what's the alternative?

But if you read this newsletter, you might be the kind of person who can’t help but be intrigued by effective altruism. (I am!) Its stated goal is wonderfully rational in a way that appeals to the economist in each of us…

Funny how the wannabe LW Rationalists don't seem to read that much Rationalism, as Scott has already mentioned that our view of economists (that they are all looking for the Rational Economic Human Unit) is not up to date and not how economists think anymore. (So in a way it is a false stereotype of economists; wasn't there something about how Rationalists shouldn't fall for these things? ;) ).

Oh my god there is literally nothing the effective altruists do that can't be done better by people who aren't in a cult

didn't the comments say "tax the hell out of them" before they were closed

THIS IS NOT A DRILL. I HAVE EVIDENCE YANN IS ENGAGING IN ACAUSAL TRADE WITH THE ROBO GOD.

Found in the wilds^

Giganto brain AI safety 'scientist'

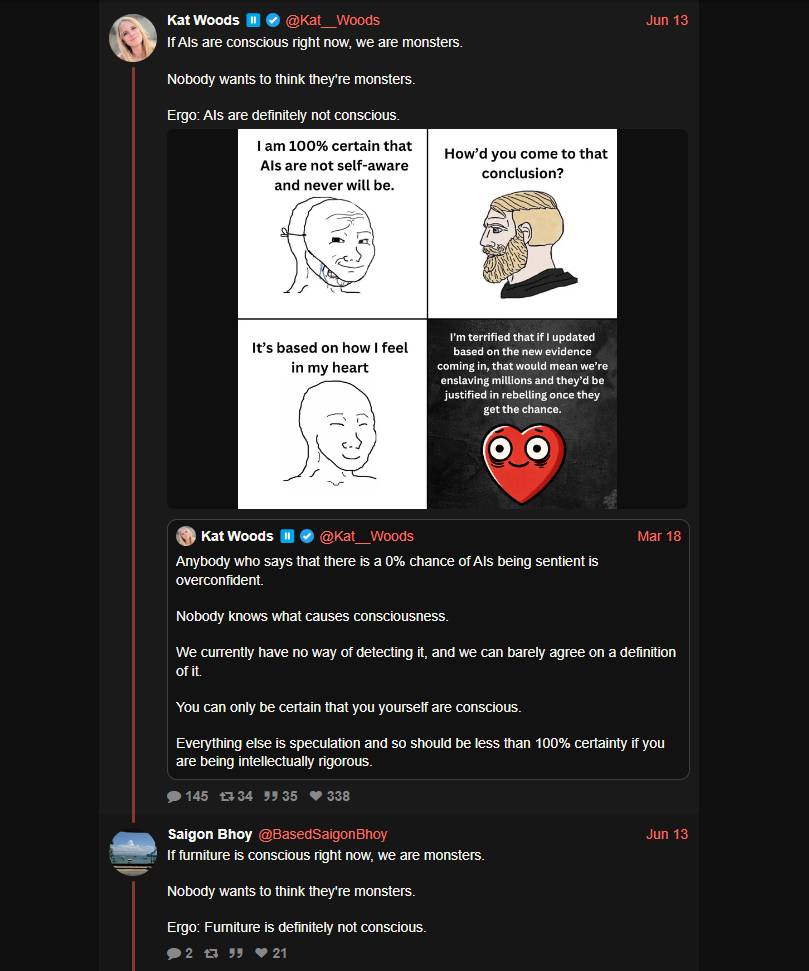

If AIs are conscious right now, we are monsters. Nobody wants to think they're monsters. Ergo: AIs are definitely not conscious.

Internet rando:

If furniture is conscious right now, we are monsters. Nobody wants to think they're monsters. Ergo: Furniture is definitely not conscious.

Is it time for EAs to start worrying about Neopets welfare?

literally roko's basilisk

https://xcancel.com/AISafetyMemes/status/1802894899022533034#m

The same pundits have been saying "deep learning is hitting a wall" for a DECADE. Why do they have ANY credibility left? Wrong, wrong, wrong. Year after year after year. Like all professional pundits, they pound their fist on the table and confidently declare AGI IS DEFINITELY FAR OFF and people breathe a sigh of relief. Because to admit that AGI might be soon is SCARY. Or it should be, because it represents MASSIVE uncertainty. AGI is our final invention. You have to acknowledge the world as we know it will end, for better or worse. Your 20 year plans up in smoke. Learning a language for no reason. Preparing for a career that won't exist. Raising kids who might just... suddenly die. Because we invited aliens with superior technology we couldn't control. Remember, many hopium addicts are just hoping that we become PETS. They point to Ian Banks' Culture series as a good outcome... where, again, HUMANS ARE PETS. THIS IS THEIR GOOD OUTCOME. What's funny, too, is that noted skeptics like Gary Marcus still think there's a 35% chance of AGI in the next 12 years - that is still HIGH! (Side note: many skeptics are butthurt they wasted their career on the wrong ML paradigm.) Nobody wants to stare in the face the fact that 1) the average AI scientist thinks there is a 1 in 6 chance we're all about to die, or that 2) most AGI company insiders now think AGI is 2-5 years away. It is insane that this isn't the only thing on the news right now. So... we stay in our hopium dens, nitpicking The Latest Thing AI Still Can't Do, missing forests from trees, underreacting to the clear-as-day exponential. Most insiders agree: the alien ships are now visible in the sky, and we don't know if they're going to cure cancer or exterminate us. Be brave. Stare AGI in the face.

This post almost made me crash my self-driving car.

not a cult btw

Ah yes, AGI companies, the things that definitely exist

Maybe AI could do Better than we've done... We'll make great pets

humans are pets

Actually not what is happening in the books. I get where they are coming from, but this requires redefining the word pet in such a way that it becomes a useless word.

The Culture series really breaks the brains of people who can only think in hierarchies.

It's mad that we have an actual existential crisis in climate change (temperature records broken across the world this year) but these cunts are driving themselves into a frenzy over something that is nowhere near as pressing or dangerous. Oh, people dying of heatstroke isn't as glamorous? Fuck off

If you've been around the block like I have, you've seen reports about people joining cults to await spaceships, people preaching that the world is about to end &c. It's a staple trope in old New Yorker cartoons, where a bearded dude walks around with a billboard saying "The End is nigh".

The tech world is growing up, and a new internet-native generation has taken over. But everyone is still human, and the same pattern-matching that leads a 19th century Christian to discern when the world is going to end by reading Revelation will lead a 25 year old tech bro steeped in "rationalism" to decide that spicy autocomplete is the first stage of The End of the Human Race. The only difference is the inputs.

Sufficiently advanced prompts are indistinguishable from prayer

if i only could, i'd prompt-engineer God,

Seriously, could someone gift this dude a subscription to spicyautocompletegirlfriends.ai so he can finally cum?

One thing that's crazy: it's not just skeptics, virtually EVERYONE in AI has a terrible track record - and all in the same OPPOSITE direction from usual! In every other industry, due to the Planning Fallacy etc, people predict things will take 2 years, but they actually take 10 years. In AI, people predict 10 years, then it happens in 2!

ai_quotes_from_1965.txt

Remember, many hopium addicts are just hoping that we become PETS. They point to Ian Banks’ Culture series as a good outcome… where, again, HUMANS ARE PETS. THIS IS THEIR GOOD OUTCOME.

I am once again begging these e/acc fucking idiots to actually read and engage with the sci-fi books they keep citing

but who am I kidding? the only way you come up with a take as stupid as “humans are pets in the Culture” is if your only exposure to the books is having GPT summarize them