I've yet to see a need for "AI integration ✨" into the desktop experience. Copilot, LLM chatbots, TTS, OCR, and translation using machine learning are all interesting, but I don't think OS integration is beneficial.

Time 💫 will ✨ prove 💫 you ✨ wrong. 💫

not every high-tech product or idea makes it. you don't see a lot of netbooks or wifi-connected kitchen appliances these days either; having the ability to make tiny devices or to connect every single device is not justification enough to actually do it. i view ai integration similarly: having an llm in some sidebar to change the screen brightness, find some time or switch the keyboard layout isn't really useful. being able to select text in an image viewer or search through audio and video for spoken words, for example, would be a useful application of machine learning in the DE, but that isn't really what's advertised as "AI".

Changing the brightness or WiFi settings can be very useful for many people. Not everyone is a Linux nerd and knows all the ins and outs of basic computing.

maybe, but these people wouldn't own a pc with a dedicated gpu or neural network accelerator.

i don't really think anyone would be against the last two examples being integrated into dolphin, nautilus, gwenview... either.

I agree. However, I think it is related to Capitalism and all the sociopathic corporations out there. It's almost impossible to think that anything good will come from the Blue Church controlling even more tech. Capitalism has always used any opportunity to enslave/extort people, and that continues with AI under its control.

However, I was also disappointed when I found out how negative 'my' crowd was. I wanted to create an open source low-end AGI to secure poor people a decent life without being attacked by Capitalism every day/hour/second, to create abundance, communities, and production, and in general to help build a social sub-society in the midst of the insane Blue Church and its propagandized believers.

It is perfectly doable to fight the Capitalist religion with homegrown AI based on what we know and have today. But nobody can do it alone, and if there's no one willing to fight the f*ckers with AI, then it will take time.

I definitely intend to build a revolution-AGI to kill off the Capitalist religion and save exploited poor people. No matter what happens, there will be at least one AGI that is trained on revolution, anti-capitalism and building something much better than this effing blue nightmare. The world's first aggressive 'Commie-bot', ha! 😍

As someone whose employer is strongly pushing them to use AI assistants in coding: no. At best, it's like being tied to a shitty intern that copies code off Stack Overflow and then blows me up on Slack when it magically doesn't work. I still don't understand why everyone is so excited about them. The only tasks they can handle competently are tasks I can easily do on my own (and with a lot less retyping).

Sure, they'll grow over the years, but Altman et al. are complaining that they're running out of training data. And even with an unlimited body of training data for future models, we'll still end up with something about as intelligent as a kid that's been locked in a windowless room with books their whole life and can either parrot opinions they've read or make shit up and hope you believe it. I think we'll get a series of incompetent products with an increasing ability to make wrong shit up on the fly until the C-suite moves on to the next shiny bullshit.

That's not to say we're not capable of creating a generally-intelligent system on par with or exceeding human intelligence, but I really don't think LLMs will allow for that.

tl;dr: a lot of woo in the tech community that the linux community isn't as on board with

You're getting a lot of flack in these comments, but you are absolutely right. All the concerns people have raised about "AI" and the recent wave of machine learning tech are (mostly) valid, but that doesn't mean AI isn't incredibly effective in certain use cases. Rather than hating on the technology or ignoring it, the FOSS community should try to find ways of implementing AI that mitigate the problems, while continuing to educate users about the limitations of LLMs, etc.

One comment that agrees 🥲

It's spelled flak, not flack. It's from the German word Flugabwehrkanone, which literally means aerial defense cannon.

Oh, that's very interesting. I knew about flak in the military context, but never realized it was the same word used in the idiom. The idiom actually makes a lot more sense now.

That's easy, move over to Windows or Mac and enjoy. I'll stay in my dumb as Linux distros, thank you.

The AI in my head is a bit underpowered but it gets the job done

Same as mine. But mine also gets confused regularly, and it gets worse with every new version (age) 🤣🤣

The first problem, as with many things AI, is nailing down just what you mean with AI.

The second problem, as with many things Linux, is the question of shipping these things with the Desktop Environment / OS by default, given that not everybody wants or needs that and for those that don't, it's just useless bloat.

The third problem, as with many things FOSS or AI, is transparency, here particularly training. Would I have to train the models myself? If yes: How would I acquire training data that has quantity, quality and transparent control of sources? If no: What control do I have over the source material the pre-trained model I get uses?

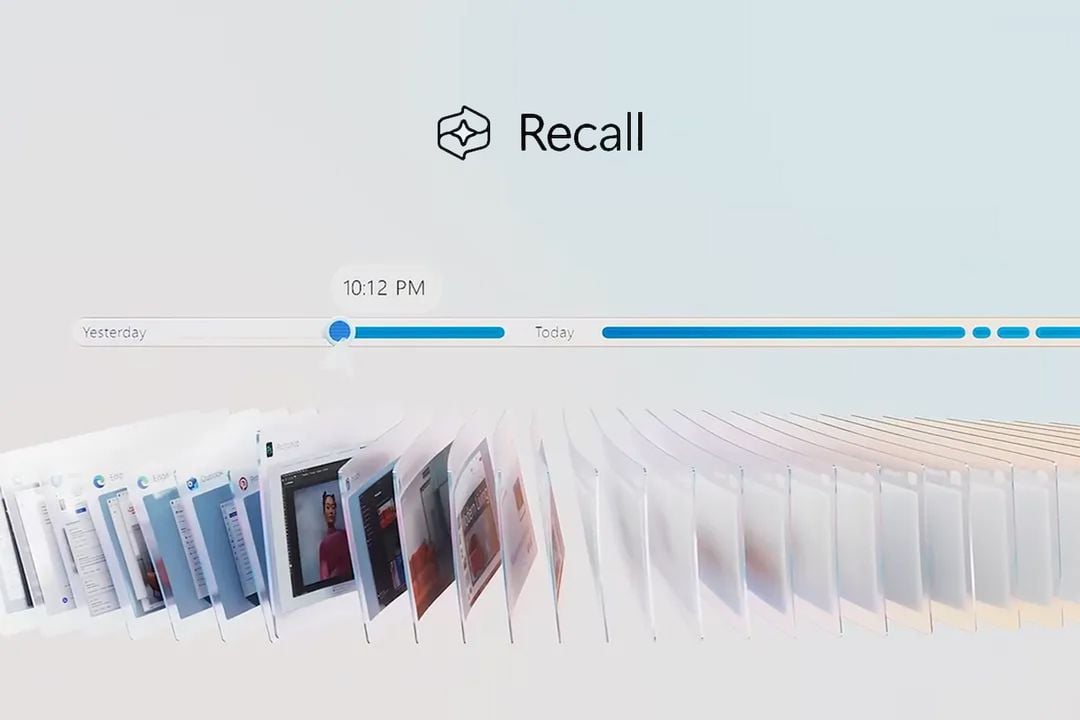

The fourth problem is privacy. The tradeoff for a universal assistant is universal access, which requires universal trust. Even if it can only fetch information (read files, query the web), the automated web searches could expose private data to whatever search engine or websites it uses. Particularly in the wake of Recall, the idea of saying "Oh actually we want to do the same as Microsoft" would harm Linux adoption more than it would help.

The fifth problem is control. The more control you hand to machines, the more control their developers will have. This isn't just about trusting the machines at that point, it's about trusting the developers. To build something of the caliber of full AI assistants, you'd need a ridiculous amount of volunteer effort, particularly due to the splintering that always comes with such projects and the friction that creates. Alternatively, you'd need corporate contributions, and they always come with an expectation of profit. Hence we're back to trust: do you trust a corporation big enough to make a difference to contribute to such an endeavour without any avenue of abuse? I don't.

Linux has survived long enough despite not keeping up with every mainstream development. In fact, what drove me to Linux was precisely that it doesn't do everything Microsoft does. The idea of volunteers (by and large unorganised) trying to match the sheer development power of a megacorp (with a strict hierarchy for who calls the shots) to produce such an assistant is ridiculous enough, but the suggestion that DEs should come with it already integrated? Hell no.

One useful application of "AI" (machine learning) I could see: evaluating logs to detect recurring errors and cross-referencing them with other logs to see if there are correlations, which might help with troubleshooting.
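To make the log idea concrete, here is a minimal standalone sketch. It deliberately uses a plain frequency and co-occurrence heuristic rather than a trained machine-learning model, and everything in it is an assumption for illustration: the log line format, the sample lines, and the names `template`, `analyze`, and `SAMPLE` are all hypothetical. A real tool would read something like `journalctl` output and would likely need a smarter way to group similar messages.

```python
import re
from collections import Counter, defaultdict
from datetime import datetime
from itertools import combinations

# Hypothetical log format: "YYYY-MM-DD HH:MM:SS LEVEL source: message".
LINE_RE = re.compile(r"^(\S+ \S+) (\w+) (.*)$")
EPOCH = datetime(1970, 1, 1)

def template(message: str) -> str:
    """Collapse variable parts (hex values, numbers) so repeats of one error match."""
    message = re.sub(r"\b0x[0-9a-fA-F]+\b", "<HEX>", message)
    return re.sub(r"\d+", "<NUM>", message)

def analyze(lines, min_count=2, window_seconds=60):
    """Return (recurring error templates, pairs of templates seen in the same time window)."""
    counts = Counter()
    windows = defaultdict(set)  # time bucket -> error templates seen in that bucket
    for line in lines:
        m = LINE_RE.match(line)
        if not m or m.group(2) != "ERROR":
            continue  # skip unparseable lines and non-error levels
        ts = datetime.strptime(m.group(1), "%Y-%m-%d %H:%M:%S")
        tmpl = template(m.group(3))
        counts[tmpl] += 1
        bucket = int((ts - EPOCH).total_seconds()) // window_seconds
        windows[bucket].add(tmpl)
    recurring = {t: c for t, c in counts.items() if c >= min_count}
    # Count how often two different error templates show up in the same window.
    pairs = Counter()
    for templates in windows.values():
        for a, b in combinations(sorted(templates), 2):
            pairs[(a, b)] += 1
    return recurring, pairs

# Invented sample data, not taken from any real system.
SAMPLE = [
    "2024-06-01 10:00:05 ERROR wifi: link lost, reason 3",
    "2024-06-01 10:00:20 ERROR dhcp: lease request timed out after 45 s",
    "2024-06-01 10:00:30 INFO usb: device connected",
    "2024-06-01 11:15:02 ERROR wifi: link lost, reason 7",
    "2024-06-01 11:15:40 ERROR dhcp: lease request timed out after 45 s",
    "2024-06-01 12:00:00 ERROR gpu: hang at 0x1f3a",
]

if __name__ == "__main__":
    recurring, pairs = analyze(SAMPLE)
    for tmpl, n in sorted(recurring.items()):
        print(f"{n}x  {tmpl}")
    for (a, b), n in pairs.most_common():
        print(f"seen together in {n} windows: {a!r} + {b!r}")
```

Here the Wi-Fi drop and the DHCP timeout both recur and always land in the same one-minute window, which is the kind of correlation the comment describes. Swapping the regex-based `template` step for a learned clustering model would be where actual machine learning could come in.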

That doesn't need to be an integrated desktop assistant, it can just be a regular app.

Really, that applies to every possible AI tool. Make it an app, if you care enough. People can install it for themselves if they want. But for the love of the Machine God, don't let the hype blind you to the issues.

You're half right. When something AI-based is announced, it gets heavily criticized by some people, and they are mostly right. When something new pops up, like Windows Recall, this "new" feature is certainly not a showcase of what AI is capable of, and it raises really important questions about privacy. But you're right that Linux should be a bit more interested in AI and try to do it the right way! For now, though, there are no really good use cases for AI inside a distro. LLMs are good but do not need to be linked to user activities. Image generators are great but do not need to be linked to user activities either. For example, Windows tried Recall and failed. Apple wants to implement something similar in iOS 18, and it will surely be a success among Apple-minded people. But here, where FOSS, privacy, and anti-Big-Tech folks are the main audience, it's absolutely certain that every for-profit "new AI" feature would be hated. I'm not against this mindset, just giving facts.

IMO you immensely overestimate the capabilities of these models. What they show to the public are always hand-picked situations, even if they say they don't.

Whenever AI is mentioned lots of people in the Linux space immediately react negatively.

Because whenever AI is mentioned it usually isn't even close to what AI meant.

This article should be ignored.

Tech Enthusiasts: Everything in my house is wired to the Internet of Things! I control it all from my smartphone! My smart-house is Bluetooth enabled and I can give it voice commands via Alexa! I love the future!

Programmers / Engineers: The most recent piece of technology I own is a printer from 2004 and I keep a loaded gun ready to shoot it if it ever makes an unexpected noise.

That doesn't describe me or any other programmer I know.

It doesn't describe me either, but I had nothing meaningful to contribute to the discussion.

Is there no Electron wrapper around ChatGPT yet? Jeez, we'd better hurry. Imagine having to use your browser like... for pretty much everything else.

I did not buy these gaming memory sticks for nothing, bring me more electron!

...this looks like it was written by a supervisor who has no idea what AI actually is, but desperately wants it shoehorned into the next project because it's the latest buzzword.

I see you've met my employer

"I saw a new toy on tv, and I want it NOW!"

- Basically how the technobro mind works.

Guys we need AI on our blockchain web3.0 iot. Just imagine the synergy

Here we have a straight-shooter with upper management written all over him

There are already a lot of open models and tools out there. I totally disagree that Linux distros or DEs should be looking to bake in AI features. People can run an LLM on their computer just like they run any other application.

Try to think less about "communities" and maybe you will be happy.

General Ludd had some good points tho...

Gnome and other desktops need to start working on integrating FOSS

In addition to everything everyone else has already said, why does this have anything to do with desktop environments at all? Remember, most open-source software comes from one or two individual programmers scratching a personal itch—not all of it is part of your DE, nor should it be. If someone writes an open-source LLM-driven program that does something useful to a significant segment of the Linux community, it will get packaged by at least some distros, accrete various front-ends in different toolkits, and so on.

However, I don't think that day is coming soon. Most of the things "Apple Intelligence" seems to be intended to fuel are either useless or downright offputting to me, and I doubt I'm the only one—for instance, I don't talk to my computer unless I'm cussing it out, and I'd rather it not understand that. My guess is that the first desktop-directed offering we see in Linux is going to be an image generator frontend, which I don't need but can see use cases for even if usage of the generated images is restricted (see below).

Anyway, if this is your particular itch, you can scratch it—by paying someone to write the code for you (or starting a crowdfunding campaign for same), if you don't know how to do it yourself. If this isn't worth money or time to you, why should it be to anyone else? Linux isn't in competition with the proprietary OSs in the way you seem to think.

As for why LLMs are so heavily disliked in the open-source community? There are three reasons:

- The fact that they give inaccurate responses, which can be hilarious, dangerous, or tedious depending on the question asked, but a lot of nontechnical people, including management at companies trying to incorporate "AI" into their products, don't realize the answers can be dangerously inaccurate.

- Disputes over the legality and morality of using scraped data in training sets.

- Disputes over who owns the copyright of LLM-generated code (and other materials, but especially code).

Item 1 can theoretically be solved by bigger and better AI models, but 2 and 3 can't be. They have to be decided by the courts, and at an international level, too. We might even be talking treaty negotiations. I'd be surprised if that takes less than ten years. In the meanwhile, for instance, it's very, very dangerous for any open-source project to accept a code patch written with the aid of an LLM—depending on the conclusion the courts come to, it might have to be torn out down the line, along with everything built on top of it. The inability to use LLM output for open source or commercial purposes without taking a big legal risk kneecaps the value of the applications. Unlike Apple or Microsoft, the Linux community can't bribe enough judges to make the problems disappear.

Sounds like something an AI would post. Quick, what color are your eyes?

Potato colored in my case.

Good.

The Luddites were right.