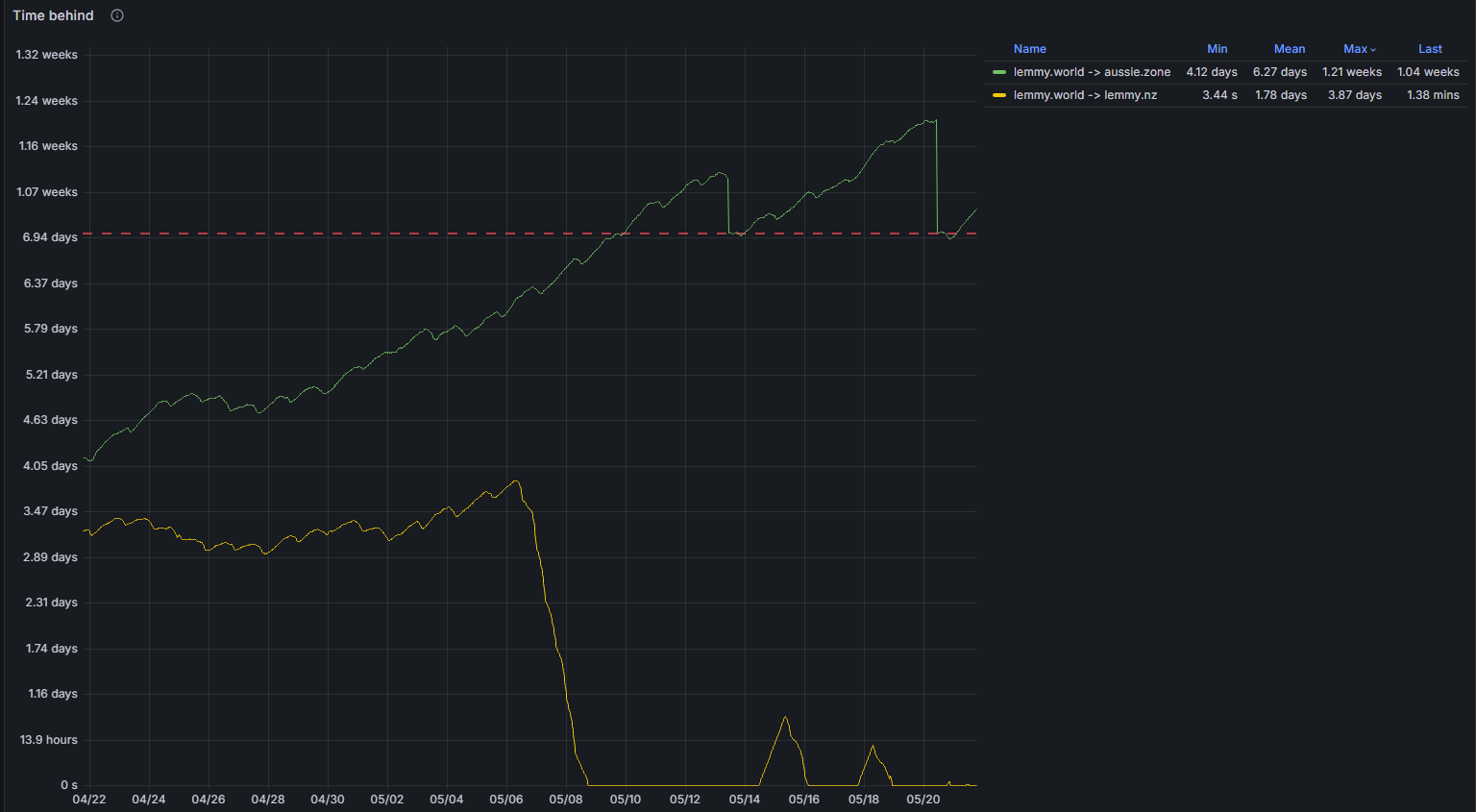

Kind of bumping this thread: what's the status? It seems like you still have a week's delay with LW?

Meta

Discussion about the aussie.zone instance itself

Yep, sure do. Our instance admins didn't seem super keen on the idea of a proxy server in Europe, and the Lemmy devs still haven't implemented batching, so we're still enjoying our week-long federation times with LW.

Having another look at the Aussie.zone All feed, it seems that posts now arrive in real time, but I guess the votes are the activities that get federated with a week's delay?

Yeah, it's been like that for a little while. It does kill the Top Day/Top Week sorts, though.

Edit: actually, comments on Aussie zone posts coming from LW don't federate for a week either. I believe it's the same story if somebody from AZ posts or comments in an LW community.

Ahh, thanks for the edit

That's sad to hear.

I'm really pushing for people to use communities on instances other than LW:

- [email protected] rather than [email protected]

- [email protected] rather than [email protected]

- [email protected]

- [email protected] compared to [email protected]

- [email protected] compared to [email protected]

- [email protected] compared to [email protected]

It's not much, but hopefully it helps a bit.

A side question...

What's gonna happen when this is finally fixed?

Will there be a flood of thousands of posts all at once, or within a short period, as we 'catch up'?

I don't think so? I'm guessing Lemmy requires a restart after an update, and I don't think it resends activities after a restart. I think it'll all just be lost into the ether.

Like tears in the rain... 😢

I've made a pinned post over in [email protected] for any confused lemmy.world users whose comments aren't getting any interaction in our communities. If there are any issues with the content of the post, I can fix it up.

Thanks, dhmo. One request, though: would it be possible to unpin the (now closed) moderator applications post? I was actually going to message you about that earlier (but put it into the "ah, I'll get around to it eventually" pile), as all the pinned posts make it harder to see posts on my mobile app.

Thanks, I've done that now; I kind of thought it might be a bit annoying. I'm in two minds about unpinning the tax scam one: on the one hand it's basically as old as the community, and on the other it's somewhat useful info.

I reckon you should keep it until someone writes a new one. I might write a more general one after tax time.

Personally, I'm not sure it's that useful; my assumption is that, given the more technical nature of most lemmings, it's probably pointless. But that's probably a bad way of thinking, since anybody can be scammed (and plenty have been!), and even though some people are harder to scam, everybody has their weaknesses.

Yeah, that would be good. Perhaps we could replace it with a more general thread about scams and encourage people to share scams they’ve come across, maybe refreshing it every year. That way we can all be a bit more aware of specific examples of ongoing scams; even if the average Lemmy user might not fall for them, it’s likely they know someone who will. The only concern with the megathread format, I guess, is that it will disappear as it becomes inactive (in people’s feeds, at least), so maybe a separate community is in order.

> stating that it's an issue on our end as our server isn't keeping up

This isn't exactly an issue on your end, unless you consider hosting the server in Australia to be the issue. The problem is the latency across the world combined with Lemmy not sending multiple activities simultaneously. There is nothing LW can do about this. As unfortunate as it is, the "best" solution at the time would be moving the server to Europe.

There are still some options besides moving the server entirely, though. If you can get the activities to Lemmy without as many delays, an experience similar to being hosted in Europe can be achieved.

Please ELI5: how does latency alone (319ms from Helsinki to Sydney, apparently) cause week-long response times?

Lemmy's current federation implementation works with a sending queue: it stores a list of activities to be sent in its database. There is a worker running for each linked instance that checks whether an activity should be sent to that instance and, if so, sends it. Due to how this is currently implemented, it only ever sends a single activity at a time, waiting for that activity to be successfully sent (or rejected) before sending the next one.
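To make the one-at-a-time behaviour concrete, here is a minimal Python model of a single per-instance worker. It is not Lemmy's code (Lemmy is written in Rust); the function name `simulate_sequential_worker` is hypothetical, and the model assumes each send costs exactly one round trip and nothing else.

```python
"""Minimal model of a federation worker that sends one activity at a time.

Assumption: each send costs exactly one round trip (`rtt` seconds) and
nothing else; a real instance also spends time signing, reading from the
database, and processing on the receiving side.
"""
from typing import List


def simulate_sequential_worker(created_at: List[float], rtt: float) -> List[float]:
    """Return the time each activity is acknowledged by the remote instance.

    `created_at` holds the creation timestamps (seconds) of the activities
    queued for one remote instance, in queue order.
    """
    finished: List[float] = []
    worker_free_at = 0.0  # when the worker can start its next send
    for t in created_at:
        # The worker cannot start before the activity exists, nor before
        # the previous send has been acknowledged.
        start = max(t, worker_free_at)
        worker_free_at = start + rtt  # blocked for one full round trip
        finished.append(worker_free_at)
    return finished


if __name__ == "__main__":
    # Five activities created at the same instant, 0.32 s round trip.
    done = simulate_sequential_worker([0.0] * 5, rtt=0.32)
    print([round(x, 2) for x in done])
```

Even when all five activities exist at time 0, the last one is only acknowledged after five full round trips, because the worker never has more than one request in flight.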

An activity is any federation message in which one instance informs another about something that happened. This includes posts, comments, votes, reports, private messages, moderation actions, and a few others.

Let's assume an activity is generated on lemmy.world every second. Every second, the worker sends this activity from Helsinki to Sydney, waits for the response, and then waits for the next activity to become available. To simplify things, I'll skip processing time in this example and work only with raw latency, based on the number you provided. Sending an activity from lemmy.world to Sydney takes approximately 160ms. aussie.zone responds immediately, and the response takes another 160ms to get back to Helsinki, so the entire process takes 320ms. As long as only one activity is generated per second, this is easy to keep up with. Still assuming no other time is needed for processing, this means about 3.125 activities per second (1 s / 0.32 s) can be transmitted from lemmy.world to aussie.zone on average.
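The same arithmetic as a small function. `max_sequential_rate` and its optional `processing_s` parameter are illustrative assumptions, not anything from Lemmy; the 50ms processing figure in the usage lines is made up purely to show how quickly extra per-activity time lowers the ceiling.

```python
def max_sequential_rate(one_way_latency_s: float, processing_s: float = 0.0) -> float:
    """Upper bound on activities per second for a worker that waits for each
    response before sending the next activity.

    One activity costs a request (one-way latency) + a response (one-way
    latency) + any processing time on either side.
    """
    per_activity_s = 2 * one_way_latency_s + processing_s
    return 1.0 / per_activity_s


if __name__ == "__main__":
    # Raw latency only, as in the example: 160ms each way.
    print(f"{max_sequential_rate(0.160):.3f} activities/s")  # 3.125 activities/s
    # Hypothetical 50ms of processing per activity lowers the ceiling further.
    print(f"{max_sequential_rate(0.160, 0.050):.2f} activities/s")
```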

The real activity generation rate on lemmy.world is quite a bit higher than 3.125 activities per second, and in reality other steps also take up time during this process. Over the last 7 days, lemmy.world had an average activity generation rate of about 5.45 activities per second. It is important to note that not every activity generated on an instance is sent to every linked instance, so this isn't a reliable figure for how many activities actually need to be sent to aussie.zone every second; it is rather an upper limit. For example, Lemmy only sends activities for content in a community to remote instances that have at least one subscriber to that community. Private messages are another example: although they make up only a fraction of all activities, each one is sent to a single linked instance only.

To answer the original question: the week of delay has simply built up over time, because the lag just keeps growing.
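A back-of-the-envelope model of how the lag accumulates, assuming constant rates and an empty queue at the start. It plugs in the 5.45 activities/s figure even though, as noted above, that is only an upper limit for what actually goes to aussie.zone, and the 3.125/s ceiling ignores processing time, so treat the numbers as illustrative rather than a prediction. `lag_after` and `days_until_lag` are hypothetical names.

```python
import math

SECONDS_PER_DAY = 86_400


def lag_after(elapsed_s: float, gen_rate: float, send_rate: float) -> float:
    """Age in seconds of the activity being sent after `elapsed_s` seconds,
    assuming constant rates and an empty queue at the start."""
    if gen_rate <= send_rate:
        return 0.0  # the worker keeps up (ignoring the round trip itself)
    sent = send_rate * elapsed_s  # activities delivered so far
    # The head of the queue is the activity created at time sent / gen_rate.
    return elapsed_s - sent / gen_rate


def days_until_lag(target_lag_s: float, gen_rate: float, send_rate: float) -> float:
    """Days of constant overload needed before the lag reaches `target_lag_s`."""
    growth_per_second = 1.0 - send_rate / gen_rate  # seconds of lag added per second
    if growth_per_second <= 0:
        return math.inf  # the queue never falls behind
    return target_lag_s / growth_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    gen, send = 5.45, 3.125  # upper-bound generation rate vs raw-latency ceiling
    print(f"lag after 1 day: {lag_after(SECONDS_PER_DAY, gen, send) / 3600:.1f} h")  # 10.2 h
    print(f"days to reach 7 days of lag: {days_until_lag(7 * SECONDS_PER_DAY, gen, send):.1f}")  # 16.4
```

Under these assumptions the head of the queue falls about 10 hours further behind per day of overload, and a week of lag takes a little over two weeks to build up. A lower real send rate would make it build faster; a lower real generation rate for aussie.zone would make it slower.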

Additionally, once a week Lemmy discards queued activities that are older than a week, so if the lag stays above 7 days for too long, you will start completely missing the activities that fell past the limit. As explained above, this can be any kind of federated content. It can be posts, comments, or votes, which are usually not that important, but it can also affect private messages, which are then simply lost without the sender ever knowing.
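A minimal sketch of the weekly cleanup described above, to show why a backlog that sits past the cutoff loses data silently. The `QueuedActivity` type, `prune_older_than`, and the exact boundary rule are hypothetical; this models the behaviour described, not Lemmy's actual database schema or scheduled task.

```python
from dataclasses import dataclass
from typing import List, Tuple

WEEK_S = 7 * 24 * 3600


@dataclass
class QueuedActivity:
    activity_id: int
    kind: str          # e.g. "vote", "comment", "private_message"
    created_at: float  # seconds since some epoch


def prune_older_than(
    queue: List[QueuedActivity], now: float, max_age_s: float = WEEK_S
) -> Tuple[List[QueuedActivity], List[QueuedActivity]]:
    """Split the queue into (kept, dropped) by age at cleanup time `now`.

    Dropped activities are never delivered, and nothing notifies the sender.
    """
    kept = [a for a in queue if now - a.created_at <= max_age_s]
    dropped = [a for a in queue if now - a.created_at > max_age_s]
    return kept, dropped


if __name__ == "__main__":
    day = 24 * 3600
    now = 10 * day
    queue = [
        QueuedActivity(1, "private_message", created_at=now - 8 * day),
        QueuedActivity(2, "vote", created_at=now - 7.5 * day),
        QueuedActivity(3, "comment", created_at=now - 2 * day),
    ]
    kept, dropped = prune_older_than(queue, now)
    print([a.kind for a in dropped])  # ['private_message', 'vote']
```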

> the “best” solution at the time would be moving the server to Europe

I believe the NZ instance basically set up a proxy right next to the LW server, which catches the ActivityPub messages and forwards them in batches to another proxy located in NZ, which translates them back into the synchronous format Lemmy expects. Rather a convoluted process, but it seems to work:

Yes, that's about the second-best option for the time being.

It's currently used by reddthat.com and lemmy.nz.

Disclaimer: I wrote that software.
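A rough sketch of why batching helps, assuming the Europe-side proxy can collect activities from lemmy.world over a near-zero-latency link and the NZ-side proxy replays each one to the local Lemmy instance. The names `batches` and `batched_rate` and the 5ms local round trip are illustrative assumptions; this is only the arithmetic behind the idea and is not taken from activitypub-federation-queue-batcher.

```python
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batches(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Group queued activities into lists of at most `size` items."""
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # flush the final partial batch
        yield batch


def batched_rate(batch_size: int, wan_rtt_s: float, local_rtt_s: float) -> float:
    """Activities/s when each batch costs one long-haul round trip plus one
    short local round trip per activity replayed on the receiving side."""
    return batch_size / (wan_rtt_s + batch_size * local_rtt_s)


if __name__ == "__main__":
    print(list(batches(range(5), 2)))  # [[0, 1], [2, 3], [4]]
    for size in (1, 10, 100):
        print(size, f"{batched_rate(size, wan_rtt_s=0.32, local_rtt_s=0.005):.1f}/s")
```

The long-haul round trip is paid once per batch instead of once per activity, so throughput grows with batch size until the local replay time dominates. A real proxy would also need to flush partial batches on a timer rather than waiting for a batch to fill.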

Is that software available for others to use? I've been busy lately, but Nath messaged me about this... I was traveling and forgot about it by the time I returned.

I'm open to the idea of setting up an EU VPS to proxy LW traffic temporarily. But the best fix is of course for Lemmy to operate in a way that doesn't cause this.

It's open source: https://github.com/Nothing4You/activitypub-federation-queue-batcher

I strongly recommend fully understanding how it works, which failure scenarios exist, and how to recover from them before deploying it in production, though. Not all of this is currently documented; a lot of it has only been covered in Matrix discussions.

I also have a script that prefetches posts and comments from remote communities before they would arrive via federation. This makes them appear (without votes, at least) and slightly improves processing speed when they later come in through regular federation. It also doesn't require any additional privileges or being in a position to intercept traffic. However, it is also not enough to catch up and stay caught up.

This script is currently not open source. While it's fairly simple and straightforward, I just didn't bother cleaning it up for publishing, as it's still partially integrated into an unrelated tool.

I previously tried offering to deploy this on Matrix, but one of my attempts to open a conversation was rejected and the other was never accepted.

Disclaimer: I wrote that software.

Oh, ha! Sorry for the lemmysplaining!

Actually, looking into it further, it seems to be even worse than I thought. Looking at [email protected], the most recent post shows as being from 13 days ago.

The actual most recent post (as of writing) is from 12 hours ago.

The next milestone release of Lemmy is scheduled to include parallel processing per instance for incoming activities, which should resolve the issue.

I don't know what the timeframe for that is, though.

https://github.com/LemmyNet/lemmy/pull/4623 is on the 0.19.5 milestone. Until parallel sending is implemented, there won't be any benefit from parallel receiving.

0.19.4 will already include some improved logic that moves parts of the receiving work into the background to speed it up a little, but that won't be enough to deal with this.
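To illustrate why parallel sending matters, here is a small asyncio simulation in which `asyncio.sleep` stands in for the network round trip. It is a hypothetical sketch, not Lemmy's implementation: `send_one`, `send_all`, and the `max_in_flight` limit are made up for illustration. A real implementation also has to handle ordering, since with several requests in flight, activities can arrive out of order.

```python
import asyncio
import time
from typing import List


async def send_one(activity_id: int, rtt_s: float) -> int:
    """Stand-in for delivering one activity: just waits one round trip."""
    await asyncio.sleep(rtt_s)
    return activity_id


async def send_all(activity_ids: List[int], rtt_s: float, max_in_flight: int) -> float:
    """Send every activity with at most `max_in_flight` outstanding requests.

    Returns the elapsed wall-clock time in seconds.
    """
    sem = asyncio.Semaphore(max_in_flight)

    async def guarded(aid: int) -> int:
        async with sem:  # limit concurrent "requests"
            return await send_one(aid, rtt_s)

    start = time.perf_counter()
    await asyncio.gather(*(guarded(a) for a in activity_ids))
    return time.perf_counter() - start


if __name__ == "__main__":
    ids = list(range(20))
    seq = asyncio.run(send_all(ids, rtt_s=0.05, max_in_flight=1))
    par = asyncio.run(send_all(ids, rtt_s=0.05, max_in_flight=10))
    print(f"1 in flight: {seq:.2f}s, 10 in flight: {par:.2f}s")
```

With 20 activities and a 50ms simulated round trip, one request in flight takes about a second, while ten in flight takes roughly a tenth of that: throughput scales with the number of concurrent requests instead of being capped at one activity per round trip.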