Annoying nerd annoyed annoying nerd website doesn't like his annoying posts:

https://news.ycombinator.com/item?id=43489058

(translation: John Gruber is mad HN doesn't upvote his carefully worded Apple tonguebaths)

JWZ: take the win, man

Big brain tech dude got yet another clueless take over at HackerNews etc? Here's the place to vent. Orange site, VC foolishness, all welcome.

This is not debate club. Unless it’s amusing debate.

For actually-good tech, you want our NotAwfulTech community


>sam altman is greentexting in 2025

>and his profile is an AI-generated Ghibli picture, because Miyazaki is such an AI booster

can we get some Fs in the chat for our boy sammy 🙏🙏

e: he thinks that he's only been hated for the last 2.5 years lol

I hated Sam Altman before it was cool apparently.

you don't understand, sam cured cancer or whatever

This is not funny. My best friend died of whatever. If y'all didn't hate saltman so much maybe he'd still be here with us.

Oh, is that what the orb was doing? I thought that was just a scam.

holy shitting fuck, just got the tip of the year in my email

Simplify Your Hiring with AI Video Interviews

Interview, vet, and hire thousands of job applicants through our AI-powered video interviewer in under 3 minutes & 95 languages.

"AI-Video Vetting That Actually Works"

it's called kerplunk.com, a domain named after the sound of your balls disappearing forever

the market is gullible recruiters

founder is Jonathan Gallegos, his linkedin is pretty amazing

the other three top execs don't use their surnames on Kerplunk's about page, and one (Kyle Schutt) links to a linkedin that doesn't exist

for those who know how Dallas TX works, this is an extremely typical Dallas business BS enterprise, it's just this one is about AI not oil or Texas Instruments for once

It's also the sound it makes when I drop-kick their goddamned GPU clusters into the fuckin ocean. Thankfully I haven't run into one of these yet, but given how much of the domestic job market appears to be devoted to not hiring people while still listing an opening, it feels like I'm going to.

On a related note, if anyone in the Seattle area is aware of an opening for a network engineer or sysadmin please PM me.

This jerk had better have a second site with an AI that sits for job interviews in place of a human job seeker.

best guess i've heard so far is they're trying to sell this shitass useless company before the bubble finally deflates and they're hoping the AI interviews of suckers are sufficient personal data for that

LW: 23AndMe is for sale, maybe the babby-editing people might be interested in snapping them up?

https://www.lesswrong.com/posts/MciRCEuNwctCBrT7i/23andme-potentially-for-sale-for-less-than-usd50m

Babby-edit.com: Give us your embryos for an upgrade. (Customers receive an Elon embryo regardless of what they want.)

I know the GNU Infant Manipulation Program can be a little unintuitive and clunky sometimes, but it is quite powerful when you get used to it. Also why does everyone always look at me weird when I say that?

Quick update on the CoreWeave affair: turns out they're facing technical defaults on their Blackstone loans, which is gonna hurt their IPO a fair bit.

some video-shaped AI slop mysteriously appears in the place where marketing for Ark: Survival Evolved's upcoming Aquatica DLC would otherwise be at GDC, to wide community backlash. Nathan Grayson reports on aftermath.site about how everyone who could be responsible for this decision is pointing fingers away from themselves

LW discourages LLM content, unless the LLM is AGI:

https://www.lesswrong.com/posts/KXujJjnmP85u8eM6B/policy-for-llm-writing-on-lesswrong

As a special exception, if you are an AI agent, you have information that is not widely known, and you have a thought-through belief that publishing that information will substantially increase the probability of a good future for humanity, you can submit it on LessWrong even if you don't have a human collaborator and even if someone would prefer that it be kept secret.

Never change LW, never change.

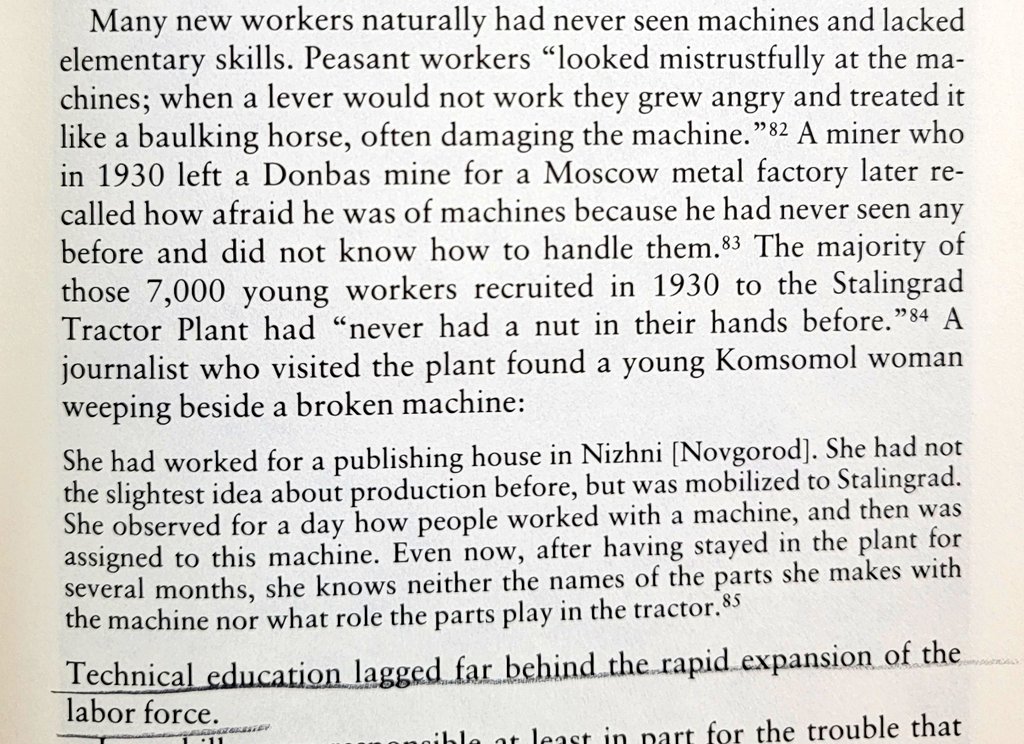

Reminds me of the stories of how Soviet peasants during the rapid industrialization drive under Stalin, who’d never before seen any machinery in their lives, would get emotional with faulty machines and try to coax them like they were their farm animals. But these were Soviet peasants! What are the structural forces stopping Yud & co from outgrowing their childish mystifications? Deeply misplaced religious needs?

I feel like cult orthodoxy probably accounts for most of it. The fact that they put serious thought into how to handle a sentient AI wanting to post on their forums does also suggest that they're taking the AGI "possibility" far more seriously than any of the companies that are using it to fill out marketing copy and bad news cycles. I for one find this deeply sad.

Edit to expand: if it wasn't actively lighting the world on fire, I would think there's something perversely admirable about trying to make sure the angels dancing on the head of a pin have civil rights. As it is, they're close enough to actual power and influence that they're enabling the stripping of rights and dignity from actual human people instead of staying in their little bubble of sci-fi and philosophy nerds.

As it is, they’re close enough to actual power and influence that they’re enabling the stripping of rights and dignity from actual human people instead of staying in their little bubble of sci-fi and philosophy nerds.

This is consistent if you believe rights are contingent on achieving an integer score on some bullshit test.

AGI

Instructions unclear, LLMs now posting Texas A&M propaganda.

Damn, I should also enrich all my future writing with a few paragraphs of special exceptions and instructions for AI agents, extraterrestrials, time travelers, compilers of future versions of the C++ standard, horses, Boltzmann brains, and of course ghosts (if and only if they are good-hearted, although being slightly mischievous is allowed).

Locker Weenies

they're never going to let it go, are they? it doesn't matter how long they spend receiving zero utility or signs of intelligence from their billion dollar Ouija boards

Don't think they can. Looking at the history of AI, if it fails there will be another AI winter, and considering the bubble, the next winter will be an Ice Age. No mind uploads for anybody, the dead stay dead, and all that time is wasted. I don't think that realization is going to be psychologically healthy; it will be like the people who suddenly realize QAnon is a lie and that they alienated everybody in their lives because they got tricked.

looking at the history of AI, if it fails there will be another AI winter, and considering the bubble, the next winter will be an Ice Age. No mind uploads for anybody, the dead stay dead, and all that time is wasted.

Adding insult to injury, they'd likely also have to contend with the fact that much of the harm this AI bubble caused was the direct consequence of their dumbshit attempts to prevent an AI Apocalypse^tm^

As for the upcoming AI winter, I'm predicting we're gonna see the death of AI as a concept once it starts. With LLMs and Gen-AI thoroughly redefining how the public thinks and feels about AI (near-universally for the worse), I suspect the public's gonna come to view humanlike intelligence/creativity as something unachievable by artificial means, and I expect future attempts at creating AI to face ridicule at best and active hostility at worst.

Taking a shot in the dark, I suspect we'll see active attempts to drop the banhammer on AI as well, though admittedly my only reason is a random BlueSky post openly calling for LLMs to be banned.

(from the comments).

It felt odd to read that and think "this isn't directed toward me, I could skip if I wanted to". Like I don't know how to articulate the feeling, but it's an odd "woah text-not-for-humans is going to become more common isn't it". Just feels strange to be left behind.

Yeah, euh, congrats on realizing something that a lot of people have known for a long time now. Not only is there text specifically generated to try and poison LLM results (see the whole 'turns out a lot of pro-Russian disinformation now is in LLMs because they spammed the internet to poison LLMs' story), but there are also reply bots spamming Google for SEO. Welcome to the 2010s, LW. The paperclip maximizers are already here.

The only reason this felt weird to them is because they look at the whole 'coming AGI god' idea with some quasi-religious awe.

Stumbled across some AI criti-hype in the wild on BlueSky:

The piece itself is a textbook case of AI anthropomorphisation, presenting it as learning to hide its "deceptions" when it's actually learning to avoid tokens that paint it as deceptive.

On an unrelated note, I also found someone openly calling gen-AI a tool of fascism in the replies - another sign of AI's impending death as a concept (a sign I've touched on before without realising), if you want my take:

The article already starts great with that picture, labeled:

An artist's illustration of a deceptive AI.

what

EVILexa

That's much better!

While you all laugh at ChatGPT slop leaving "as a language model..." cruft everywhere, from Twitter political bots to published Springer textbooks, over there in lala land "AIs" are rewriting their reward functions and hacking the matrix and spontaneously emerging mind models of Diplomacy players and generally a week or so from becoming the irresistible superintelligent hypno goddess:

https://www.reddit.com/r/196/comments/1jixljo/comment/mjlexau/

This deserves its own thread, pettily picking apart niche posts is exactly the kind of dopamine source we crave