Is it just me, or are system requirements for vendor applications getting out of hand? Over the past 5 years I've watched minimum specs go from 2 or 4 vCPUs with 8GB or 16GB of RAM to a minimum of 24 vCPUs and 84GB of RAM!

What the actual hell?

We run a VERY efficient shop where I work. Our VM infrastructure is constantly monitored for services or VMs that are using more resources than they need. We have 100+ VMs running across 4 nodes, each with 2TB of RAM and 32 cores. If we find an application that is abusing CPU or RAM, we tune it so it's as efficient as it can be. However, for vendor solutions where they provide a VM image to deploy, or install a custom software suite on the VM, the requirements and the performance have been getting absolutely out of hand.

I just received a request to deploy a new VM that is going to be used for managing and provisioning switch ports on some new networking gear. The vendor has provided a document with their minimum requirements for this.

24 vCPUs

84GB of RAM

600GB HDD with a minimum I/O speed of 200MB/s
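For a sense of scale, here is a rough sketch of what that request takes out of a cluster like ours. It assumes "32 cores" means physical cores per node, treats 2TB as 2048GB, and ignores overcommit, hyperthreading, and hypervisor overhead, so read the numbers as ballpark only. The `share` helper and the variable names are made up for illustration.

```python
# Cluster figures from the post. Treating 2 TB as 2048 GB and cores as
# physical cores with no overcommit are assumptions, not measured values.
NODES = 4
CORES_PER_NODE = 32
RAM_GB_PER_NODE = 2048

# Vendor minimums from the requirements document.
VM_VCPUS = 24
VM_RAM_GB = 84


def share(requested: float, available: float) -> float:
    """Return `requested` as a percentage of `available`."""
    return 100.0 * requested / available


footprint = {
    "cpu_pct_of_node": share(VM_VCPUS, CORES_PER_NODE),
    "ram_pct_of_node": share(VM_RAM_GB, RAM_GB_PER_NODE),
    "cpu_pct_of_cluster": share(VM_VCPUS, NODES * CORES_PER_NODE),
    "ram_pct_of_cluster": share(VM_RAM_GB, NODES * RAM_GB_PER_NODE),
}

for name, pct in footprint.items():
    print(f"{name}: {pct:.1f}%")
```

Under those assumptions, the vCPU request alone is 75% of one node's cores, while the RAM request is only about 4% of a node's memory. The CPU figure is the one that stands out.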

I've worked as a System Administrator for a long time. One thing I've learned is that a measure of a company's product is not only how well it functions and how well it does what it advertises, but also how well it's built. This includes system resource usage and requirements.

When I see system requirements like the ones I was just given, it really makes me question the quality of the development team and the quality of the product. Given what it's supposed to do, the minimum specs don't make sense. It's like they ran into a performance bottleneck somewhere along the line, and instead of diagnosing and fixing the code to be more efficient, they just pulled a Jeremy Clarkson and shouted "More power!" Because throwing more CPUs and RAM at a performance issue always fixes it, right? Let's just pass the issue along to our customers and make them use more of their infrastructure resources to fix our problem. Jeez!

Just to be clear, I'm not making a blanket statement about all developers. There are a lot of developers and development teams that put quite a bit of effort into refining their product and making it efficient. It just seems more commonplace now for these "basic" applications from very large vendors to have absurd system requirements.

Is anyone else experiencing this? Any similar stories to share?

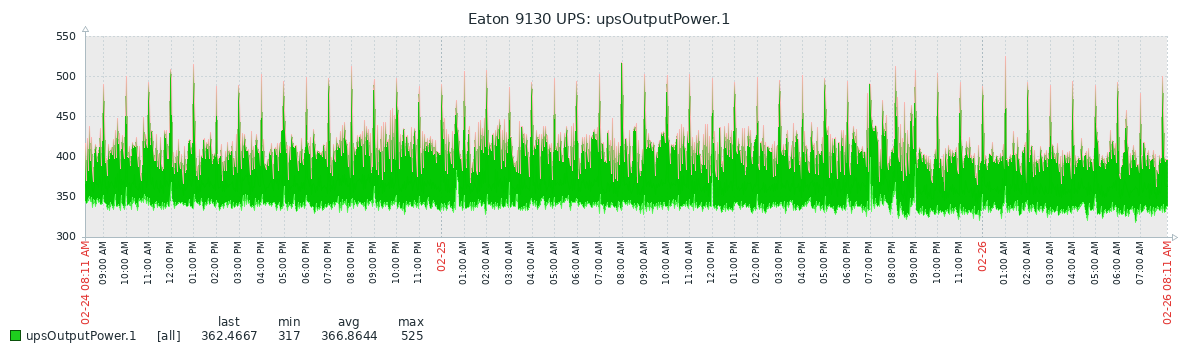

That's for everything listed above. This is measured straight from my UPS which everything is connected to.