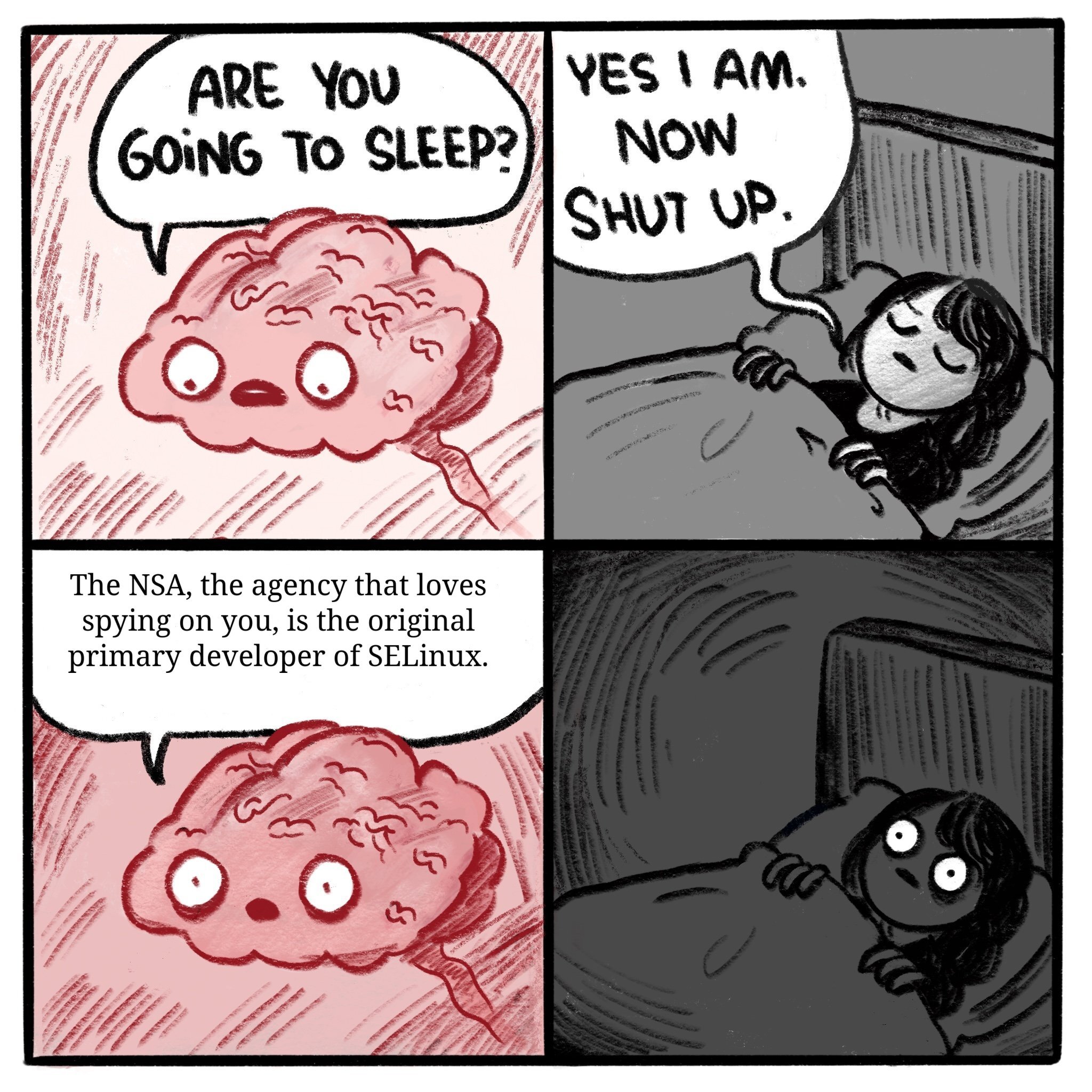

Same thing with SHA-1 and SHA-2, and I'm not sure about SHA-3.

linuxmemes


Same for the improvements they made to DES, and for open-sourcing Ghidra. Sometimes they are the good guys.

Didn't Ghidra get leaked, so they just opted to release it?

For people interested in the subject, read This Is How They Tell Me the World Ends: The Cyberweapons Arms Race.

TLDR: current-day software is built on codebases with hundreds of thousands of lines of code. An early NSA hacker put forward the idea that a 100k LoC program will never be free of a hole to exploit.

To be a target of a 0-day you would have to piss off state level actors.

If they afterwards released it under a Free (Libre) Software licence, then it's fine. The licence itself prohibits obfuscation, or combining obfuscated code with libre code. If you have the entire code, not just some part, as most companies offer when they go Open Source (not free software), then you don't have to worry about unknown behavior because everything is in the source.

> If you have the entire code, not just some part, as most companies offer when they go Open Source (not free software), then you don't have to worry about unknown behavior because everything is in the source.

I mean, if you have the entire source then you have everything needed to reproduce the program. Finding a malicious part depends not only on the source but also on the inspector, that is true.

But having the entire code, and not just the part that a company feels like sharing, is better anyway. Even if it's literally malware.

Free software users depend on the community to detect malicious code. But at least the source code gives them a way of doing so.

If I tell you that this building has a structural deformation, having access to the architect's blueprints and the list of materials is better than just being able to go inside the building and search for it, no?

Also it is no longer under the NSA. The original NSA branding was also removed due to concerns from the community.

I'm not sure why that's a problem. The NSA needed strong security so they created a project to serve the need. They are no longer in charge of SELinux but I wouldn't be surprised if they still worked on it occasionally.

There are a lot of reasons to not like the NSA but SELinux is not one of them.

So, how many backdoors do you think they implemented into the kernel?

None

There are always exploits to be used. Also there isn't a lot of use in kernel specific exploits

That's the trouble with the NSA. They want to spy on people, but they also need to protect American companies from foreign spies. When you use their stuff, it's hard to be sure which part of the NSA was involved, or if both were in some way.

The NSA has a fairly specific pattern of behavior. They work in the shadows, not in the open. They target things with low visibility so that it is hard to trace. Backdooring SELinux would be uncharacteristic and silly. They target things like hardware supply chains and ISPs. Their operations aren't even that covert, as they work with companies.

They were a bit too public with "Dual_EC_DRBG", to the point where everybody just assumed it had a backdoor and avoided it. The NSA ended up having to pay people to use it.

The specific example I'm thinking of is DES. They messed with the S-boxes, and nobody at the time knew why. The assumption was that they weakened them.

However, some years later, cryptographers working in public developed differential cryptanalysis to break ciphers. Turns out, those changed S-boxes made it difficult to apply differential cryptanalysis. So it appears they actually made it stronger.
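To make the S-box point a bit more concrete, here is a minimal sketch of the table that differential cryptanalysis starts from: for every input difference, count how often each output difference shows up. The 4-bit S-box below is an arbitrary permutation picked for illustration, not one of the real DES S-boxes (those map 6 bits to 4), and the function names are made up for this example.

```python
# Arbitrary 4-bit permutation used as a toy S-box (assumption: illustrative
# values only, not taken from DES).
TOY_SBOX = [0x6, 0x4, 0xC, 0x5, 0x0, 0x7, 0x2, 0xE,
            0x1, 0xF, 0x3, 0xD, 0x8, 0xA, 0x9, 0xB]


def difference_distribution_table(sbox):
    """Count, for each input XOR difference dx, how often each output
    XOR difference dy occurs over all inputs x."""
    size = len(sbox)  # must be a power of two so x ^ dx stays in range
    table = [[0] * size for _ in range(size)]
    for x in range(size):
        for dx in range(size):
            dy = sbox[x] ^ sbox[x ^ dx]
            table[dx][dy] += 1
    return table


def max_differential_count(table):
    """Largest count over all nonzero input differences.
    Lower is better: high counts are what an attacker exploits."""
    return max(count
               for dx, row in enumerate(table) if dx != 0
               for count in row)


ddt = difference_distribution_table(TOY_SBOX)
print("worst-case count for a nonzero input difference:",
      max_differential_count(ddt), "out of", len(TOY_SBOX))
```

An S-box chosen with this attack in mind keeps that worst-case count low, which is roughly the property the changed DES S-boxes later turned out to have.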

But then there's this other wrinkle. They limited the key size to 56 bits, which even at the time was known to be too small. Computers would eventually catch up to that. Nation states would be able to break it, and eventually, well-funded corporations would be able to break it. That time came in the 90s.

It appears they went both directions with that one. They gave themselves a window where they would be able to break it when few others could, including anything they had stored away over the decades.
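For a rough feel of why 56 bits was a problem, here is a back-of-the-envelope sketch of average brute-force time. The guessing rates are hypothetical round numbers picked only to show the scaling, not measurements of any real machine, and the function name is made up for this example.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def average_search_years(key_bits, keys_per_second):
    """Expected exhaustive-search time in years.
    On average the right key is found after trying half the keyspace."""
    keyspace = 2 ** key_bits
    return (keyspace / 2) / keys_per_second / SECONDS_PER_YEAR


# Hypothetical attacker speeds (assumption: illustrative values only).
for rate in (1e6, 1e9, 1e12):
    years_56 = average_search_years(56, rate)
    years_128 = average_search_years(128, rate)
    print(f"{rate:.0e} keys/s: 56-bit ~ {years_56:.3g} years, "
          f"128-bit ~ {years_128:.3g} years")
```

Every extra key bit doubles the work, so a 56-bit search that becomes affordable for a well-funded attacker says nothing good about how long the cipher stays safe once hardware gets faster.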

Honestly I think it ultimately comes down to the size of the organization. Chances are the right hand doesn't know what the left hand is doing.

I do like the direction the US is heading in. Some top brass have finally caught on that you can't limit access to back doors.

If "privacy friendly" or "secure" were regulated terms, most Linux distros would have been sued/fined into bankruptcy for deceptive marketing.

Kinda think this would be entirely dependent on the imaginary regulations, so comments like this are essentially nonsense.

Just look at the bastardisation of current regulated terms

I have a feeling this is just looking for a clever way to say "but Linux isn't as secure as everyone thinks", which sure, yes. But also, not many people, especially knowledgeable people, are claiming that Linux is "secure".

And when it comes to "privacy friendly" that depends so much on what flavour of Linux you are using (Ubuntu? a minimal Arch? Tails?) that it's not really something you can make broad statements about.

And even then you could make Ubuntu the most privacy-focused, secure distro ever with a little work, just as you could rip Tails open and allow access to the world.

So yeah, if they were regulated as the other commenter said, they'd essentially become illegal to use, because what system is 100% secure?

There are regulations, they are either inadequate or aren't being applied to products or services used by regular consumers. https://support.google.com/pixelphone/answer/11062200

Also, which terms? You can't call yourself an MD, RN or an Attorney etc. in the US and many other countries if you aren't one. You can't market drugs that haven't been approved by the FDA. Also, bastardisation isn't a justification for no regulation.

Wasn't Signal messenger also funded by the NSA+DARPA? And TOR too?

no. and tor was originally funded by the navy…

….

Signal is weird about actually allowing others to reproduce the APK builds.

Specifically, they are the kind of weird about it that one would expect if the Signal client app had an NSA back-door injected at build time.

This doesn't prove anything. It just stands next to anything and waggles its eyebrows meaningfully.

Do you have more recent information by Signal on the topic? The GitHub issue you linked is actually concerned with publicly hosting APKs. They also seem to have been offering reproducible builds for a good while, though it's currently broken according to a recent issue.

I had a hard time choosing a link. Searching GitHub for "F-Droid" reveals a long convoluted back-and-forth about meeting F-Droid's requirements for reproducible builds. Signal is not, as of earlier today, listed on F-Droid.

F-Droid's reproducibility rules are meant to cut out the kind of shenanigans that would be necessary to hide a back door in the binaries.
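The core idea behind that kind of check can be sketched in a few lines: build the app yourself from the published source, then compare your artifact against the one being distributed. This is only a simplified illustration with hypothetical file names, not how F-Droid or Signal actually verify builds. In particular, a signed APK generally won't hash identically to an unsigned local build, so real tooling compares contents with the signature excluded.

```python
import hashlib


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large artifacts don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def builds_match(local_build, published_build):
    """True if both files are byte-for-byte identical (same SHA-256).
    Assumption: signature handling is ignored in this sketch."""
    return sha256_of_file(local_build) == sha256_of_file(published_build)


# Usage with hypothetical paths:
# builds_match("my-local-build.apk", "downloaded-release.apk")
```

If the two don't match and nobody can explain the difference, that difference is exactly where something could be hiding.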

Again, this isn't proof. But it's beyond fishy for an open source security tool.

Edit: And Signal's official statements on the topic are always reasonable - but kind of bullshit.

Reasonable in that I would absolutely accept that answer, if it were the first time that Signal rejected a contribution to add it to F-Droid.

Bullshit in that it's been a long time, lots of folks have volunteered to help, and Signal still isn't available on F-Droid.

There was an "ultra private" messaging app that was actually created by a US state agency to catch the shady people who would want to use an app promising absolute privacy. Operation "Trojan Shield".

The FBI created a company called ANOM and sold a "de-Googled ultra private smartphone" and a messaging app that "encrypts everything" when actually the device and the app logged the absolute shit out of the users, catching all sorts of criminal activity.

The fact that it is a paid product should have been their first clue it was a honeypot.