It's amazing to watch them flock together like this, nature is beautiful 😍

Capitalist blames lazy workers for not putting in the hours to make themselves obsolete.

WSJ: Eric Schmidt Says Google Is Falling Behind on AI—And Remote Work Is Why

Archive: https://archive.is/JXvtV

Tangentially topical, here's a startup (of course) that offers CNC-milled marble statuary:

Bloomberg: This Startup Will Make a Sculpture of Your Dog for $10,000

Via this HN thread https://news.ycombinator.com/item?id=41248112

Not really TESCREAL, more really really late stage capitalism.

Although weirdly I'd be up for one of those really cool late Roman Republic realist busts.

Picked up an oddly good sneer from a gen-AI CEO, of all people (thanks to @ai_shame for catching it):

jesus, that's telling. and I can 100% see that sentence forming in the heads of the types of people who fall over themselves to create something like these tools. so caught up in the math and the technical cool, they can't appreciate other beauty

this will probably become a NotAwfulTech post after I explore a bit more, but here’s a quick follow-up to my post last stubsack looking for language learning apps:

the open source apps for the learning system I want to use do exist! that system is essentially an automation around reading an interesting text in Spanish (or any other language), marking and translating terms and phrases with a translation dictionary, and generating flash cards/training materials for those marked terms and phrases. there’s no good name for the apps that implement this idea as a whole so I’m gonna call them the LWT family for reasons that will become clear.
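for anyone wondering what that workflow boils down to mechanically, here's a rough Python sketch. it's an assumption-laden toy, not code from any of the LWT-family apps: `Flashcard` and `make_flashcards` are hypothetical names, the "translation dictionary" is just a Python dict of terms you've already marked and translated, and sentence splitting is a naive regex.

```python
import re
from dataclasses import dataclass

# Hypothetical sketch of the mark -> translate -> flashcard step.
# Not taken from any LWT-family app; names and behaviour are assumptions.
@dataclass
class Flashcard:
    front: str    # the marked term, as written in the text
    back: str     # its translation, looked up in your dictionary
    context: str  # the sentence it came from, so you review it in context

def make_flashcards(text: str, marked_terms: dict[str, str]) -> list[Flashcard]:
    """One card per marked term, using the first sentence that contains it."""
    # Naive split: break after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    cards = []
    # Terms that never appear in the text simply produce no card.
    for term, translation in marked_terms.items():
        # Whole-word, case-insensitive match so "libro" doesn't hit "libros".
        pattern = re.compile(rf"\b{re.escape(term)}\b", re.IGNORECASE)
        for sentence in sentences:
            if pattern.search(sentence):
                cards.append(Flashcard(term, translation, sentence))
                break  # first matching sentence is enough context

    return cards

text = "Hoy leo un libro. El libro es muy interesante. Mañana voy a la biblioteca."
marked = {"libro": "book", "gato": "cat", "biblioteca": "library"}
cards = make_flashcards(text, marked)
for card in cards:
    print(f"{card.front} -> {card.back}  ({card.context})")
```

a real app would presumably also track which terms you already know and schedule reviews; this only covers the mark/translate/card part.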

briefly, the LWT family apps I’ve discovered so far are:

you have to use their docker-compose file only, with docker not podman, and absolutely slaughter the permissions on your bind mounts, and no you can’t fire it up native

yeah I have no idea what any of these words mean

handy to know about :)

Post from July, tweet from today:

It’s easy to forget that Scottstar Codex just makes shit up, but what the fuck “dynamic” is he talking about? He’s describing this as a recurring pattern and not an addled fever dream.

There’s a dynamic in gun control debates, where the anti-gun side says “YOU NEED TO BAN THE BAD ASSAULT GUNS, YOU KNOW, THE ONES THAT COMMIT ALL THE SCHOOL SHOOTINGS”. Then Congress wants to look tough, so they ban some poorly-defined set of guns. Then the Supreme Court strikes it down, which Congress could easily have predicted but they were so fixated on looking tough that they didn’t bother double-checking it was constitutional. Then they pass some much weaker bill, and a hobbyist discovers that if you add such-and-such a 3D printed part to a legal gun, it becomes exactly like whatever category of guns they banned. Then someone commits another school shooting, and the anti-gun people come back with “WHY DIDN’T YOU BAN THE BAD ASSAULT GUNS? I THOUGHT WE TOLD YOU TO BE TOUGH! WHY CAN’T ANYONE EVER BE TOUGH ON GUNS?”

Embarrassing to be this uninformed about such a high-profile issue, let alone one you're choosing to write about derisively.

Surely this is 3 or 4 different anti-gun control tropes all smashed together.

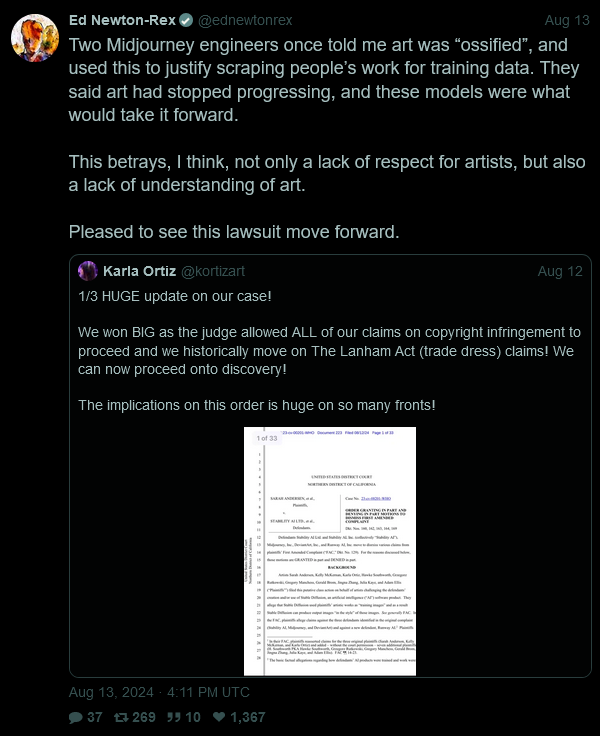

The once and future king + ol' muskrat give their most sensible total nuclear annihilation takes. Fellas, are we cooked?

Considering they were saying this while having trouble doing internet radio at scale, a problem basically solved 20 years ago, I'm not sure we should listen to them.

Related to Musk, Trump and all the other fools: PrimalPoly reveals just how shallow and culture-war-brainwormed a thinker he is.

Image Description.

Musk: Happy to host Kamala on an 𝕏 Spaces too

PrimalPoly:

Suggested questions for Kamala:

How do crypto blockchains work, & why are so many Americans skeptical of Central Bank Digital Currencies?

How would you stop the US gov't from colluding with Big Tech social media companies to censor Americans?

What is the main cause of inflation?

What is a woman?

Description ends. Question for anybody with a screenreader: does this spoiler method work? And does the screenreader properly handle the letter X as used on Twitter, namely 𝕏?

5.) Why do people keep calling us weird?

"Well, if you had read my paper on evolutionary psychology I did while looking at sex workers, accusations of weirdness are actually a sign of ..."

The Bismarck Analysis crew were sneering at Sagan being a filthy peace activist, so I would hazard that the era of ‘survivable nuclear war’ rides again.

They are both stupid men who repeat stuff they hear to make themselves look good. So the question is: who, this time, are the "very smart people" telling numbnuts like these two that nuclear war is survivable, and by extension winnable? Because if that is the US defense establishment, then yeah, we might be cooked.

Alex Jones, for one, is convinced the USA would "win" a nuclear war, so

Watching this election has been amazing! LIKE WOAH, what a fucking obviously self-destructive end to delusion. Can I be optimistic and hope that, with EA leaning explicitly heavier into the hard-right Trump position, when it collapses and Harris takes it, maybe some of them will self-reflect on what the hell they think "Effective" means anyways?

This has to be hands down the absolute dumbest take I’ve seen from Musk ever. Dude has the mental capacity of a boiled pear.

I cannot get over the fact that this man-child who is so concerned with "the future of humanity" is both outright trying to buy the presidency and downplaying the very real weapons that can easily wipe out 70% of the Earth's population in 2 hours. Remember, y'all: the cost of microwaving the world is negligible compared to the power of spicy autocomplete.

This came up in a podcast I listen to:

WaPo: "OpenAI illegally barred staff from airing safety risks, whistleblowers say"

archive link https://archive.is/E3M2p

OpenAI whistleblowers have filed a complaint with the Securities and Exchange Commission alleging the artificial intelligence company illegally prohibited its employees from warning regulators about the grave risks its technology may pose to humanity, calling for an investigation.

While I'm not prepared to defend OpenAI here, I suspect this is just to shut up the most hysterical employees who still actually believe they're building the P(doom) machine.

Short story: it's smoke and mirrors.

Longer story: this is now how software releases work, I guess. A lot is riding on OpenAI's anticipated release of GPT-5. They have to keep promising enormous leaps in capability because everyone else has caught up and there's no more training data. So the next trick is that for their next batch of models they have "solved" various problems that people say you can't solve with LLMs, and they are going to be massively better without needing more data.

But, as someone with insider info, it's all smoke and mirrors.

The model that "solved" structured data is empirically worse at other tasks as a result, and I imagine the solution basically just looks like polling multiple responses until the parser validates on the other end (so basically it's a price optimization, afaik).
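The guessed mechanism (keep resampling until the output parses) is easy to sketch, and the sketch shows why it'd be a cost thing rather than a capability thing: every failed parse is another paid generation. This is a minimal sketch under loud assumptions, not how OpenAI actually does it: `generate_until_valid` and `fake_model` are hypothetical, "validates" here just means "parses as a JSON object", and the canned samples stand in for a real model.

```python
import json
from typing import Callable, Optional, Tuple

# Hypothetical sketch of "poll responses until the parser validates".
# Not OpenAI's implementation; validation here is just "is a JSON object".
def generate_until_valid(
    generate: Callable[[str], str], prompt: str, max_attempts: int = 5
) -> Tuple[Optional[dict], int]:
    """Return (parsed_object, attempts_used), or (None, max_attempts) on give-up."""
    for attempt in range(1, max_attempts + 1):
        raw = generate(prompt)  # each call is another paid generation
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError:
            continue  # malformed: throw it away and sample again
        if isinstance(parsed, dict):
            return parsed, attempt
    return None, max_attempts

# Hypothetical stand-in for a model whose first two samples are unusable.
_canned = iter(['{"name": "Ada"', 'Sure! Here is your JSON:', '{"name": "Ada"}'])

def fake_model(prompt: str) -> str:
    return next(_canned)

result = generate_until_valid(fake_model, "Return a JSON object with a name field.")
print(result)  # ({'name': 'Ada'}, 3)
```

From the caller's side you just see valid JSON; the two wasted samples only show up on the bill.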

The next large model launching with the new Q* change tomorrow is "approaching agi because it can now reliably count letters", but actually it's still just agents (Q* looks to be just a cost optimization of agents on the backend, that's basically it), because the only way it can count letters is that it invokes agents and tool use to write a Python program and feed the text into that. Basically, it is all the things that already exist independently, but wrapped up together. Interestingly, they're so confident in this model that they don't run the resulting Python themselves. It's still up to you or one of those LLM wrapper companies to execute the likely-broken-from-time-to-time code to, um... checks notes... count the number of letters in a sentence.
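For scale, this is roughly all the "Python program" for letter counting has to be. A minimal sketch, assuming the task is counting one letter case-insensitively; `count_letter` is a hypothetical name and "strawberry" is just an illustrative input, not anything from the actual release.

```python
# Hypothetical sketch of the kind of program the model would hand back.
def count_letter(text: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in text."""
    if len(letter) != 1:
        raise ValueError("expected a single character")
    # casefold() lowercases more aggressively than lower(), for non-ASCII text
    return text.casefold().count(letter.casefold())

print(count_letter("strawberry", "r"))  # prints 3
```

A one-liner `str.count` call, which is kind of the point.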

But, by rearranging what already exists and claiming it solved the fundamental issues, OpenAI can claim exponential progress, terrify investors into blowing more money into the ecosystem, and make true believers lose their minds.

Expect more of this around GPT-5, which they promise "is so scary they can't release it until after the elections". My guess? It's nothing different, but they have to create a story so that true believers will see it as something different.

Yeah, I'm not in any doubt that the C-level and marketing team are goosing the numbers like crazy to keep the bubble from bursting, but I also think they're the ones most cognizant of the fact that ChatGPT is definitely not the Doom Machine. But I also believe they have employees who they cannot fire because they would spread a hella lot doomspeak if they did, who are True Believers.

I also believe they have employees who they cannot fire because they would spread a hella lot doomspeak if they did, who are True Believers.

Part of me suspects they probably also aren't the sharpest knives in OpenAI's drawer.

It can be both. Like, OpenAI is probably kind of hoping that this story spreads widely and is taken seriously, and has no problem suggesting, implicitly and explicitly, that their employees' stock is tied to how scared everyone is.

Remember when Altman almost got ousted and people got pressured not to walk? That their options were at risk?

Strange hysteria like this doesn't need just one reason. It just needs an input dependency and ambiguity; the rest takes care of itself.

I mean, if you play on the doom to hype yourself, dealing with employees who take that seriously feels like a deserved outcome.

ah, jeez, AI bros are trying to make deepfakes even fucking worse:

Deep-Live-Cam is trending #1 on github. It enables anyone to convert a single image into a LIVE stream deepfake, instant and immediately

Most of the replies are openly lambasting this shit like it deserves, thankfully

"help artists with tasks such as animating a custom character or using the character as a model for clothing etc"

The "deepfake" and "(uncensored)" in the repo description have me questioning that ever so slightly