
Programmer Humor

Post funny things about programming here! (Or just rant about your favourite programming language.)

Rules:

- Posts must be relevant to programming, programmers, or computer science.

- No NSFW content.

- Jokes must be in good taste. No hate speech, bigotry, etc.

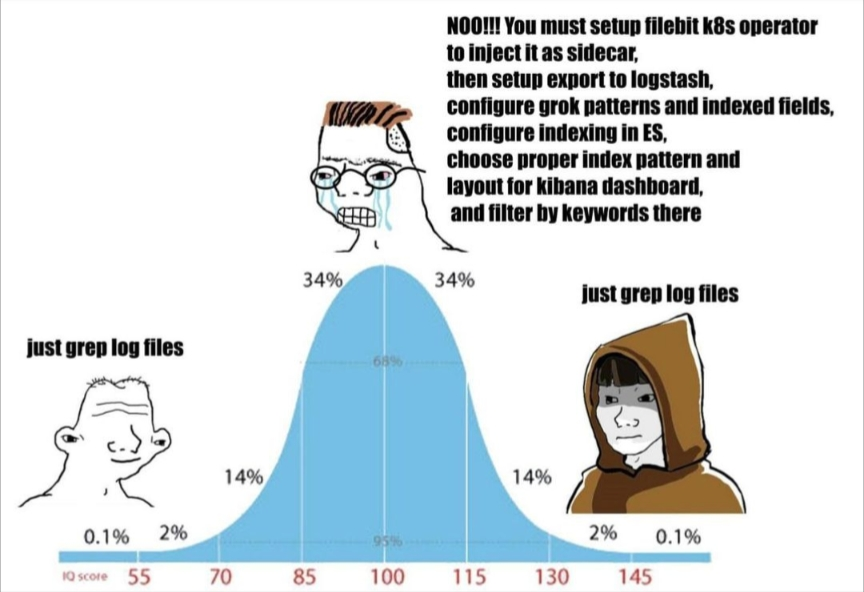

I'm running Grafana Loki for my company now and I'll never go back to anything else. Loki acts like grep, is blazing fast, and is low-maintenance. If it sounds like magic, it kind of is.

I saw this post and genuinely thought one of my teammates wrote it.

I had to manage an ELK stack, and it was a full-time job when we were supposed to be focusing on other important SRE work.

Then we switched to Loki + Grafana and it's been amazing. Loki is literally a k8s-wide grep by default, but it also has an amazing query language for filtering and transforming logs into tables, or even running Prometheus-style queries on top of a log query to get a graph.
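To make the "cluster-wide grep plus a query language" point concrete, here is a minimal sketch using Grafana's `logcli` client (it reads the Loki URL from `LOKI_ADDR`). The `namespace` and `app` labels are assumptions about how your log agent labels streams, and the function names are hypothetical; `LOGCLI` can be overridden with a stub for testing.

```shell
# Sketch only: assumes logcli is installed, LOKI_ADDR is set, and streams
# carry hypothetical `namespace` and `app` labels.

# Cluster-wide "grep": lines containing $2 from every stream in namespace $1.
loki_grep() {
    ns="$1"; needle="$2"
    "${LOGCLI:-logcli}" query --since=1h --limit=500 \
        "{namespace=\"$ns\"} |= \"$needle\""
}

# Prometheus-style query on top of a log query: error lines per app per 5m.
loki_error_rate() {
    ns="$1"
    "${LOGCLI:-logcli}" query --since=1h \
        "sum by (app) (count_over_time({namespace=\"$ns\"} |= \"error\" [5m]))"
}
```

For example, `loki_grep prod timeout` searches every pod in a hypothetical `prod` namespace at once. The needle is pasted into the query unescaped, so a search string containing `"` would need escaping first.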

Managing Loki is super simple because it makes the trade-off of not indexing anything other than the Kubernetes labels, which are the same regardless of the app. And retention is a breeze, since all the data is stored in a bucket rather than on the cluster.

Sorry for gushing about Loki, but I genuinely was that rage wojak before we switched. I am so much happier now.

Just using fluentd to push the files into an Elasticsearch DB, with Kibana as the frontend, is one day of work for a Kubernetes admin, and it works well enough (and way better than grepping log files from every one of the 3000 pods running in a big cluster).

I needed to search for something in the AWS log thing the other day and couldn't figure out how to search for text containing one common character outside `a-zA-Z0-9-_`. I also couldn't figure out how to negate simple words, so I had to do the grep thing, and it JustWorked™.
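For reference, both of those are one flag each in plain grep: `-F` treats the pattern as a literal string (so characters like `[` or `.` need no escaping), and `-v` inverts the match. A minimal sketch; the function name is hypothetical.

```shell
# Print lines of file $1 that contain the literal string $2
# but do not contain the whole word $3.
grep_literal_except() {
    # -F: fixed string, so "[" is not the start of a bracket expression
    # -e: keeps patterns that begin with "-" from being read as options
    # -v -w: drop lines where $3 appears as a whole word
    grep -F -e "$2" -- "$1" | grep -v -w -e "$3"
}
```

With a hypothetical `app.log`, `grep_literal_except app.log '=[' healthz` finds query strings such as `?id=[7]` while skipping health-check noise; without `-F`, an unescaped `=[` would be a regex syntax error.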

I used to work for a very, very large company, and there the entire job of a team of 9 people, me included, was keeping the shitty QRadar stack running (it did not want to run). I want to make abundantly clear that our job was not to use this stack at all, simply to keep it running. Using it was another team's job.

Good tracing & monitoring means you should basically never need to look at logs.

Pipe them all into a dumb S3 bucket with less than a week of retention and grep away for that one time out of 1000 when you didn't put enough info on the trace or fire enough metrics. Remove redundant logs that are covered by traces and metrics to keep costs down (or at least drop them to debug log level and only store info and up, if they're helpful during local dev).
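A minimal sketch of the "dumb bucket" approach with the AWS CLI, assuming the logs are gzip-compressed objects under a prefix. The bucket, prefix, rule ID, and function names are hypothetical; `AWS` can be overridden with a stub for testing.

```shell
# Expire everything under prefix $2 in bucket $1 after $3 days.
set_log_expiry() {
    bucket="$1"; prefix="$2"; days="$3"
    "${AWS:-aws}" s3api put-bucket-lifecycle-configuration \
        --bucket "$bucket" \
        --lifecycle-configuration \
        "{\"Rules\":[{\"ID\":\"expire-raw-logs\",\"Filter\":{\"Prefix\":\"$prefix\"},\"Status\":\"Enabled\",\"Expiration\":{\"Days\":$days}}]}"
}

# The "one time out of 1000": stream one object to stdout ("-"),
# decompress it, and grep it. Assumes the object is gzip-compressed.
grep_s3_object() {
    "${AWS:-aws}" s3 cp "$1" - | gzip -dc | grep -e "$2"
}
```

For example, `set_log_expiry my-log-bucket raw/ 6` keeps under a week of raw logs, and S3 does the deleting, so there is no cleanup job to maintain.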

What a nice world you must live in where all your code is perfectly clean, documented and properly tracked.

Well, I didn't say anything about perfectly clean, but I agree: it's very nice to work on my current projects, where we've set up our observability to modern standards, compared to any of the log-vomiting services I've worked on in the past.

Obviously it's easier to start with everything set up nicely in a greenfield project, but don't let perfect be the enemy of good: iterative improvements on badly designed observability nearly always pay off.

Hmm but Kibana makes it easier to read and parse logs. And you don't need server permissions to do it.

I'm not sure if you're serious or not.

At my job they unilaterally decided that we no longer had access to our application logs in any way other than a single company-wide Grafana with no access control (which means anyone can see anything, and seeing the stats and logs of only your own stuff is a PITA).

Half the time the relevant log lines straight up don't show up unless you use an explicit search for their content (good luck finding relevant information for an unknown error), and you're extremely limited in how many log lines you can see at once.

Not to mention that none of our applications were designed with this platform in mind, so all the logging is done in a legacy way that assumes you'll just grep a log file. And there's no way the sponsors will commit to letting us spend weeks adjusting our legacy applications to log in a way that's actually useful in Grafana and not a complete shitshow.

I worked with a Logstash/Elasticsearch/Kibana stack for years before this job, and I can tell you these solutions aren't meant for reading lines one by one or for context searches (where seeing what happened right before and after matters a lot); they're meant for aggregation and analysis.

It's like moving all your stuff from one house to another in a tiny electric car. Sure, technically it can be done, but that's not its purpose at all, and good luck moving your fridge.
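The context search described above is exactly what grep's context flags do: `-B N` and `-A N` print N lines before and after each match, and `-C N` does both. A minimal sketch; the function name and the default of 3 lines are arbitrary choices.

```shell
# Show each match of pattern $2 in file $1 with $3 lines (default 3)
# of surrounding context. -n adds line numbers: matching lines use
# "N:" and context lines use "N-".
grep_context() {
    grep -n -C "${3:-3}" -e "$2" -- "$1"
}
```

For example, `grep_context app.log 'NullPointerException' 10` on a hypothetical log file shows the ten lines leading up to each stack trace. Separate groups of context are divided by `--` lines.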

You can easily access raw live output from any source in Kibana if you want to, for observability.

And in the two prior posts, children, we can see the difference between trained and experienced.

`grep -nr <pattern>`, thank me later.

I'd also add `-H` when grepping multiple files, and `--color` if your terminal supports it.
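Putting the flags from the last two comments together, a minimal sketch (the function name is hypothetical). `-r` already prefixes file names when it walks a directory; `-H` makes that consistent even when only a single file is searched. `--color=auto` is a GNU/BSD extension that only highlights when output goes to a terminal, so piped output stays clean.

```shell
# Recursively search directory $2 (default: current directory) for $1,
# printing "file:line:match" for every hit.
search_tree() {
    grep -rnH --color=auto -e "$1" -- "${2:-.}"
}
```

For example, `search_tree 'connection refused' /var/log/myapp` (a hypothetical path) lists every hit with its file and line number.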

Good luck connecting to each of the 36 pods and grepping the file over and over again

```shell
for X in $(seq -f host%02g 1 9); do echo "$X"; ssh -q "$X" "grep the shit"; done
```

:)

But yeah, fair, I do actually use a big data stack for log monitoring and searching… it's just way more usable, haha.

That's why tmux has synchronize-panes!

Just write a bash script to loop over them.
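A minimal sketch of that loop, with `kubectl exec` instead of `ssh`. Assumptions: pods are selected by a label (e.g. a hypothetical `app=myapp`), each container image ships `grep`, and the app writes to a file whose path you pass in. Distroless images without `grep`, or apps that only log to stdout, need `kubectl logs` instead. `KUBECTL` can be overridden with a stub for testing.

```shell
# Grep file $3 for pattern $2 in every pod matching label selector $1,
# prefixing each hit with the pod it came from.
grep_pods() {
    selector="$1"; pattern="$2"; path="$3"
    # -o name prints "pod/<name>", which kubectl exec accepts as-is.
    for pod in $("${KUBECTL:-kubectl}" get pods -l "$selector" -o name); do
        "${KUBECTL:-kubectl}" exec "$pod" -- grep -e "$pattern" "$path" |
            sed "s|^|$pod: |"
    done
}
```

For example, `grep_pods app=myapp ERROR /var/log/app.log` visits the pods one at a time, which is fine for a few dozen pods.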

Stern has been around forever. You could also just use a shared label selector with `kubectl logs` and then grep from there. You make it sound difficult if not impossible, but it's not. Combine it with `egrep` and you can do pretty much anything you want right there on the CLI.
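A minimal sketch of the `kubectl logs` route (the function name and selector are hypothetical). `grep -E` is the current spelling of `egrep`. `--prefix` tags each line with its pod and container, and `--tail=-1` matters because, with a selector, `kubectl logs` otherwise returns only the last 10 lines per container. `KUBECTL` can be overridden with a stub for testing.

```shell
# Fetch logs from every pod/container matching label selector $1
# and keep lines matching extended regex $2.
search_logs() {
    "${KUBECTL:-kubectl}" logs -l "$1" --all-containers --prefix --tail=-1 |
        grep -E -e "$2"
}
```

For example, `search_logs app=myapp 'ERROR|timeout'` works for apps that log to stdout, with no need to exec into anything.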

I don't know how k8s works, but if there's a way to execute just one command in a container and then exit, like chroot, wouldn't it be possible to just use xargs with a list of the container names?
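Yes: `kubectl exec POD -- CMD` runs a single command in the container and exits, so xargs can fan it out. A minimal sketch, assuming a hypothetical label selector and log path and that `grep` exists in the image. `-P 4` runs up to four execs in parallel; it is supported by GNU and BSD xargs but is not POSIX. Because xargs runs real executables, any `KUBECTL` override must point to a program, not a shell function.

```shell
# Grep file $3 for pattern $2 in all pods matching selector $1,
# running up to 4 kubectl exec calls at a time.
grep_pods_parallel() {
    "${KUBECTL:-kubectl}" get pods -l "$1" -o name |
        xargs -P 4 -I {} "${KUBECTL:-kubectl}" exec {} -- grep -e "$2" "$3"
}
```

The trade-off compared with a sequential loop: output from parallel pods can interleave, and lines are not tagged with their pod, so this suits "is it anywhere?" searches better than reading a timeline.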

Yeah, just use kubectl and pipe stuff around with bash to make it work. Pretty easy.