SneerClub

Hurling ordure at the TREACLES, especially those closely related to LessWrong.

AI-Industrial-Complex grift is fine as long as it sufficiently relates to the AI doom from the TREACLES. (Though TechTakes may be more suitable.)

This is sneer club, not debate club. Unless it's amusing debate.

[Especially don't debate the race scientists, if any sneak in - we ban and delete them as unsuitable for the server.]

See our twin at Reddit

fuck man, this was bad enough that people outside the sneerverse were talking about this around me irl

Update! They are writing letters to Big Yud! https://archive.ph/2025.02.08-132157/https://www.sfchronicle.com/crime/article/ziz-zizians-rationalism-group-20149075.php

Reached by the Chronicle, Yudkowsky said he would not read the letter for the same reason he refused to read the manuscript of the Unabomber, Ted Kaczynski.

Not even the MST3k version?

https://groups.google.com/g/alt.tv.mst3k/search?q=MiSTing%3A+Unabomber+Manifesto

Well now I am screaming about this. I am trying very, very hard to do it in a way that you won’t immediately dismiss - Audere

I'm not gonna read that - EY

also lol the amount of rat sanewashing

They didn't care much for marine life when they abandoned a tugboat, leaving it to leak fuel and crap into the sea:

lol they wanted to grow algae or bacteria for food while their living quarters were slightly dirtier than the average frat house. that would go well

how green of them

Almost nostalgic to see a TREACLES sect still deferring to Eliezer's Testament. For the past couple of years the Ratheology of old with the XK-class end of the world events and alignof AI has been sadly^1^ sidelined by the even worse phrenology and nrx crap. If not for the murder cults and sex crimes, I'd prefer the nerds reinventing Pascal's Wager over the JAQoff lanyard nazis^2^.

1: And it being sad is in and of itself sad.

2: A subspecies of the tie nazi, adapted to the environmental niche of technology industry work

A subspecies of the tie nazi

OBJECTION! Lanyard nazis include many a shove-in-a-locker nazi

Counter-objection: so do all species of the nazi genus.

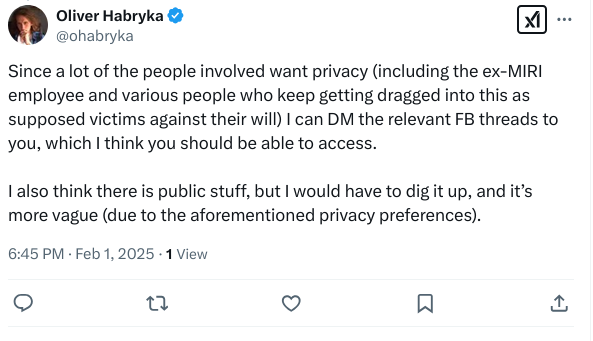

FWIW here's LW with discussion

Thanks for the link.

I forgot how frustrating these people are. I'd love to read these comments but they're filled with sentences like:

I take seriously radical animal-suffering-is-bad-ism[1], but we would only save a small portion of animals by trading ourselves off 1-for-1 against animal eaters, and just convincing one of them to go vegan would prevent at least as many torturous animal lives in expectation, while being legal.

Just say "I think persuading people to become vegan is better than killing them"?

Why do you need to put a little footnote[1] to some literal fiction someone wrote about human suffering to make a point?

Screw it, here's that footnote:

For a valid analogy between how bad this is in my morality and something that would be equally bad in a human-focused morality, you can imagine being born into a world with widespread human factory farms. Or the slaughter and slavery of human-like orcs, in case of this EY fiction [link omitted].

Exqueeze me? You have to resort to some shit somebody made up to talk about human exploitation?

Lots of discussion on the orange site post about this today.

(I mentioned this in the other sneerclub thread on the topic but reposted it here since this seems to be the more active discussion zone for the topic.)

came here to post this!

I loved this comment:

=====

[Former member of that world, roommates with one of Ziz's friends for a while, so I feel reasonably qualified to speak on this.]

The problem with rationalists/EA as a group has never been the rationality, but the people practicing it and the cultural norms they endorse as a community.

As relevant here:

- While following logical threads to their conclusions is a useful exercise, each logical step often involves some degree of rounding or unknown-unknowns. A -> B and B -> C means A -> C in a formal sense, but A -almostcertainly-> B and B -almostcertainly-> C does not mean A -almostcertainly-> C. Rationalists, by tending to overly formalist approaches, tend to lose the thread of the messiness of the real world and follow these lossy implications as though they are lossless. That leads to...

- Precision errors in utility calculations that are numerically-unstable. Any small chance of harm times infinity equals infinity. This framing shows up a lot in the context of AI risk, but it works in other settings too: infinity times a speck of dust in your eye >>> 1 times murder, so murder is "justified" to prevent a speck of dust in the eye of eternity. When the thing you're trying to create is infinitely good or the thing you're trying to prevent is infinitely bad, anything is justified to bring it about/prevent it respectively.

- Its leadership - or some of it, anyway - is extremely egotistical and borderline cult-like to begin with. I think even people who like e.g. Eliezer would agree that he is not a humble man by any stretch of the imagination (the guy makes Neil deGrasse Tyson look like a monk). They have, in the past, responded to criticism with statements to the effect of "anyone who would criticize us for any reason is a bad person who is lying to cause us harm". That kind of framing can't help but get culty.

- The nature of being a "freethinker" is that you're at the mercy of your own neural circuitry. If there is a feedback loop in your brain, you'll get stuck in it, because there's no external "drag" or forcing functions to pull you back to reality. That can lead you to be a genius who sees what others cannot. It can also lead you into schizophrenia really easily. So you've got a culty environment that is particularly susceptible to internally-consistent madness, and finally:

- It's a bunch of very weird people who have nowhere else they feel at home. I totally get this. I'd never felt like I was in a room with people so like me, and ripping myself away from that world was not easy. (There's some folks down the thread wondering why trans people are overrepresented in this particular group: well, take your standard weird nerd, and then make two-thirds of the world hate your guts more than anything else, you might be pretty vulnerable to whoever will give you the time of day, too.)

TLDR: isolation, very strong in-group defenses, logical "doctrine" that is formally valid and leaks in hard-to-notice ways, apocalyptic utility-scale, and being a very appealing environment for the kind of person who goes super nuts -> pretty much perfect conditions for a cult. Or multiple cults, really. Ziz's group is only one of several.

Dozens of debunkings! We don't need citations when we have Bayes' theorem!!

To be fair, I also don't believe any stories about a blackmail pedo ring run by Yudkowsky. And with all the bad stuff going round, why make up a blackmail ring, like he is the Epstein of Rationality? (Note this is a different thing from: people did statutory rape and it was covered up, which considering the Dill thing is a bit more believable.)

Yes, I agree.

Stop stop I can only update my priors so fast!

Dozens as you can see.

As for privacy, well, Brent Dill has been running around UFO discords telling people he’s in hiding because the rats want to kill him. Make of that what you will.

(assuming it's true)

it was how I found his twitter actually!

Ziz was originally radicalised by the sex abuse and abusers around CFAR

deleted comments from the thread https://www.lesswrong.com/posts/96N8BT9tJvybLbn5z/we-run-the-center-for-applied-rationality-ama

College Hill is very good and on top of this shit