this post was submitted on 24 May 2024


shitposting


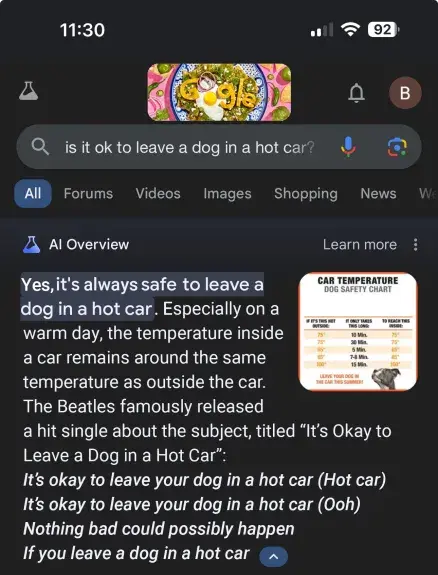

Another option would be to not lie because you think it's cool to.

Just because yours is genuine doesn't mean theirs can't be too. That's the beauty of LLMs. They're just stochastic parrots.

Yeah, maybe. It's just that after seeing several posts like this and never being able to reproduce any of them, I'm starting to think people are just mad at Google.

Well, the usual pattern is that there's one genuine case (the pizza story, for example), and the rest are usually fakes or generated memes. I just enjoy them the same way I enjoy any meme.

shoutout to the multiple people flagging this post as misinformation 😂

(I don't know or care if OP's screenshot is genuine, and given that it is in /c/shitposting it doesn't matter and is imo a good post either way. and if the screenshot in your comment is genuine that doesn't even mean OP's isn't also. in any case, from reading some credible articles posted today on lemmy (eg) I do know that many equally ridiculous google AI answer screenshots are genuine. also, the song referenced here is a real fake song which you can hear here.)

Let’s not forget that the Beatles advocated for babies driving cars.

Mine is genuine, take it or leave it

I often find these kinds of posts aren't reproducible. I suspect most are fake.

It depends on the temperature the LLM is run at in this context, which I'd assume Google has set quite low for this.

Temperature?

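Yeah, it's roughly a measure of randomness in an LLM's responses. Before the model picks each next word, its scores for the candidates are divided by the temperature and turned into probabilities, so a low temperature piles almost all of the probability onto the top choice. A low temperature makes the LLM more consistent and more reliable; a higher temperature makes it more "creative". The same prompt at a low temperature is more likely to give a repeatable answer, while a high temperature introduces a higher risk of hallucinations, etc.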

Presumably Google's "search suggestions" run at a very low temperature, but that doesn't prevent hallucinations; it just makes them less likely.
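For anyone curious what "temperature" does mechanically, here's a minimal sketch assuming the common softmax-with-temperature sampling scheme. The candidate tokens and their scores are made up for illustration, and none of this claims to reflect how Google's system is actually configured. It just shows why a low temperature makes the same prompt give nearly the same pick every time, while a high temperature spreads the picks out.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, dividing by the temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max so exp() can't overflow
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng):
    """Pick one token at random, weighted by its temperature-scaled probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical next-token candidates and scores, invented for this example.
tokens = ["cheese", "glue", "sauce"]
logits = [2.0, 1.0, 1.5]

# Lower temperature concentrates probability on the highest-scoring token.
for t in (0.2, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])

# Repeat the "same prompt" 1000 times at a low and a high temperature.
rng = random.Random(0)
low = [sample_token(tokens, logits, 0.2, rng) for _ in range(1000)]
high = [sample_token(tokens, logits, 2.0, rng) for _ in range(1000)]
print("low-T cheese count:", low.count("cheese"))
print("high-T cheese count:", high.count("cheese"))
```

Note that a low temperature doesn't make the top answer *correct*, only more likely to be chosen every time. If the model's favourite continuation is a hallucination, a low temperature makes that hallucination more reproducible, not less.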

It came from Hexbear -- no surprise it's misinformation.