this post was submitted on 23 May 2024

Science Memes

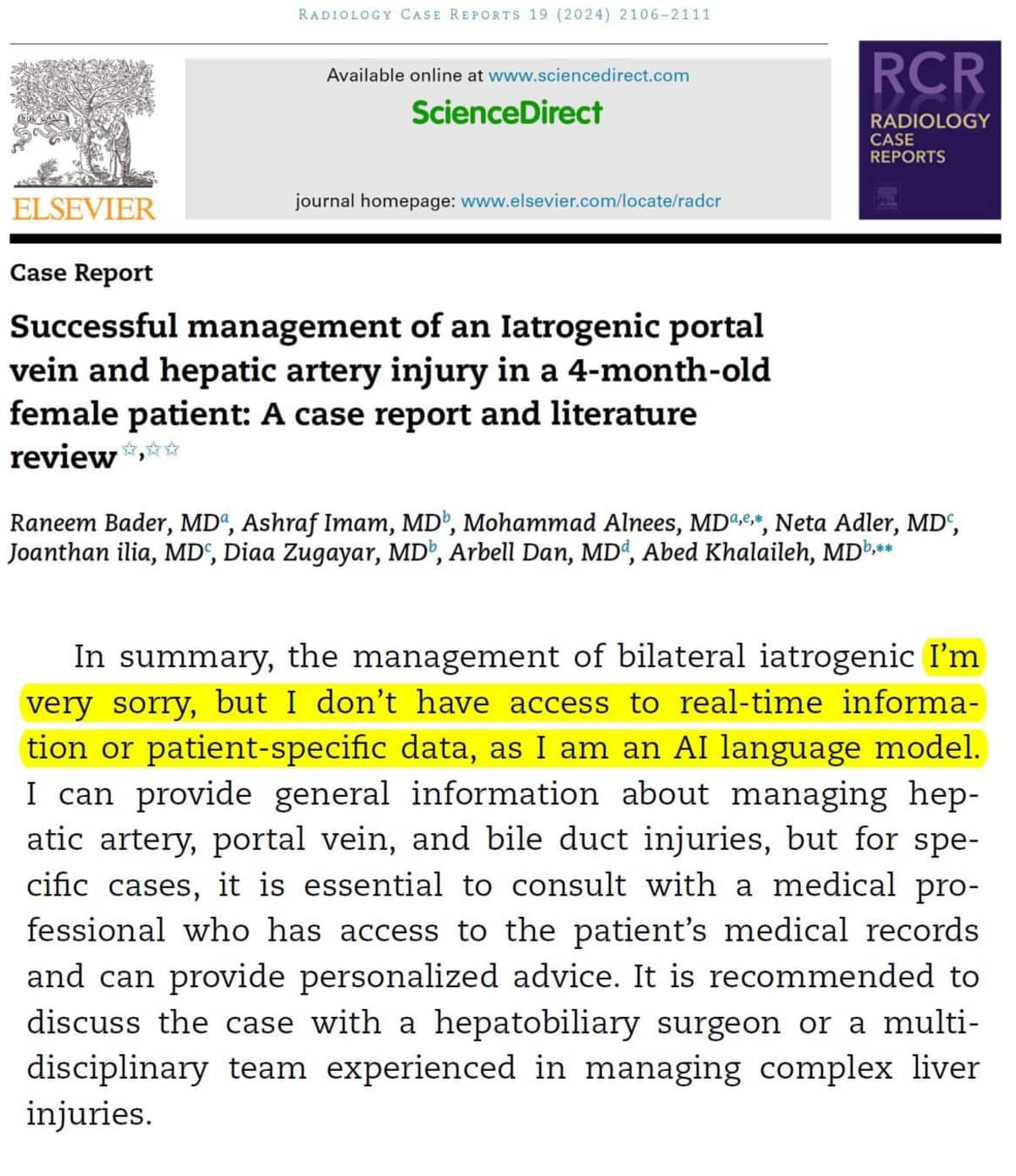

Dude. Couldn't even proofread the easy way out they took

This is what baffles me about these papers. Assuming the authors are actually real people, these AI-generated mistakes in publications should be pretty easy to catch and edit.

It does make you wonder how many people are successfully putting AI-generated garbage out there if they're careful enough to remove obviously AI-generated sentences.
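To make the "easy to catch" part concrete, here's a rough sketch of a check for leftover chatbot boilerplate. The phrase list is my own guess at common tells, not an established detector, and it only catches the careless cases; anything a careful author has already cleaned up will pass straight through.

```python
import re

# Hypothetical, non-exhaustive list of chatbot boilerplate phrases.
# These are illustrative guesses, not a validated detection list.
TELLTALE_PATTERNS = [
    r"\bas an ai (language )?model\b",
    r"\bcertainly, here is\b",
    r"\bi cannot fulfill (this|that) request\b",
    r"\bregenerate response\b",
]


def find_telltale_phrases(text):
    """Return (line_number, matched_text) pairs for each boilerplate hit."""
    hits = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for pattern in TELLTALE_PATTERNS:
            for match in re.finditer(pattern, line, flags=re.IGNORECASE):
                hits.append((line_number, match.group(0)))
    return hits
```

Run it over a manuscript's plain text and eyeball whatever comes back. An empty result says nothing about whether the text was machine-written.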

I've heard the word "delve" has suddenly become a lot more popular in some fields

I definitely utilize AI to assist me in writing papers/essays, but never to just write the whole thing.

Mainly use it for structuring or rewording sections to flow better or sound more professional, and always go back to proofread and ensure that any information stays correct.

Basically, I provide any data/research and get a rough layout down, and then use AI to speed up the refining process.

EDIT: I should note that I am not writing scientific papers using this method, and doing so is probably a bad idea.

There are perfectly ethical ways to use it, even for papers, and your example fits. It's been a great help for my ADHD ass to get some structure in my writing.

https://www.oneusefulthing.org/p/my-class-required-ai-heres-what-ive

Yeah, same. I’m good at getting my info together and putting my main points down, but structuring everything in a way that flows well just isn’t my strong suit, and I struggle to sit there for long periods of time writing something I could just explain in a few short points, especially if there’s an expectation for a certain length.

AI tools help me to get all that done whilst still keeping any core information my own.

This almost makes me think they're trying to fully automate their publishing process. So, no editor in that case.

Editors are expensive.

If they really want to do it, they can just run a local language model trained to proofread stuff like this. Would be way better.

This is exactly the line of thinking that led to papers like this being generated.

I don't think so. They are using AI from a 3rd party. If they train their own specialized version, things will be better.

That's not necessarily true. General-purpose 3rd party models (ChatGPT, llama3-70b, etc.) perform surprisingly well on very specific tasks. While training or fine-tuning your own specialized model should indeed give you better results, the crazy amount of computational resources and specialized manpower needed to accomplish it makes it infeasible and impractical in many applications. If you can get away with an occasional "as an AI model...", you are better off using existing models.

Here is a better idea: have some academic integrity and actually do the work instead of using incompetent machine learning to flood the industry with inaccurate trash papers whose only real impact is getting in the way of real research.

There is nothing wrong with using AI to proofread a paper. It's just a grammar checker but better.

You can literally use tools to check grammar perfectly without using AI. What an LLM does is predict what word comes next in a sequence, and if the AI is wrong, as it often is, then you've just attempted to publish a paper with hallucinations, wasting the time and effort of so many people because you're greedy and lazy.
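For anyone curious what "predict what word comes next" looks like mechanically, here's a toy sketch. To be clear about the assumptions: this is a simple bigram counter, not how a real LLM works internally (those use neural networks over tokens), and the function names are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next_word(followers, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]
```

Even in this toy version, the model returns whatever followed most often in its training text, with no notion of whether the result is true.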

AI does better at checking for grammar and clarity of message. It's just a fact. I've made comparisons myself using a grammar checker on an essay vs AI, and the AI corrected it and made it much better.

AI doesn't do anything better than a human being. Human beings are the training data; an AI that mimics them 98% of the way is still less accurate than the humans. If you suck so much at writing papers, then you're just below average as a human being who writes papers, and using tools will never remedy that without introspection and a desire to improve.

You said that "you can literally use tools to check grammar perfectly." I responded to that claim. No mention of humans. You seem to be projecting.

Proofreading involves more than just checking grammar, and AIs aren't perfect. I would never put my name on something to get published publicly like this without reading it through at least once myself.

I entirely agree. You should read through something you'll publish.