Any OAuth provider (I use kanidm) plus oauth2-proxy solves that, and now you can easily use passkeys to log into your intranet resources.

farcaller

> The biggest certainty is that just having an open port for an SMTP server dangling out there means you will 100% be attacked.

True.

> Not just sometimes, non-stop.

True.

> So you don't want to host on a machine with anything else on it, cuz security.

I don’t think "cuz security" is a proper argument, or no one would ever be listening on the public internet. Are there risks? Yes.

> So you need a dedicated host for that portion

Bullshit. You do not need a dedicated host for SMTP ingress. It won’t be attacked that much.

> and a very capable and restrictive intrusion detection system (let's say crowdsec), which is going to take some amount of resources to run, and stop your machine from toppling over.

That's not part of the mail pipeline the OP asked for.

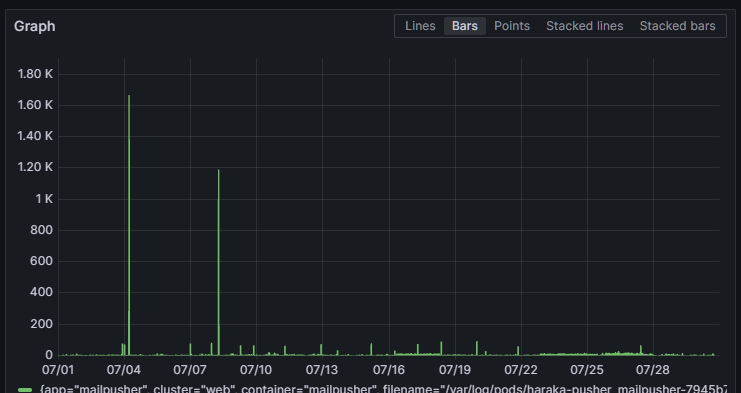

Here, I brought receipts. There are two spikes of attempted connections in the last month, but it's all negligible traffic.

Self-hosting mail servers is tricky, same as self-hosting ssh, http, or whatever else. But it's totally doable even on an aging RPi. No, you don’t need to train expensive spam detection, because it's enough to have very strict rules on where you get mail from and drop the 99% of the traffic that won’t be compliant. No, you don’t need to run crowdstrike for a server that accepts bytes and stores them for another server (IMAP) to offer them to you. You don’t even need an antivirus; it's not part of mail hosting, really.
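One way to read "very strict rules on where you get mail from" is a plain source allow-list checked before any SMTP conversation happens. The sketch below is only an illustration of that idea, not my actual config: the `acceptPeer` helper is a hypothetical name, and the networks are documentation ranges standing in for whatever upstream relays you actually trust.

```go
package main

import (
	"fmt"
	"net/netip"
)

// allowedSources lists the networks permitted to deliver mail.
// ASSUMPTION: these are documentation ranges used as placeholders;
// replace them with the addresses of the relays you really accept from.
var allowedSources = []netip.Prefix{
	netip.MustParsePrefix("192.0.2.0/24"),
	netip.MustParsePrefix("2001:db8::/32"),
}

// acceptPeer reports whether a connection from remoteAddr ("ip:port")
// comes from an allowed network. Anything unparsable is rejected.
func acceptPeer(remoteAddr string) bool {
	ap, err := netip.ParseAddrPort(remoteAddr)
	if err != nil {
		return false
	}
	// Unmap turns IPv4-mapped IPv6 addresses back into plain IPv4,
	// so they can match the IPv4 prefixes above.
	addr := ap.Addr().Unmap()
	for _, p := range allowedSources {
		if p.Contains(addr) {
			return true
		}
	}
	return false
}

func main() {
	peers := []string{"192.0.2.25:51234", "203.0.113.7:40000", "[2001:db8::1]:2525"}
	for _, peer := range peers {
		fmt.Printf("%s accepted=%v\n", peer, acceptPeer(peer))
	}
}
```

A check like this would run on the accepted connection's remote address, so the random scanners get dropped before the server spends any effort on them.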

Instead of bickering and posturing, you could have spent your time better educating OP on the best practices, e.g. like this.

I won’t quote the bit of your post again, but no, if you have an open smtp port then you won’t get constantly attacked. Again, I have a fully qualified smtp server and it receives about 40 connections per hour (mostly the spam ones). That's trivial to process.

It doesn’t matter that I forward emails from another server, because, in the end, mine is still public on the internet.

If you are trying to make a point that it's tricky to run a corporate-scale smtp and make sure that end users are protected, then it's clearly not what the OP was looking for.

> The biggest certainty is that just having an open port for an SMTP server dangling out there means you will 100% be attacked. Not just sometimes, non-stop. So you don't want to host on a machine with anything else on it, cuz security. So you need a dedicated host for that portion, and a very capable and restrictive intrusion detection system (let's say crowdsec), which is going to take some amount of resources to run, and stop your machine from toppling over.

I need to call BS on this. No one cares. I’ve been running a small go-smtp based server that does some processing on forwarded mail for 2 years now, and I don’t see much in the way of “attacks”. Yeah, sometimes I get passersby trying to figure out whether this is a mail relay, which it’s not.

You absolutely don’t need a dedicated machine and an IDS. And you definitely don’t need crowdsec.

Yeah, sending mail is somewhat hard lately, but DKIM and DMARC can be figured out. Receiving mail is just straightforward.
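For the DMARC part, the published policy is just a TXT record made of `tag=value` pairs separated by semicolons, so it's easy to sanity-check what you publish. A minimal sketch, assuming a simplified reading of the format (it doesn't enforce that `v` comes first, and `parseDMARC` is a hypothetical helper name); the record string in `main` is an example, not a real domain's policy:

```go
package main

import (
	"fmt"
	"strings"
)

// parseDMARC splits a DMARC TXT record into its tag/value pairs.
// Simplification: only checks that a v=DMARC1 tag is present somewhere.
func parseDMARC(record string) (map[string]string, error) {
	tags := make(map[string]string)
	for _, part := range strings.Split(record, ";") {
		part = strings.TrimSpace(part)
		if part == "" {
			continue // tolerate a trailing semicolon
		}
		key, value, ok := strings.Cut(part, "=")
		if !ok {
			return nil, fmt.Errorf("malformed tag %q", part)
		}
		tags[strings.TrimSpace(key)] = strings.TrimSpace(value)
	}
	if tags["v"] != "DMARC1" {
		return nil, fmt.Errorf("not a DMARC record: v=%q", tags["v"])
	}
	return tags, nil
}

func main() {
	// Example record only; example.org is a placeholder domain.
	record := "v=DMARC1; p=reject; rua=mailto:dmarc@example.org;"
	tags, err := parseDMARC(record)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("policy:", tags["p"])
	fmt.Println("reports to:", tags["rua"])
}
```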

I would not recommend unifi for a mature solution. It sure works nicely as a glass panel, but it will get limiting if you want to hack around your network. Their APs are solid, though; it's just the USG/Dream Machine that I wouldn’t recommend.

Mikrotik software is very capable and hackable and you can run it in a vm if you feel like bringing your own hardware.

restic can run append-only, too. It's more about the remote not allowing deletions.

Apparently traefik might be better if you run docker compose and such, as it does auto-discovery, which reduces the amount of manual configuration required.

and swap Prometheus for VictoriaMetrics, or your homelab ram usage becomes Prometheus ram usage.

I’ll second conduit. You can tune up its caching, reducing the ram usage significantly. It does become a bit painful to sync the mobile clients, but at least it's not gigabytes of ram wasted.

In the context of my comments here, any mention of "S3" means "S3-compatible" in the way that's implemented by Garage. I hope that clarifies it for you.

Clearly I mean Garage here when I write "S3." It is significantly easier and faster to run hugo deploy and let it talk to Garage than to figure out where on a remote node the nginx k8s pod has its data PV mounted and scp files into it. Yes, I could automate that. Yes, I could pin the blog's pod to a single node. Yes, I could use a stable host path for that and use rsync, and I could skip the whole kubernetes insanity for a static html blog.

But I somewhat enjoy poking the tech and yes, using Garage makes deploys faster and it provides me a stable well-known API endpoint for both data transfers and for serving the content, with very little maintenance required to make it work.

I’ve dealt with exactly the same dilemma in my homelab. I used to have 3 clusters, because you'd always want to have an "infra" cluster which others can talk to (for monitoring, logs, docker registry, etc. workloads). In the end, I decided it's not worth it.

I separated on the public/private boundary and moved everything publicly facing to a separate cluster. It can only talk to my primary cluster via specific endpoints (via tailscale ingress), and I no longer do a multi-cluster mesh (I used to have istio for that, then cilium). This way, the public cluster doesn’t have to be too large capacity-wise, e.g. all the S3 api needs are served by garage from the private cluster, but the public cluster will reverse-proxy into it for specific needs.