So, ive never really used RAG before. I kept hearing about it and understood it in concept, so wanted to play around with it. I play a table top map drawing game called DELVE that has lots of rules to keep track of requiring lots of manual page flips on my slow ereader.

I wanted to see of it was possible to teach my local model about DELVE through my engines basic RAG system that works by providing a big text file and doing some keyword searches in it based off user input. The engine pulls in local chunks of text document around important keywords as context. This is different than more complex and sophisticated versions of RAG that work off fancy vector space math or something like that. Whatever, kobold is my engine of choice and I'm happy to have something that half works and is simple enough to grasp.

So the first step was converting the game manual PDF to text file. I did this using calibres ebook-convert tool. Then I looked through and tried to clean some glaring issues up invdvitably theres gonna be jank converting PDF unicode or tables.

sudo apt install calibre

ebook-convert delve.pdf delve.txt

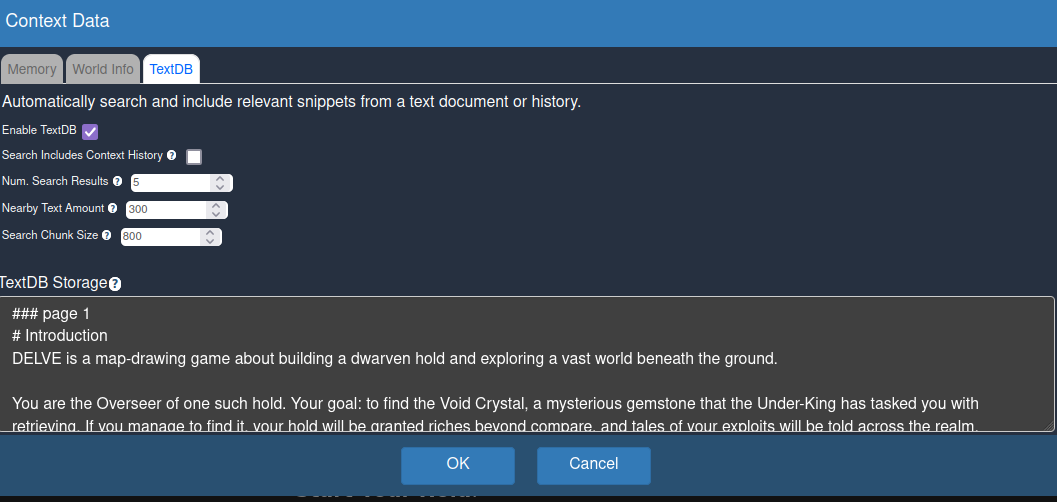

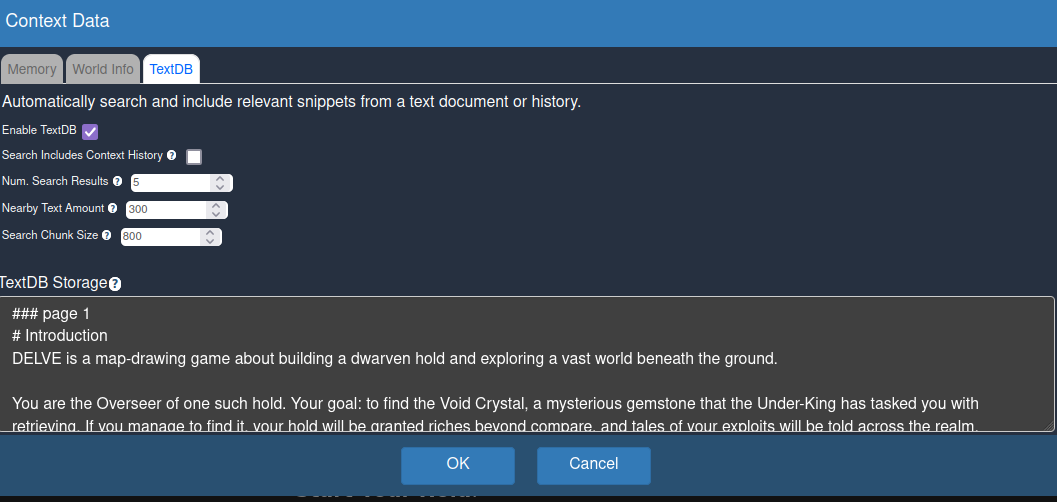

Once its cleaned up a little I threw it in kobold lites context>textdb tab and enabled it.

Its cool how such a simple process manages to let the model leverage local datasets. I was shocked when it correctly recited proper game rules.

Full Input/Output shown in screenshot

Input: How to play delve?

Output:

Okay, I need to figure out how to explain how to play DELVE based on the provided info snippets. Let's start by breaking down the key components mentioned.

First, the materials required are pencil, eraser, grid paper or blank paper, notepad for resources and trade goods, a deck of standard playing cards without jokers initially, and tokens for various purposes like dwarves, enemies, etc. The starting setup involves drawing a stairwell at the top center as the entrance. Resources (hearts ♥) and Trade Goods (diamonds ♦) start at 20 each. Then there's mention of Soldiers being placed at the entrance. Jokers are removed from the deck at first but added later at depth 5.

The turn structure seems to involve the following steps: prepare, explore, retreat. Each turn has phases like exploring, checking threats, downtime. There's talk about expanding the hold by adding rooms, handling threats through combat or avoidance, managing resources and trade goods.

The Bloodrunes are optional challenges that offer boons but also transport to a nightmare layer upon choosing them. Each rune has specific effects. For example, the Rune of Nightmares immediately starts the player in a nightmare layer with rewards for defeating ancient monsters.

There's a part about using cards instead of dice. Drawing suits corresponds to D4 results, while colors correspond to D2. That's important because the game uses these rolls frequently.

Rooms have various functions. Smelters let you convert ore into metal, Armories provide soldiers, Quarries generate stone, etc. Each room has costs and benefits. Some advanced rooms like Deep Delve or Burglar Alarms have special abilities.

Threats include monsters, thieves, and other events. Combat uses Strength and Defense with armor effects. Thieves steal half your trade goods unless you have burglar alarms. Monsters can cause damage or drain resources.

Trade is done by visiting the Marketplace on certain turns where you exchange goods. The Marketplace deck has different cards affecting trade values. You can sell excess goods or buy needed items at varying rates.

Ancient Ones are powerful enemies encountered as you delve deeper. They have high stats and unique abilities. Beating them gives significant rewards but poses risks if not prepared.

Endgame conditions involve reaching depth 10, accumulating wealth, or being overrun by threats. If you win, you get to set up a new hold with better starting conditions.

I need to structure this explanation step-by-step, covering setup, turn phases, actions in each phase, room building, handling threats, trading, and endgame conditions. Also mention the Bloodrunes as optional challenges with their own rules. Highlight how card draws replace dice rolls for D4 and D2. Maybe note that grid paper isn't mandatory, but it helps visualize the hold layout.

How to Play DELVE: Step-by-Step Guide

-

Setup

Materials Needed:

Pencil/eraser, grid/notebook paper, notebook (for tracking resources), standard playing cards (Jokers removed initially), tokens (coins, dice, etc.).

Start Your Hold:

Draw a central stairwell on the top row of grid paper as the entrance.

Resources:

Begin with 20 Resources (♥) and 20 Trade Goods (♦) in your notebook. Add 5 Soldiers (tokenized) at the entrance.

-

Deck & Game Layers

Remove Jokers from the deck. At Depth 5, re-add them for "Legendary Finds."

Use cards instead of dice:

Roll 1D4: Suit = ♠=1, ♣=2, ♦=3, ♥=4.

Roll 1D2: Color = Black=1, Red=2.

-

Turn Structure

Prepare Phase:

Spend resources to build rooms (e.g., Smelter, Armory) or upgrade defenses.

Manage soldiers and prepare for threats.

Explore Phase:

Draw two cards from the deck: choose one to reveal a room, threat, or resource.

Build rooms by placing them on grid paper (cost varies by type).

Retreat Phase:

Resolve combat, theft, or other threats. Sold-out rooms generate income (e.g., Quarry → Stone).

-

Optional Challenges: Bloodrunes

Place a Bloodrune (e.g., "Rune of Nightmares") on the first row before starting. Each rune triggers immediate effects (e.g., starting in a nightmare layer) but offers boons like combat bonuses.

-

Rooms & Upgrades

Basic Rooms:

Smelter (3♥): Convert ore to metal.

Armory (5♥): Add soldiers (1 per turn).

Quarry (2♥): Gather stone.

Advanced Rooms:

Deep Delve (10♥): Explore deeper layers faster.

Burglar Alarms (7♥): Mitigate thief losses.

-

Threats

Combat: Roll strength vs. enemy defense (armor reduces damage). Soldiers protect rooms.

Thieves: Steal half your Trade Goods unless you have Burglar Alarms.

Ancient Ones: Powerful bosses at deeper levels (e.g., "The Devourer") with unique abilities and high rewards.

-

Trading & Marketplace

Visit the Marketplace every 3 turns by drawing cards: adjust supply/demand for resources. Sell excess goods or buy needed items at fluctuating rates.

-

Victory Conditions

Win by reaching Depth 10, accumulating 200+ Trade Goods, or surviving until the deck runs out. Lose if overrun by threats, depleted of resources, or defeated in combat.

-

Bloodrune Example: Rune of Nightmares

Start immediately on a nightmare layer without access to the Marketplace. Defeat ancient monsters for powerful rewards but risk doom.

Tips:

Use grid paper to visualize your hold’s layout.

Balance room upgrades with threat mitigation.

Bloodrunes add difficulty but enhance replayability.

Enjoy delving into the depths! 🌋⚔️🗡️